Testing graph databases

One of the main components of GUAC is a graph database that stores all the metadata pertaining to the software supply chain. Although I promised a while back to write a series of articles about security concepts around the software development life cycle, this won’t be one of them. Here, I only want to focus on graph databases, testing several of them for the GUAC use case. There is an issue to analyze and select a database backend (by default, the GUAC architecture allows replacing the backend behind the GraphQL interface, that is, the backend where the metadata is actually stored).

We started GUAC with a Neo4j backend, which we then partially migrated to the GraphQL design. But, there are several issues (including licensing questions) regarding this solution and this requires us to switch to another database. There is a suggestion to use ArangoDB, which is currently work in progress (almost completed by the time this gets published, slightly more than a month after starting the experiments described within).

I am using this article to study various graph database backends, both because these would help GUAC and because some of my (future/potential) side projects involve graph databases. Thus, the results here do not imply that GUAC will change its database backend. Instead, this should be taken only as a personal exploration.

Besides the 2 databases mentioned above, I will consider 4 others:

- Cayley: inspired by Google’s graph database, selected as I have been following the repository for several years, when I was last exploring alternatives to Neo4j;

- TerminusDB: wants to be “Git for data”, offering a user experience that would be very similar to using Git. Also interesting to look at since the codebase uses significant amounts of Prolog;

- JanusGraph: part of the Linux Foundation, integrated visualizations, in use by several large companies, main database to play with Gremlin/TinkerPop;

- NebulaGraph: High speed processing, milliseconds of latency, benchmarked to be the fastest of Neo4j, JanusGraph and itself.

This gives a total of 6 databases to test. But, before doing the actual performance testing, let’s compare these 6 databases across non-functional dimensions, as summarized in the next table (where each database name is a link to the section of this post where the performance analysis and results for that backend are included). Since the post is long, you can see the final conclusion and a summary of all results in this section.

| Database | Development language(s) | Go client | Python client | Haskell client | License | Has Docker | In NixOS |
|---|---|---|---|---|---|---|---|
| Neo4j | Java | ✅ | ✅ | 🟡¹ | GPLv3, commercial² | ✅ | ✅ |
| ArangoDB | C++ | ✅ | ✅ | ❌ | Apache2 | ✅ | ✅ |
| Cayley | Go | ✅ | 🟡³ | 🟡⁴ | Apache2 | ✅ | ✅ |
| TerminusDB | Prolog/Rust | ❌ | ✅ | ❌ | Apache2 | ✅ | ❌ |
| JanusGraph | Java | 🟡⁵ | 🟡⁶ | 🟡⁷ | Apache2, CC-BY-4.0⁸ | ✅ | 🟡⁹ |
| NebulaGraph | C++ | ✅ | ✅ | ❌ | Apache2 | ✅ | ❌ |

It seems that the only language that all these databases have a driver for is Python (with some caveats). Haskell support is severely lacking, as the only existing packages are out of date or are very generic. Fortunately, all these databases can run in Docker (with caveats). As such, the testing scenario will use Python and connect to the database running in a Docker container. It is possible that in the final projects I’ll use the NixOS deployment directly, but that is out of scope for this post.

Testing scenario

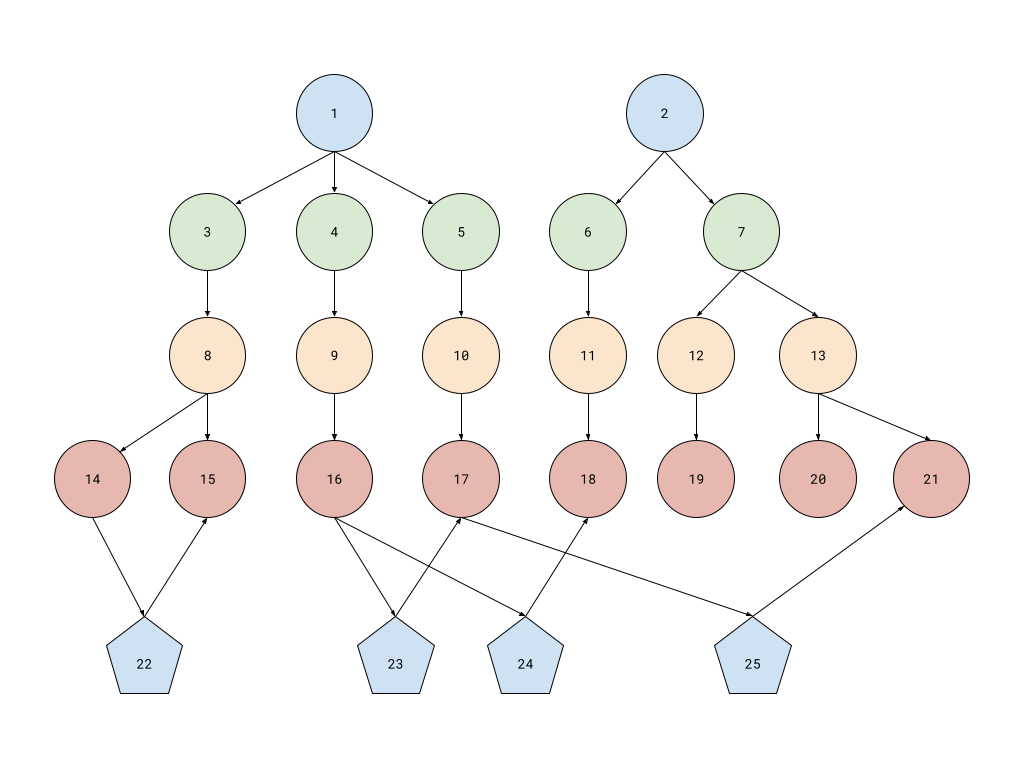

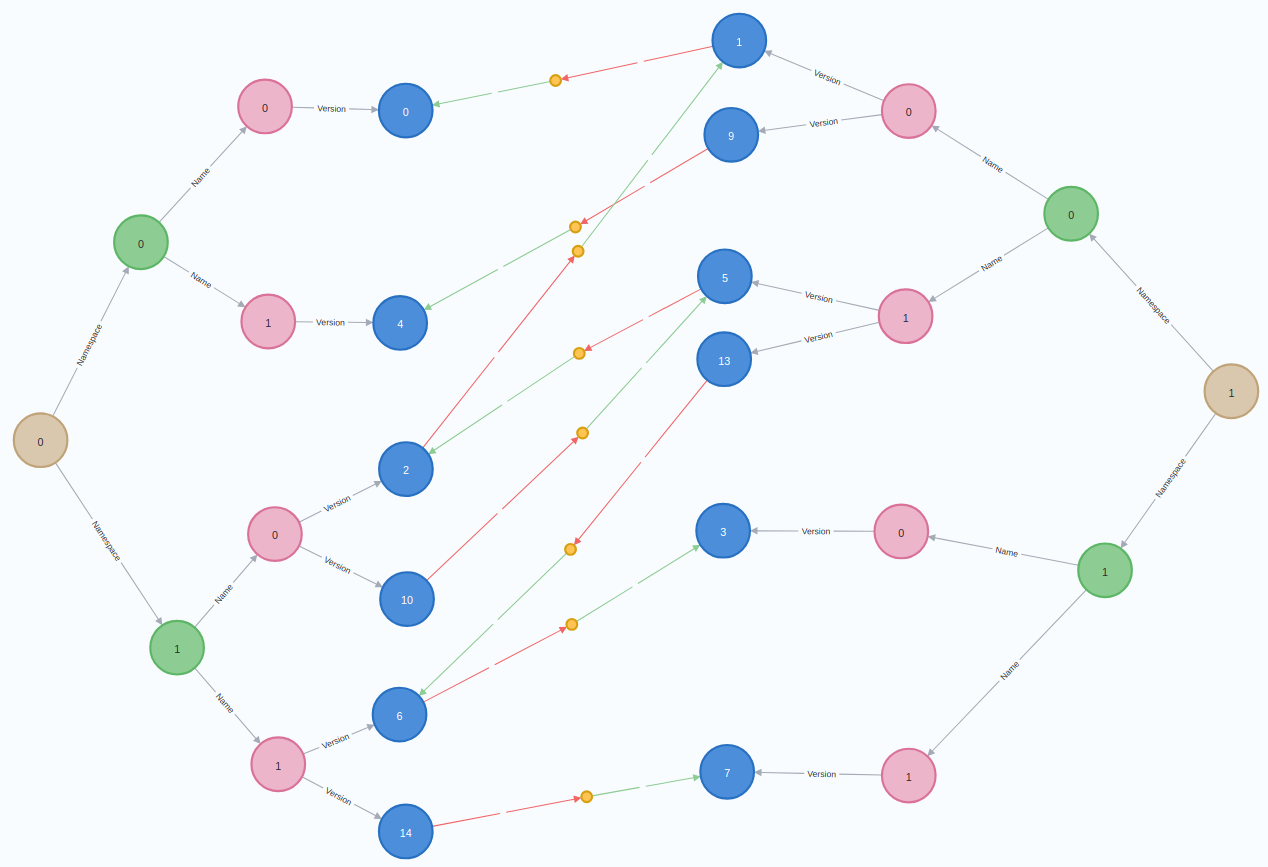

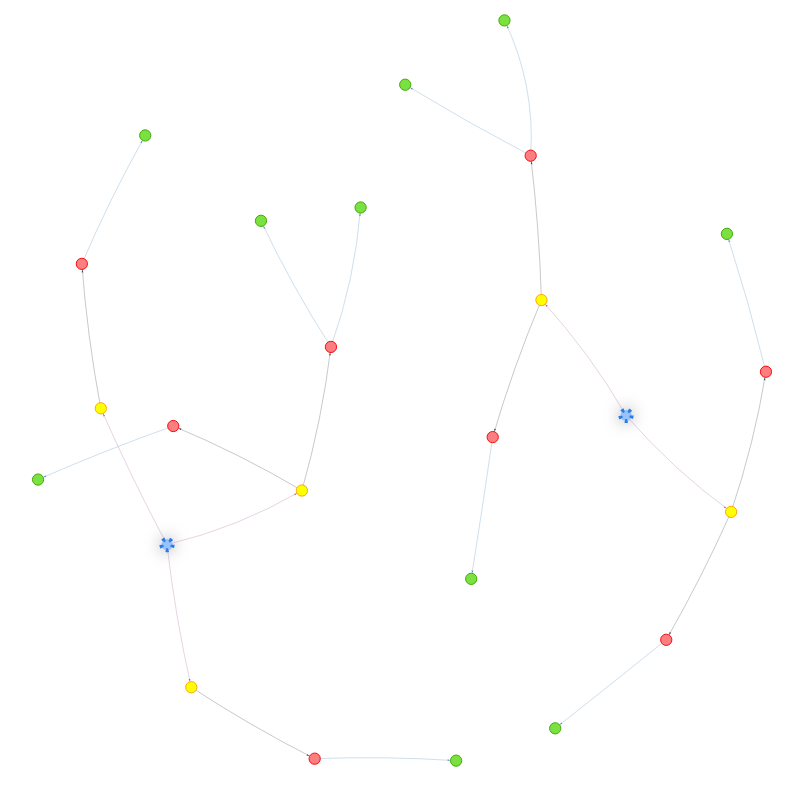

Since my own personal projects are only at the idea stage (meaning the use cases are not yet clearly defined), the testing performed in this article will simulate scenarios needed in GUAC. Fortunately, there is some overlap with the incipient ideas I have in mind. Broadly, we want to ingest packages and the dependencies between them. We will use something similar to GUAC’s Package ontology: for each package we need 4 different nodes: one for the type of the ecosystem, one for an optional namespace that the ecosystem might support, one for the name of the package and one for the version. These should match the pURL format for package identifiers, without the optional qualifiers and subpath fields. For the dependencies, we will only add one additional node linking 2 version nodes, as in the following image:

Here, nodes 1 and 2 represent two different ecosystems (e.g., PyPI and npm). Nodes 3 to 7 represent various namespaces. Nodes 8 to 13 represent different package names and nodes 14 to 21 represent various versions of these packages. Nodes 22 to 25 represent dependency information such as:

- node 22 states that package 14 depends on package 15. These are 2 versions of the same package name, so this is either a bad configuration or some scenario which would require bootstrapping;

- nodes 23 and 24 show two dependencies of package 16, one of which (18) is in the other ecosystem;

- node 25 also records a cross-ecosystem dependency. It also records a transitive dependency chain between 16 and 21 (16 - 23 - 17 - 25 - 21).

Note: this is a simplification of the data model from GUAC, but it should cover the critical parts. This means that the results from here will not translate 100%, but we will still have some ideas about forward paths.

Data generation

Since I am interested in how these databases scale, I need to be able to ingest a wide range of packages. Hence, instead of using real data, we’ll have to use fake, synthetic input. This can be done by picking a number \(N\) for the total number of packages to ingest and representing each package by a number \(x\), from \(0\) to \(N-1\). To build the pURL description, we can start by writing \(x\) in a base \(B\) (equivalent to a branching factor in the package trie): we have \(x = x_0 + x_1B + x_2B^2 + \ldots\) and from this we can extract the other components that identify the package:

- package ecosystem: node \(x_0\) of the root of the trie

- package namespace: node \(x_1\) of the package ecosystem selected above

- package name: node \(x_2\) of the package namespace selected above

- package version: a new child of the name node selected above, labeled \(x\) (the full package name)

That is, the pURL for a package labeled \(x\) is pkg:\(x_0\)/\(x_1\)/\(x_2\)@\(x\). This has really interesting properties, as we will see in a short while.
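As a quick sanity check of this encoding, here is a minimal sketch of the digit extraction. The helper name `purl_for` is mine and exists only for this illustration; it is not part of the benchmark harness.

```python
def purl_for(x: int, B: int) -> str:
    """Build the synthetic pURL for package x from its base-B digits."""
    x0 = x % B               # ecosystem: least significant digit
    x1 = (x // B) % B        # namespace: second digit
    x2 = (x // (B * B)) % B  # package name: third digit
    return f"pkg:{x0}/{x1}/{x2}@{x}"


print(purl_for(14, 2))    # pkg:0/1/1@14
print(purl_for(123, 10))  # pkg:3/2/1@123
```

Higher digits of \(x\) are ignored for the path components, which is why several versions end up under the same package name once \(N > B^3\).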

Moving to the dependencies between these packages, since this is another dimension we need to test scalability on, let’s pick a number \(K\) to denote the maximum number of dependencies each package can have. Then, for a package \(x\), we consider it to depend on the set \(\left\{ \left[\frac{x}{k}\right] \mid k \in \{2, 3, \ldots, K + 1\}\right\}\), where \([\cdot]\) denotes the integer part. That is, take all numbers \(k\) from 2 to \(K+1\) (so that there are \(K\) of them), divide \(x\) by \(k\) and record a dependency on the quotient, if one was not already present. For example, package \(100\) can depend on packages \(50, 33, 25, 20, \ldots\) (the first \(K\) of these), whereas package \(2\) can only depend on packages \(1\) and \(0\) (if \(K = 1\) then only on package \(1\)). As \(K\) increases, the connectivity between nodes in the graph increases fast. If \(N\) is large enough, a node can take part in up to \(2K + \frac{K(K+1)}{2}\) dependency relations in total, counting both directions (for example, if \(K=3\), node \(x=6\) has 3 dependencies, namely 1, 2 and 3, and is in the dependency set of 12, 13, 18, 19, 20, 24, 25, 26, and 27). The proof of this statement is left as an exercise to the reader :)
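The examples in this paragraph can be reproduced with a small sketch. The function names `dependencies` and `dependents` are hypothetical helpers for this illustration only, and the brute-force scan in `dependents` is only reasonable for tiny \(N\).

```python
def dependencies(x: int, K: int) -> list[int]:
    """Distinct quotients x // k for k in 2..K+1, never x itself."""
    deps = []
    for k in range(2, K + 2):
        q = x // k
        if q != x and q not in deps:
            deps.append(q)
    return deps


def dependents(y: int, K: int, N: int) -> list[int]:
    """All packages below N that have y among their dependencies."""
    return [x for x in range(N) if y in dependencies(x, K)]


print(dependencies(100, 4))   # [50, 33, 25, 20]
print(dependencies(2, 3))     # [1, 0]
print(dependencies(6, 3))     # [3, 2, 1]
print(dependents(6, 3, 100))  # [12, 13, 18, 19, 20, 24, 25, 26, 27]
```

For \(K=3\) and \(x=6\) this gives 3 outgoing and 9 incoming relations, 12 in total, which matches \(2K + \frac{K(K+1)}{2}\).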

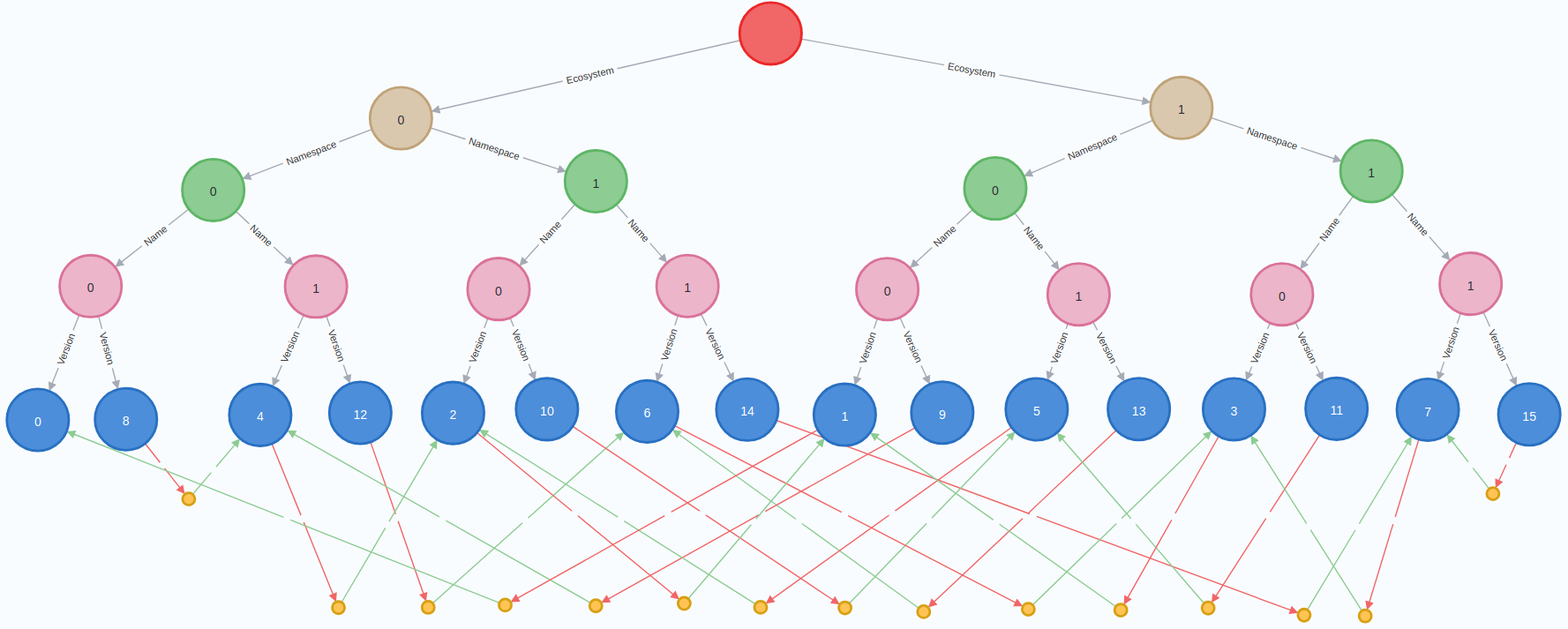

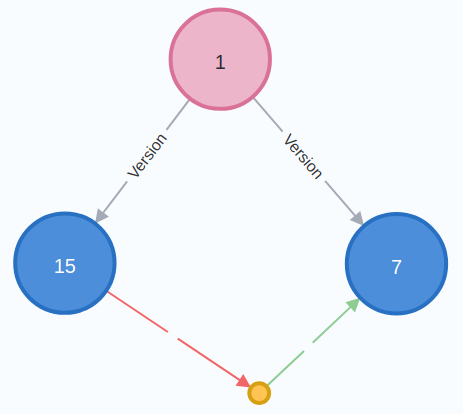

An ingestion scenario is uniquely identified by the 3 numbers, \(N\), \(B\), and \(K\). For example, the following image is the result of ingesting \(N=16\) packages, with a branching factor of \(B=2\) and each package having at most \(K=1\) dependencies:

Here, the root of the package trie is the single empty red node, ecosystems are marked by the 2 brown nodes, namespaces by the 4 green ones, package names by the 8 pink ones, packages (i.e., package versions) by the 16 blue ones and dependencies by the small yellow nodes. The dependency information is encoded by a red arrow, followed by a dependency node, followed by a green arrow to the dependency.

This method of generating data brings a few useful properties that can be used to validate that the results of queries/ingestions are valid (this is especially useful when doing ingestion on multiple threads):

- Cross ecosystem dependencies are dependencies where the parent and the dependency packages have different remainders when divided by \(B\). For example, \(14\) and \(7\) have different parity, thus this is a cross ecosystem dependency in the scenario from the picture above.

- Dependencies between versions of the same package (invalid configurations, or recording a need to bootstrap) are dependencies where the parent and the dependency packages have the same values for \(x_0\), \(x_1\) and \(x_2\). That is, they are equal modulo \(B^3\). In the image above, this is the case of packages \(15\) and \(7\) (if we were to use \(K=2\) then we would also have had a similar situation for \(11\) and \(3\), as well as for \(12\) and \(4\)).

It is trivial to prove these properties, so they are left as an exercise to the reader :)
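For the impatient reader, both properties can at least be checked by brute force on the small scenario from the picture (\(N=16\), \(B=2\), \(K=1\)). This is only a sketch; the function names are mine and are not used by the harness.

```python
def dependency_pairs(N: int, K: int) -> set[tuple[int, int]]:
    """All (parent, dependency) pairs generated for packages 0..N-1."""
    pairs = set()
    for x in range(N):
        for k in range(2, K + 2):
            y = x // k
            if y != x:  # package 0 would otherwise depend on itself
                pairs.add((x, y))
    return pairs


def cross_ecosystem(N: int, B: int, K: int) -> set[tuple[int, int]]:
    """Pairs whose ecosystems differ: different remainders modulo B."""
    return {(x, y) for x, y in dependency_pairs(N, K) if x % B != y % B}


def same_name(N: int, B: int, K: int) -> set[tuple[int, int]]:
    """Pairs under the same package name: equal modulo B**3."""
    return {(x, y) for x, y in dependency_pairs(N, K) if x % B**3 == y % B**3}


print(sorted(same_name(16, 2, 1)))
# [(15, 7)]
print(sorted(cross_ecosystem(16, 2, 1), reverse=True))
# [(14, 7), (13, 6), (10, 5), (9, 4), (6, 3), (5, 2), (2, 1), (1, 0)]
```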

Ingestion tests

When ingesting the data, there are 2 major scenarios to consider: ingesting only packages (which is in general a write-only query, to add the missing nodes), and ingesting dependencies (which will need to locate existing packages and then add the dependency node between them – we assume, like in GUAC, that each dependency node is created between packages already ingested, as this should simplify the queries on the backend).

Ingesting packages can be done in 2 ways: ingest packages one by one as they are “found” (generated from \(x\)) and ingest packages all at once, in a batch (an extreme case, in general we might want to ingest batches of up to a certain number of packages). That is:

- For each \(x\), ingest package \(x\).

- Collect all packages in a list, ingest the list.

In the first case, we can ingest packages in increasing order or in a random order, to detect cases where the ingestion pattern might be relevant for performance. To simplify testing, all randomness will be generated with a fixed seed randomly selected to be 42.

Ingesting dependencies can be done in 3 different ways: ingesting each dependency one by one, in order or shuffled (by ingesting the 2 packages and then the dependency node); ingesting all dependencies of each package individually, in order or shuffled (by first collecting all dependency packages in a list, batch ingesting the parent package and the list of dependencies, and then batch ingesting the dependency nodes); or ingesting all dependencies at once in a batch. That is:

- For each \(x\), generate a list of up to \(K\) dependencies. For each dependency in the list, ingest \(x\) and the dependency package and then the dependency node. It is obvious that this results in duplicated effort, as package \(x\) will be attempted to be ingested \(K\) times, but \(K-1\) times it would be present in the database already.

- For each \(x\), generate a list of up to \(K\) dependencies. Then, from this list collect the set of all packages and ingest them all in a batch. When this is done, ingest all dependency nodes in a batch.

- Collect all packages in a list, expand the list to contain all dependencies (`concatMap`). Then, collect all packages and batch ingest them, then batch ingest all dependency nodes.

Thus, there are 5 different scenarios for ingestion. For each one of them we can define some qualifiers:

- ingest in increasing order or ingest in a shuffled order;

- ingest with all relevant indexes present or without any database index;

- ingest in a single thread or in multiple threads.

The last qualifier does not apply when ingesting everything in one big batch (all packages at once or all dependencies at once), since we’re doing a single database call, albeit with a large amount of data.

Between each ingestion experiment the database will be dropped, removing all nodes and indexes.

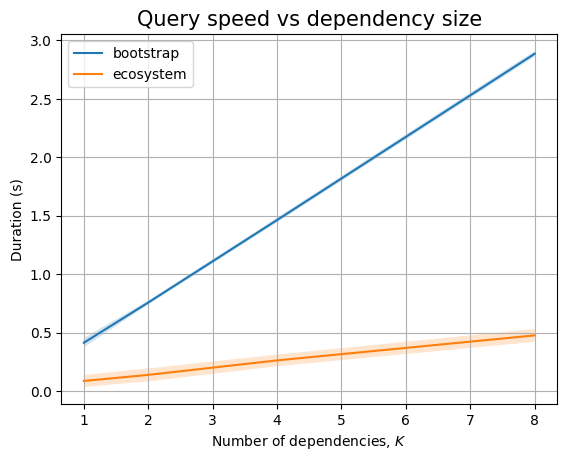

Query tests

After we run all ingestion experiments, we’ll perform one more ingestion and then run the following query experiments to test read-only performance:

- Find all dependency paths that are within the same package name (i.e., invalid, or requiring bootstrapping). In the graph above, this should return only the set \(\{15, 7\}\).

- Find all dependency paths that cross ecosystem boundaries. In the graph above, this is:

  \[\{\{14, 7\},\{13, 6\},\{10, 5\},\{9, 4\},\{6, 3\},\{5, 2\},\{2, 1\},\{1, 0\}\}\]

These are testing simple graph patterns and the results have vastly different cardinalities. These queries might easily translate to SQL, but larger path queries might not. However, due to length constraints, I decided to leave more advanced path queries for another article, running them only on a subset of these databases.

Since in general these queries are run by a single user, each will be run in a single-threaded environment. However, the performance of these queries might still be influenced by the presence of indexes, so we have 2 run modes: with and without indexes.

Generic code

There is a large amount of code that we’ll have to write. First, we can begin with some trivial imports and then define 2 dataclasses to hold packages and dependencies:

    import argparse
    import concurrent.futures
    import math
    import random
    import requests  # optional, needed for some databases
    import retry  # optional, needed for some ArangoDB cases
    import time  # optional, needed for some databases
    from collections import defaultdict
    from dataclasses import asdict, dataclass
    from datetime import datetime
    from typing import Callable, Self, Sequence

    @dataclass(frozen=True)
    class Package:
        ecosystem: str
        namespace: str
        name: str
        version: str

    @dataclass(frozen=True)
    class Dependency:
        parent: Package
        target: Package

Now, we can define the general class for an experiment. First, the initialization/construction parts:

    class Experiment:
        def __init__(self, N: int, B: int, K: int, shuffle: bool = False,
                     threaded: bool = False):
            self.N = N
            self.B = B
            self.K = K
            self.shuffle = shuffle
            self.threaded = threaded

        def setup(self, indexes: bool = True):
            pass

        # ...

In `__init__` we pass values for \(N\), \(B\) and \(K\) and also store the qualifiers for whether the experiment should run on multiple threads, or shuffle the data before ingestion.

Since each database needs to run specific code during initialization (e.g., set up indexes, set up initial structure), I decided to separate that into a `setup` function¹⁰. For the base case there is nothing to do here.

The next things to define are 2 helper methods that process a value \(x\) and generate the package pURL or the list of dependencies for the package:

    class Experiment:
        # ...

        def _generate_package(self, x: int) -> Package:
            version = x
            ecosystem, x = x % self.B, x // self.B
            namespace, x = x % self.B, x // self.B
            name = x % self.B
            return Package(f'{ecosystem}', f'{namespace}', f'{name}', f'{version}')

        def _generate_dependencies(self, x: int) -> Sequence[Dependency]:
            deps = []
            old = x  # last quotient seen; starting at x also skips self-dependencies
            parent = self._generate_package(x)
            for k in reversed(range(2, self.K + 2)):
                target = x // k
                if target == old: continue
                old = target  # remember it so that equal consecutive quotients are skipped
                deps.append(Dependency(parent, self._generate_package(target)))
            return deps

        # ...

Since we don’t want to duplicate edges (e.g., for \(x=4\) and \(K=4\), because \([4/3]=1\) and \([4/4]=1\) we would naively draw 2 edges between \(4\) and \(1\)), we generate the dependencies in reverse order, so that equal quotients are consecutive, and compare each quotient with the previously generated one.

Looking at the ingestion experiments, there are 2 categories: ones that run in a batch, ingesting everything all at once, and those that ingest one package/dependency one by one. Since we don’t want to duplicate code, let’s define runner functions to take care of timing, shuffling, and adding threading support as needed:

    class Experiment:
        # ...

        def _experiment(self, work: Callable[[int], None], reverse: bool = False):
            start = datetime.now()
            seq = range(self.N)
            if self.shuffle:
                random.seed(42)
                seq = random.sample(seq, self.N)
            elif reverse:
                seq = reversed(seq)
            if self.threaded:
                with concurrent.futures.ThreadPoolExecutor() as executor:
                    futures = [executor.submit(work, x) for x in seq]
                    for future in concurrent.futures.as_completed(futures):
                        pass  # no results to return
            else:
                for x in seq:
                    work(x)
            end = datetime.now()
            print(f'Duration: {end - start}')

        def _batch_experiment(self, work: Callable[[], None]):
            start = datetime.now()
            work()
            end = datetime.now()
            print(f'Duration: {end - start}')

        # ...

Observe how for batches there is no threading support and no shuffling defined here. Shuffling will be done when creating the work item, but threading is irrelevant since there is only one call to the database backend.

Now, we can define the ingestion experiments. First, the packages:

    class Experiment:
        # ...

        def ingest_packages(self):
            def work(x):
                self._do_ingest_package(self._generate_package(x))
            self._experiment(work)

        def batch_ingest_packages(self):
            def work():
                pkgs = [self._generate_package(x) for x in range(self.N)]
                if self.shuffle:
                    random.seed(42)
                    pkgs = random.sample(pkgs, self.N)
                self._do_ingest_batch_packages(pkgs)
            self._batch_experiment(work)

        # ...

We’re defining an inner `work` worker (using the worker-wrapper pattern from the Haskell world) and passing that to the 2 experiment runners. It would be possible to remove the duplication for the shuffling in `batch_ingest_packages`, but that refactoring is also left as an exercise to the reader.

Similarly, we can define the 3 methods to ingest dependencies:

    class Experiment:
        # ...

        def ingest_dependencies(self):
            def work(x):
                for dependency in self._generate_dependencies(x):
                    self._do_ingest_package(dependency.parent)
                    self._do_ingest_package(dependency.target)
                    self._do_ingest_dependency(dependency)
            self._experiment(work, reverse=True)

        def split_ingest_dependencies(self):
            def work(x):
                deps = self._generate_dependencies(x)
                if not deps: return
                pkgs = [self._generate_package(x)]
                for dependency in deps:
                    pkgs.append(dependency.target)
                self._do_ingest_batch_packages(pkgs)
                self._do_ingest_batch_dependencies(deps)
            self._experiment(work, reverse=True)

        def batch_ingest_dependencies(self):
            def work():
                pkgs = [self._generate_package(x) for x in range(self.N)]
                deps = []
                for x in range(self.N):
                    deps.extend(self._generate_dependencies(x))
                if self.shuffle:
                    random.seed(42)
                    pkgs = random.sample(pkgs, len(pkgs))
                    deps = random.sample(deps, len(deps))
                self._do_ingest_batch_packages(pkgs)
                self._do_ingest_batch_dependencies(deps)
            self._batch_experiment(work)

        # ...

The first method ingests data as it is generated, whereas the other two try to batch it, as discussed in the previous section. Note that the more advanced ingestion methods have been optimized from the naive versions listed below:

    class Experiment:
        # ...

        def naive_split_ingest_dependencies(self):
            def work(x):
                pkgs = set()
                deps = self._generate_dependencies(x)
                for dependency in deps:
                    pkgs.add(dependency.parent)
                    pkgs.add(dependency.target)
                if pkgs: self._do_ingest_batch_packages(pkgs)
                if deps: self._do_ingest_batch_dependencies(deps)
            self._experiment(work, reverse=True)

        def naive_batch_ingest_dependencies(self):
            def work():
                deps = set()  # can also be a list -- exercise to the reader :)
                for x in reversed(range(self.N)):
                    deps = deps.union(self._generate_dependencies(x))
                pkgs = set()
                for dependency in deps:
                    pkgs.add(dependency.parent)
                    pkgs.add(dependency.target)
                if self.shuffle:
                    random.seed(42)
                    # random.sample needs a sequence, not a set (Python 3.11+)
                    pkgs = random.sample(list(pkgs), len(pkgs))
                    deps = random.sample(list(deps), len(deps))
                if pkgs: self._do_ingest_batch_packages(pkgs)
                if deps: self._do_ingest_batch_dependencies(deps)
            self._batch_experiment(work)

        # ...

These versions try to use set operations to remove duplicates (and under real world scenarios we would have to use these). However, our experiments will run with very large numbers of batches, making the set operations really expensive. The naive versions can be up to 100 times slower than the optimized ones! Since we know patterns in the data (e.g., for split ingestion we always have the same parent package and each target is a new package; for batch ingestion we have all packages and all dependencies), we can remove the need for set operations. This speed gain only affects data generation, so it wouldn’t pollute the database benchmarks.

Next, let’s write the runners for the read-only queries. Here, I decided to not write a new helper, opting instead for a slightly repeated code:

    class Experiment:
        # ...

        def query_bootstrap(self):
            start = datetime.now()
            pkgPairs = self._do_query_bootstrap()
            end = datetime.now()
            print(f'Duration: {end - start}')

            valid = True
            B3 = self.B * self.B * self.B
            for parent, target in pkgPairs:
                parent = int(parent)
                target = int(target)
                if parent % B3 != target % B3:
                    valid = False
                    break
            if valid:
                pairs = set()
                for x in range(self.N):
                    for k in range(2, self.K + 2):
                        y = x // k
                        if x == y: continue
                        if x % B3 == y % B3:
                            pairs.add((x, y))
            print(f'Valid: {valid and len(pairs) == len(pkgPairs)}')

        def query_ecosystem(self):
            start = datetime.now()
            pkgPairs = self._do_query_ecosystem()
            end = datetime.now()
            print(f'Duration: {end - start}')

            valid = True
            for parent, target in pkgPairs:
                parent = int(parent)
                target = int(target)
                if parent % self.B == target % self.B:
                    valid = False
                    break
            if valid:
                pairs = set()
                for x in range(self.N):
                    for k in range(2, self.K + 2):
                        y = x // k
                        if x == y: continue
                        if x % self.B != y % self.B:
                            pairs.add((x, y))
            print(f'Valid: {valid and len(pairs) == len(pkgPairs)}')

        # ...

In both cases, we only measure the time taken to communicate with the database, and not the verification process. This is because in a real application we will not have a way or a need to validate the query results. This code exists only to validate that the ingestion was successful as we are experimenting with different query patterns. Each validation takes into account the properties we have discovered in previous sections and makes sure that only the valid answers are generated.

In order to control when the database gets cleared (both data and indices), we will also have a worker-wrapper pattern:

    class Experiment:
        # ...

        def clean(self):
            self._do_clean()

        # ...

This is not included as part of `__del__` as we want to control when the dataset gets cleared.

There is one remaining thing to do. We have seen a few helper functions above to perform the actual ingestion/querying, so we need to define them:

    class Experiment:
        # ...

        def _do_ingest_package(self, package: Package):
            pass

        def _do_ingest_batch_packages(self, packages: Sequence[Package]):
            pass

        def _do_ingest_dependency(self, dependency: Dependency):
            pass

        def _do_ingest_batch_dependencies(self, dependencies: Sequence[Dependency]):
            pass

        def _do_query_bootstrap(self):
            return []  # no data stored

        def _do_query_ecosystem(self):
            return []  # no data stored

        def _do_clean(self):
            pass

These are all empty/trivial. This is because we want each backend to only implement these (as well as `setup`, `__init__`, and `__del__` as needed): this is the strategy design pattern. This also gives us the benefit that we can measure the time needed to generate the synthetic data. Thus, we can efficiently measure the time it takes to ingest this data for each database backend, even in cases where data generation and data ingestion are interleaved. In the GUAC case, this is almost the difference between ingesting packages from the `guacone` command versus ingesting them from the GraphQL API.
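To make the division of labor concrete, here is a minimal sketch of what a backend could look like. The `InMemory` class is entirely hypothetical (none of the real backends in this post work this way), and the `Experiment` base below is a trimmed stand-in with only the hooks needed for the example, so that the snippet runs on its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Package:
    ecosystem: str
    namespace: str
    name: str
    version: str


@dataclass(frozen=True)
class Dependency:
    parent: Package
    target: Package


class Experiment:
    """Trimmed stand-in for the base class: only the overridable hooks."""
    def _do_ingest_package(self, package): pass
    def _do_ingest_dependency(self, dependency): pass
    def _do_query_ecosystem(self): return []
    def _do_clean(self): pass


class InMemory(Experiment):
    """Hypothetical backend that keeps everything in Python sets."""
    def __init__(self):
        self.packages = set()
        self.dependencies = set()

    def _do_ingest_package(self, package):
        self.packages.add(package)  # idempotent, like an upsert

    def _do_ingest_dependency(self, dependency):
        # Same assumption as in the text: both packages are already ingested.
        if not {dependency.parent, dependency.target} <= self.packages:
            raise ValueError("ingest both packages before the dependency")
        self.dependencies.add(dependency)

    def _do_query_ecosystem(self):
        # Return (parent, target) version labels, as the validators expect.
        return [(d.parent.version, d.target.version)
                for d in self.dependencies
                if d.parent.ecosystem != d.target.ecosystem]

    def _do_clean(self):
        self.packages.clear()
        self.dependencies.clear()


backend = InMemory()
p14 = Package('0', '1', '1', '14')  # package 14 with B=2
p7 = Package('1', '1', '1', '7')    # package 7 with B=2
backend._do_ingest_package(p14)
backend._do_ingest_package(p7)
backend._do_ingest_dependency(Dependency(p14, p7))
print(backend._do_query_ecosystem())  # [('14', '7')]
```

A real backend would replace the set operations with driver calls, but the shape stays the same: the base class owns timing, shuffling and threading, and the subclass only talks to the database.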

Finally, we need to write the boilerplate needed to set-up all the experiments and run them. I chose to start each experiment from the command line instead of writing a large script as this allows me to inspect the dataset, try various hypotheses, etc. Without further ado, here it is:

    backends = {
        'none': Experiment,
        'neo4j': Neo4j,
        'arango': Arango,
        'cayley': Cayley,
        'terminus': TerminusDB,
        'janus': JanusGraph,
        'nebula': NebulaGraph,
    }

    experiments = {
        'packages': Experiment.ingest_packages,
        'batch_packages': Experiment.batch_ingest_packages,
        'dependencies': Experiment.ingest_dependencies,
        'split_dependencies': Experiment.split_ingest_dependencies,
        'batch_dependencies': Experiment.batch_ingest_dependencies,
        # read queries
        'bootstrap': Experiment.query_bootstrap,
        'ecosystem': Experiment.query_ecosystem,
        # cleanup
        'clean': Experiment.clean,
    }

    def positive_int(arg: str):
        x = int(arg)
        if x <= 0:
            raise ValueError("Argument must be strictly positive")
        return x

    def main():
        parser = argparse.ArgumentParser(description="Benchmark Graph DB impls")
        parser.add_argument('backend', help="Backend to use", choices=backends)
        parser.add_argument('experiment', help="Experiment to run", choices=experiments)
        parser.add_argument('N', type=positive_int, help="Number of packages")
        parser.add_argument('B', type=positive_int, help="Branching factor")
        parser.add_argument('K', type=positive_int, help="Dependencies/package")
        parser.add_argument('-i', '--indexes', action='store_true', help="Create indexes in DB before ingestion")
        parser.add_argument('-s', '--shuffle', action='store_true', help="Shuffle data before ingestion")
        parser.add_argument('-t', '--threaded', action='store_true', help="Use threads to ingest")
        result = parser.parse_args()
        print(result)

        experiment = backends[result.backend](N=result.N, B=result.B, K=result.K,
                                              shuffle=result.shuffle,
                                              threaded=result.threaded)
        experiment.setup(result.indexes)
        experiments[result.experiment](experiment)

    if __name__ == '__main__':
        main()

Using `argparse` makes it easier to specify experiment qualifiers instead of having to manually pass in `True`/`False` arguments if all experiments were started using only positional arguments. I’m printing the result of parsing the command line arguments to log each experiment to a file which I can then process to extract the timing information¹¹.

With this setup done, it is time to start benchmarking. We can benchmark the `none` backend to test data generation, and the performance results for this mode are summarized here. Each database will have its own section to discuss implementation and then present the benchmark results.

Performance of the databases

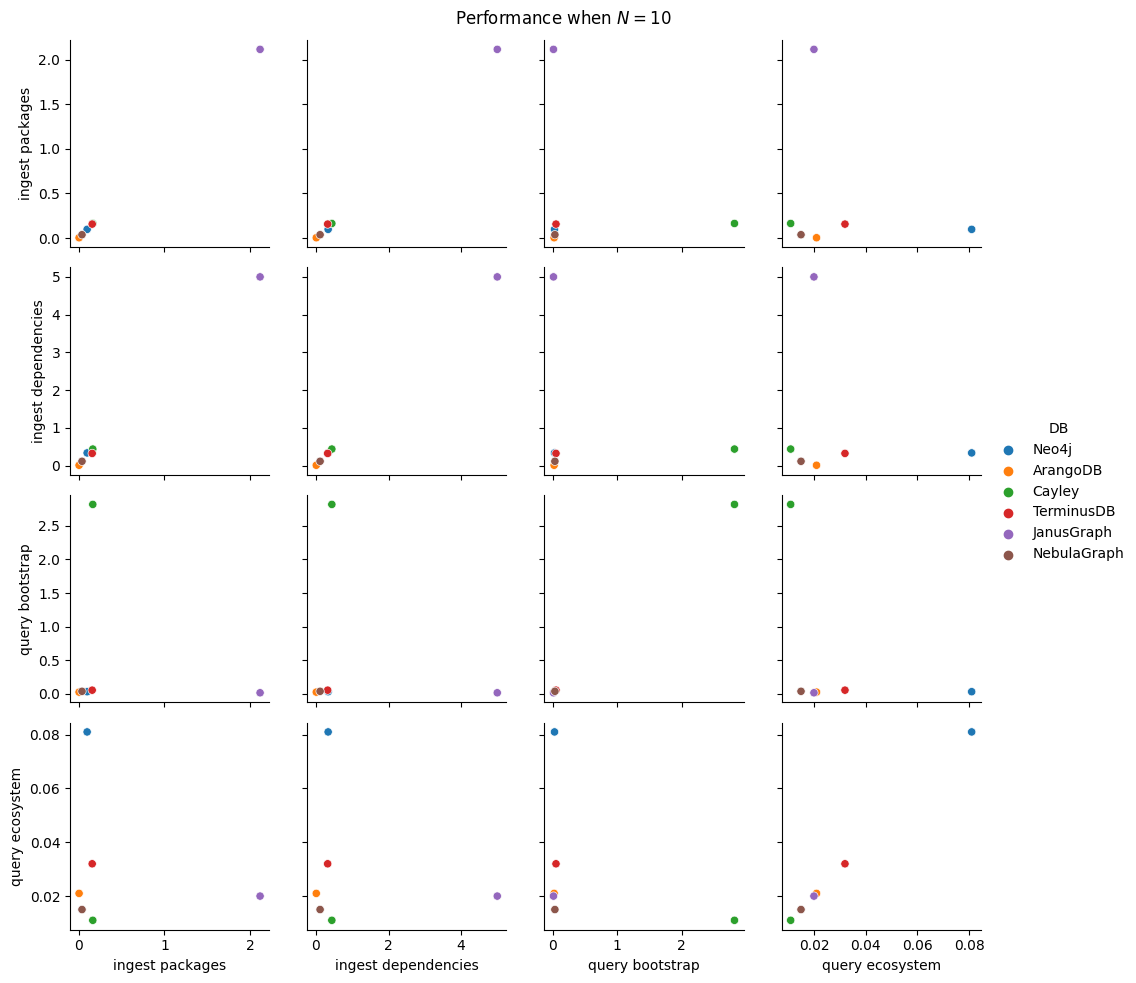

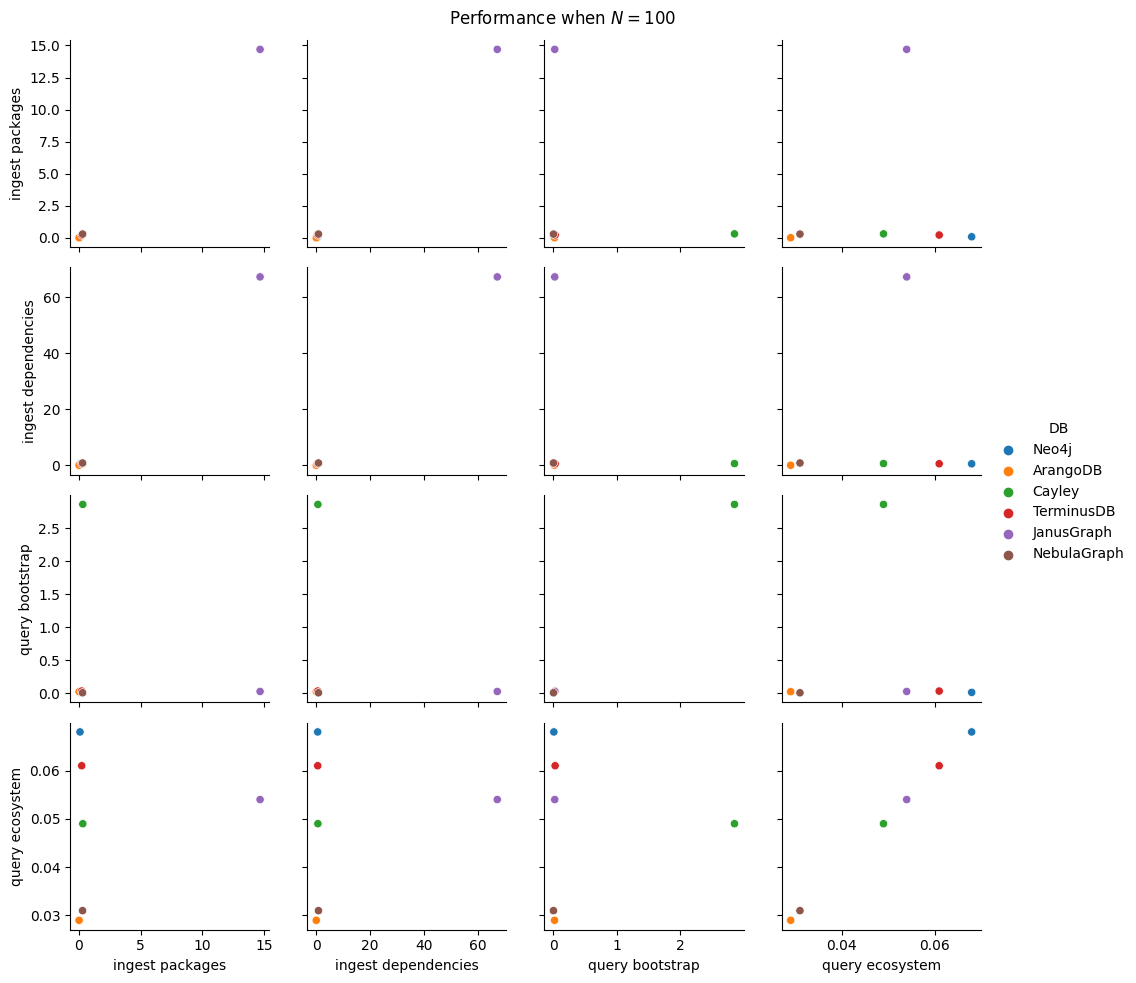

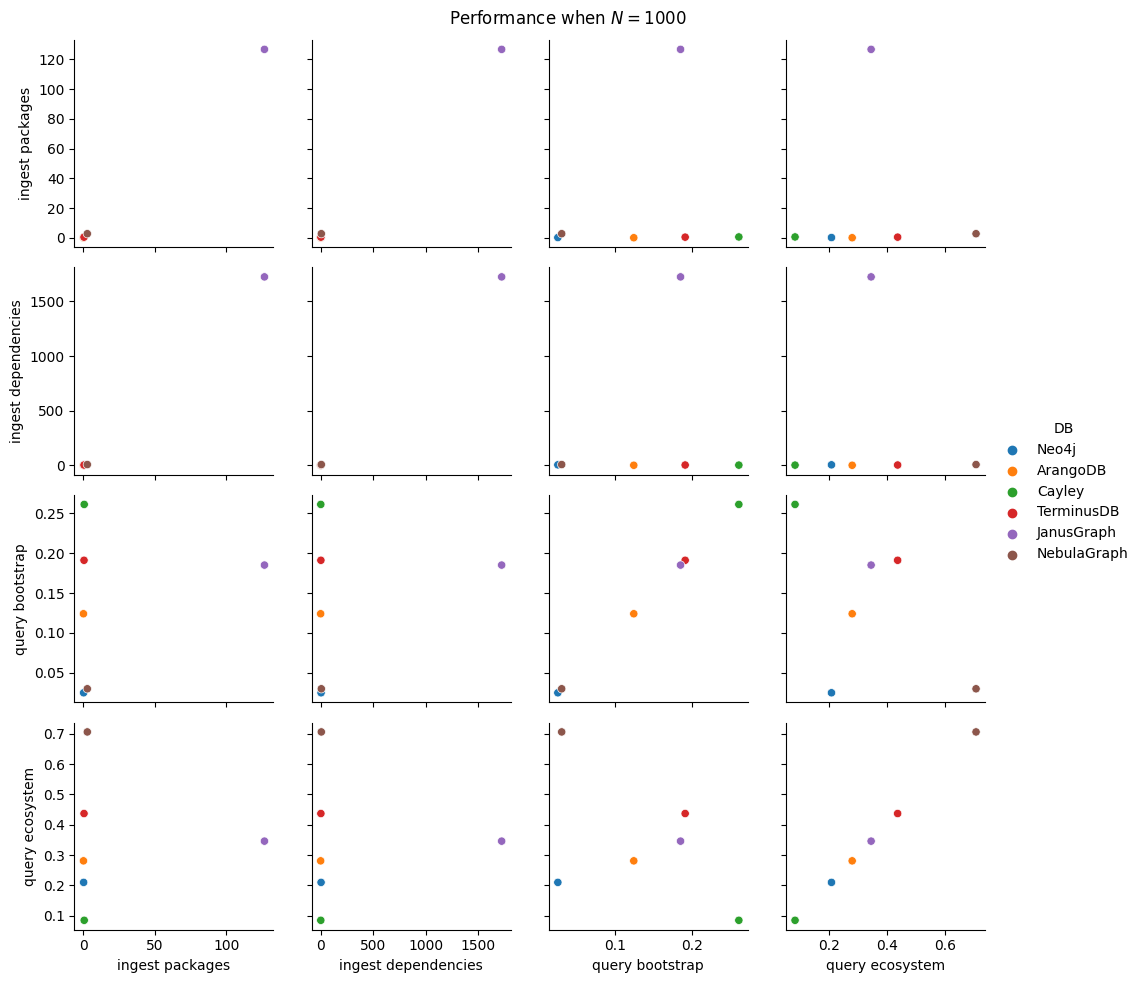

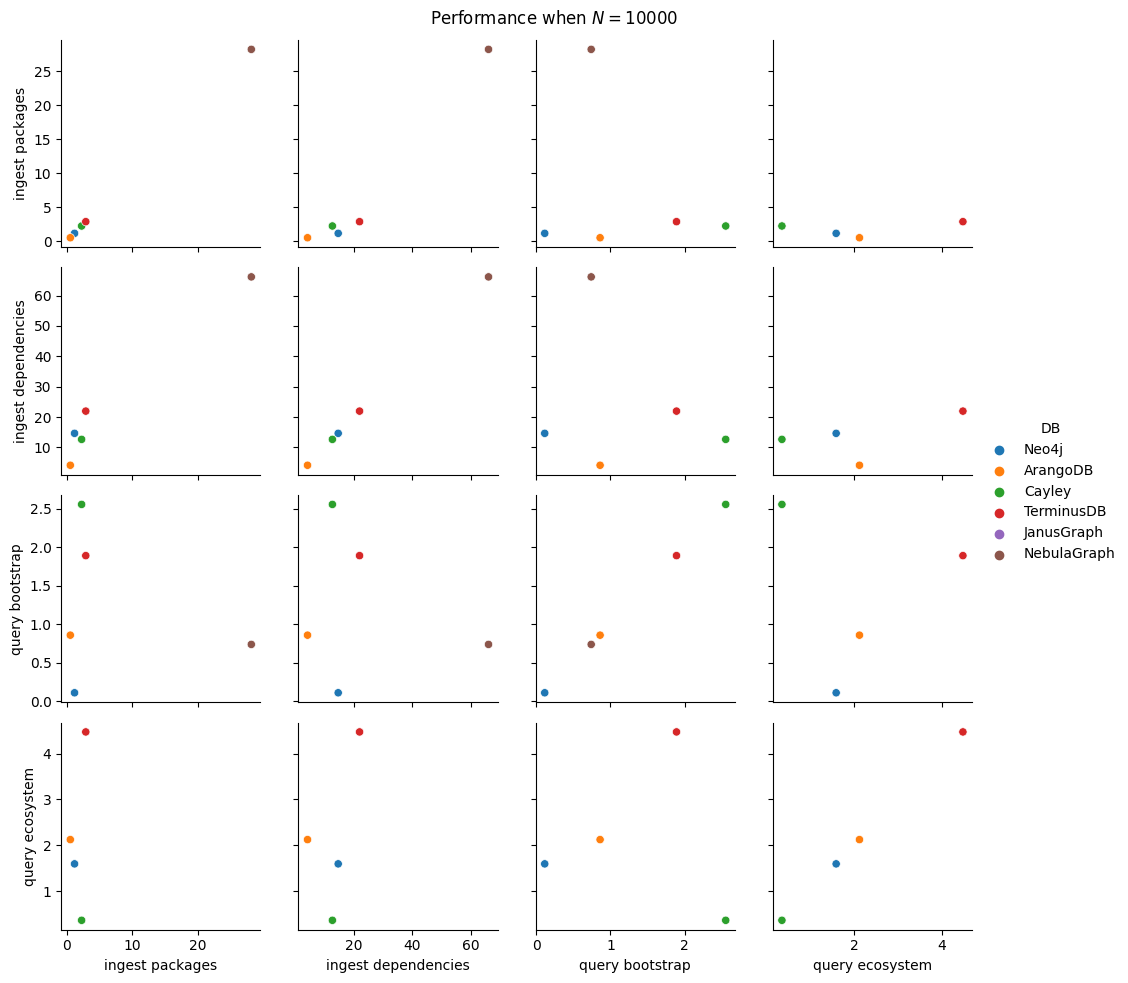

While developing the code for the benchmarks, I used \(N=10\) or \(N=16\), \(B=2\) or \(B=3\), and \(K=1\) up to \(K=4\). But these scenarios are small data, and we cannot extract much significant information from these.

For the experiments, I was planning to run ingestion using \(N=10, N=100, \ldots, N=1000000\) (1 million) packages. For each of these, I would test with \(B=2, B=3\), and \(B=10\). Finally, for \(K\) I would use 4 values: \(1, 2, 5\) and \(10\). Each of these would be run in all the possible modes (all combinations of passing the `-i`/`-s`/`-t` flags or not). Each experiment would be run 5 times to compute statistical averages.

But we’re not doing that :). We know from the previous article that we need to do some experimental design, since the number of combinations is really large. As described in the previous paragraph, there are 2880 experiments to run (6 values of \(N\), 3 of \(B\), 4 of \(K\), 8 flag combinations, and 5 ingestion experiments). Assuming an upper bound of half an hour for each, this would take a total of 2 months to complete, excluding all the time spent experimenting with different queries, learning the database backends, fixing errors, and using the computer for other things¹².
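The arithmetic behind these two numbers is short enough to spell out. This is just a sketch that enumerates the parameter space described above; the variable names are mine.

```python
from itertools import product

N_values = [10**e for e in range(1, 7)]  # 10, 100, ..., 1000000
B_values = [2, 3, 10]
K_values = [1, 2, 5, 10]
flag_modes = list(product([False, True], repeat=3))  # -i, -s, -t on or off
ingestion_experiments = 5

total = (len(N_values) * len(B_values) * len(K_values)
         * len(flag_modes) * ingestion_experiments)
days = total * 0.5 / 24  # half an hour per experiment, running non-stop

print(total)  # 2880
print(days)   # 60.0
```

This counts a single pass over the space; repeating every experiment 5 times would multiply the duration accordingly.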

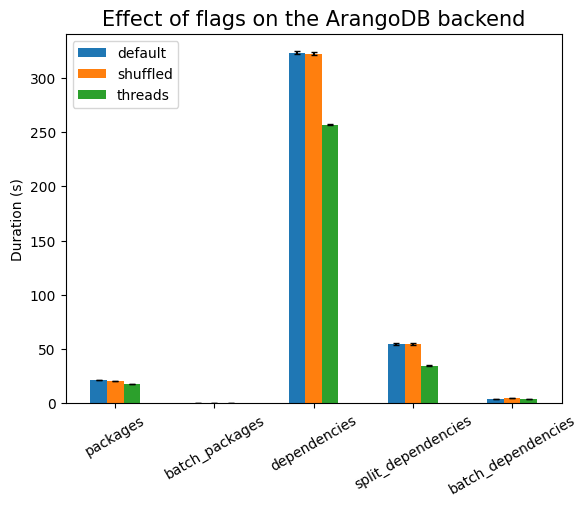

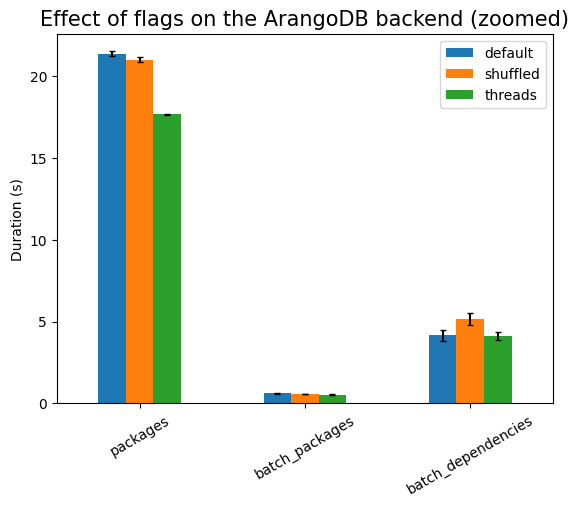

Instead, let’s use an alternate strategy. First we’ll try to determine the impact of each of the 3 flags: keeping \(N=10000\), \(B=5\), \(K=5\), we will run each ingestion experiment in 4 settings: no flag set, or exactly one of the flags being set. This results in 20 benchmark types.

Similarly, after finishing the ingestion with no flag (or the ingestion with `-i`), we will run the 2 queries, thus studying the impact of the index on the read queries. This brings an additional 4 benchmark types.

For future benchmark types, since we know the impact of the flags, we will always run with the combination of `-i`/`-t` that gives the best performance.

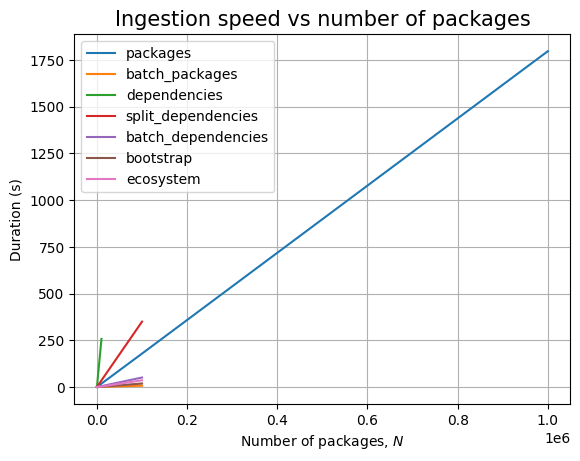

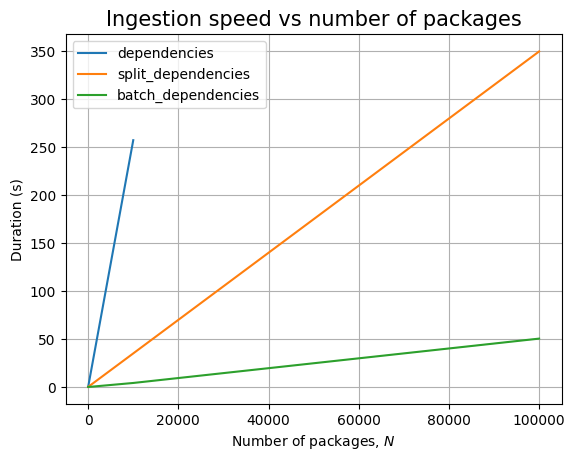

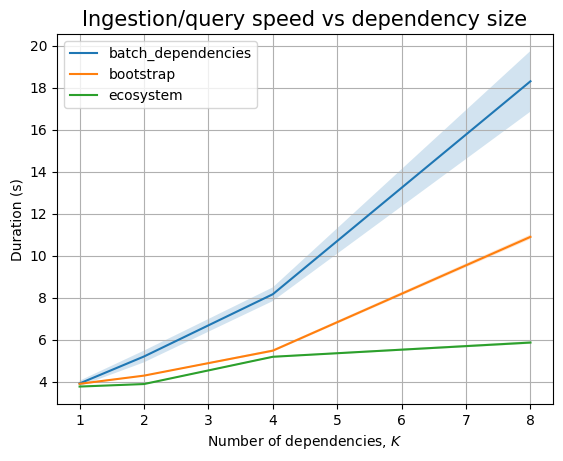

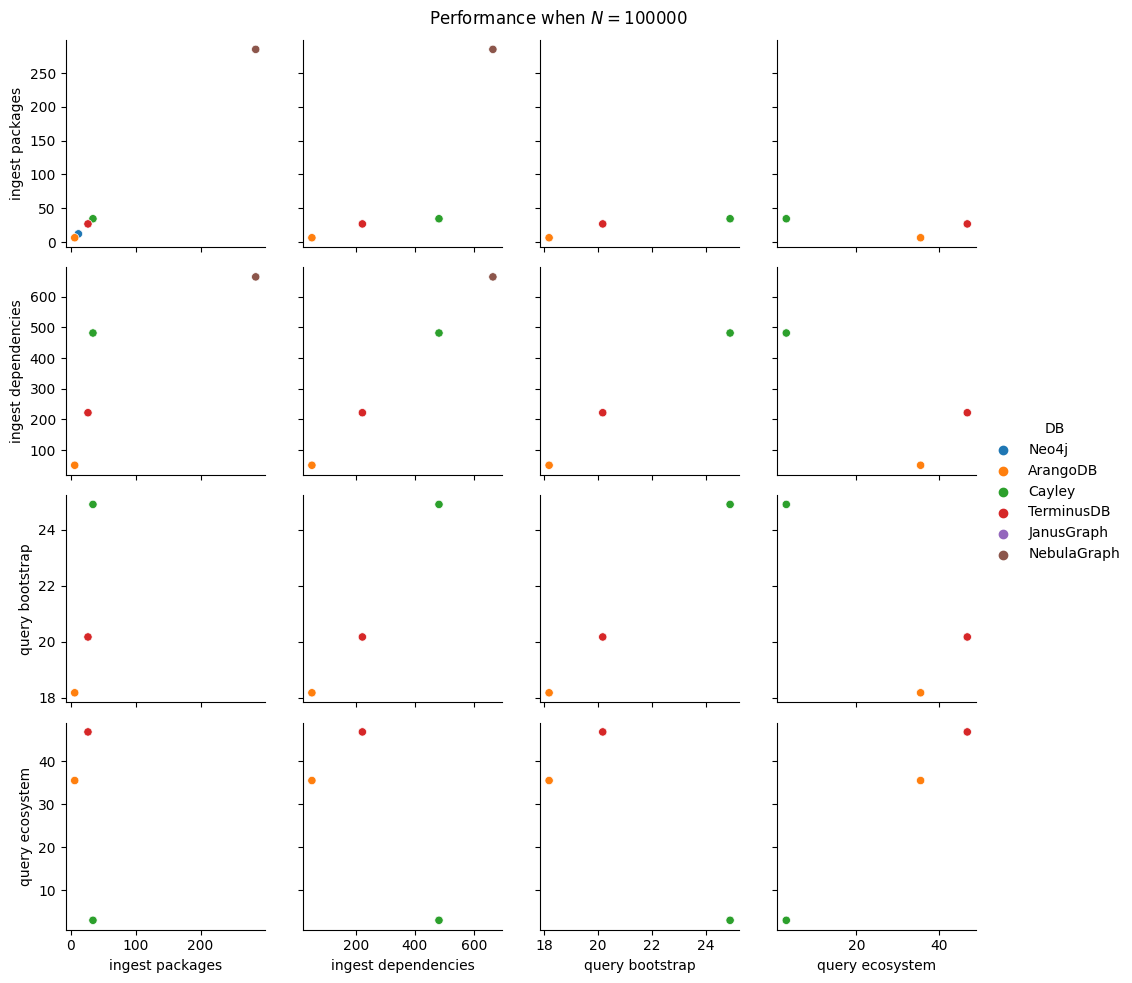

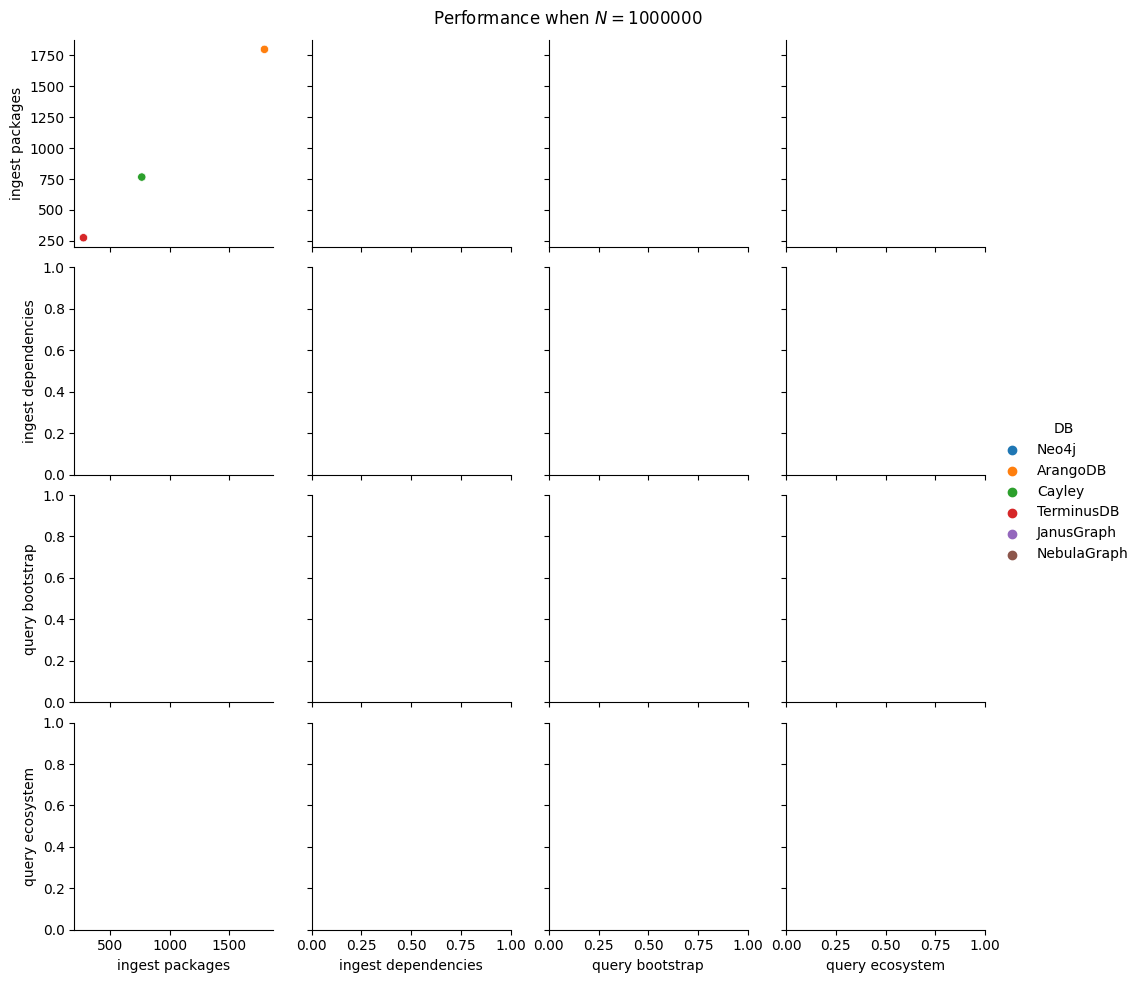

Next thing to study is the impact of increasing \(N\). We fix \(B=5\), \(K=5\) as before and increase \(N\) by consecutive powers of 10 (\(10, 100, 1000, 10000, 100000, 1000000\)). For each of these values we run all 5 ingestion experiments and the 2 query ones. This adds 35 new benchmark types (since the 10k values are already measured), but we will cut this process short as soon as one ingestion takes more than 30 minutes. For batch ingestion, it is also possible to run out of memory for the driver, so that will also result in a pruning of the search space.

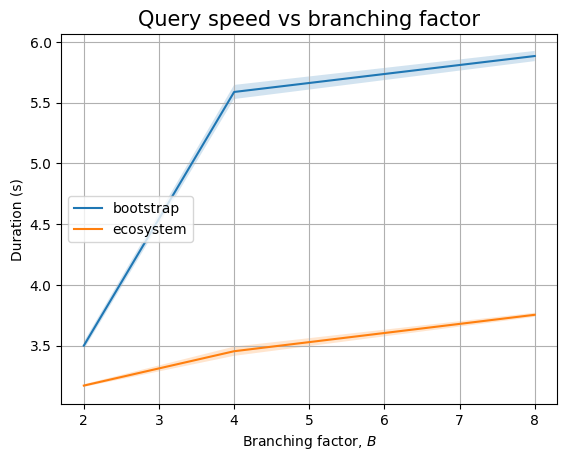

Then, we can study the impact of \(B\). We revert to \(N=10000\) and test the impact of \(B=2\), \(B=4\) and \(B=8\) for both ingestion and querying. This adds 21 more benchmark types.

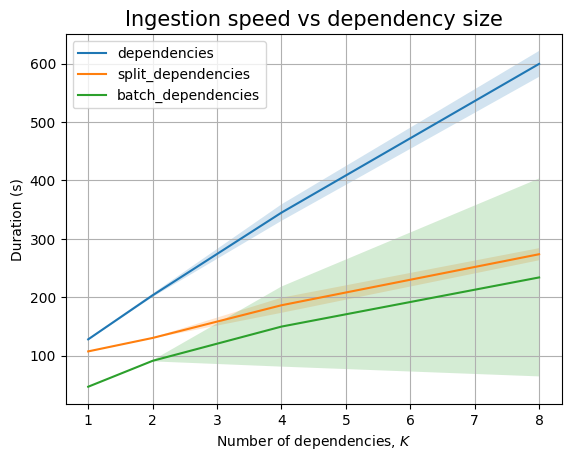

The value of \(K\) is only relevant when we have dependencies, so we no longer need to study the ingestion of packages by themselves. Let’s use \(N=10000\), \(B=5\) and study \(K=1\), \(K=2\), \(K=4\), \(K=8\). This gives 20 more benchmark types.

In total, we have 100 benchmark types, a significant reduction from the 2880 of the entire parameter space described at the start of this section (almost 29x fewer types; even with 5 runs each, the resulting 500 runs are about 6x fewer than 2880). We’ll run each of them 5 times, setting the battery on performance mode (as per the study on battery power mode impact on performance) and having no other user applications open.
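The tally of benchmark types from the last few paragraphs can be written down as a small sketch (the dictionary keys are just labels I chose for each step of the design):

```python
INGESTION = 5      # packages, batch_packages, and the 3 dependency variants
DEP_INGESTION = 3  # only the experiments that ingest dependencies
QUERIES = 2        # bootstrap and ecosystem

plan = {
    "flags, ingestion": INGESTION * 4,               # no flag, -i, -s, -t
    "index, queries": QUERIES * 2,                   # with and without -i
    "N sweep": (6 - 1) * (INGESTION + QUERIES),      # 10k already measured
    "B sweep": 3 * (INGESTION + QUERIES),            # B = 2, 4, 8
    "K sweep": 4 * (DEP_INGESTION + QUERIES),        # K = 1, 2, 4, 8
}

total_types = sum(plan.values())
total_runs = total_types * 5

print(plan)
print(total_types)  # 100
print(total_runs)   # 500
```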

Even with this design, we can collect up to 500 data points. So, instead of presenting the full data, I will only summarize it. This way, the blog post won’t be made artificially long by inclusion of tables with 500 rows.

Note: Between each run for an ingestion benchmark, I’ll drop the database, deleting all indexes that might be present. The database from the last round of ingestion with dependencies will be used for the query benchmarks.

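To compute the time taken by the database alone (that is, to eliminate the time the test harness spends generating the data), we can assume that all measurements are normally distributed and independent, and then use the fact that the difference of two independent normally distributed variables is also normally distributed: its mean is the difference of the means and its variance is the sum of the variances.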
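In code, the correction amounts to subtracting the means and combining the standard deviations in quadrature. This is a minimal sketch under the independence assumption (which the measurements may only approximately satisfy); `subtract_baseline` is a hypothetical helper, and the database measurement in the example is made up purely for illustration.

```python
import math


def subtract_baseline(total: tuple[float, float],
                      baseline: tuple[float, float]) -> tuple[float, float]:
    """Given (mean, stddev) pairs, return (mean, stddev) of total - baseline.

    Assumes both measurements are independent and normally distributed, so
    the variances add: sigma = sqrt(sigma_total**2 + sigma_baseline**2).
    """
    mean_t, std_t = total
    mean_b, std_b = baseline
    return mean_t - mean_b, math.hypot(std_t, std_b)


# Made-up database measurement against a made-up harness-only baseline.
mean, stddev = subtract_baseline((2.0, 0.3), (0.5, 0.4))
print(f"{mean:.3f} ±{stddev:.3f}")  # 1.500 ±0.500
```

Note that the uncertainty never shrinks: the corrected value is at least as noisy as the noisier of the two measurements.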

Finally, note that in some cases there might be multiple possible options to write a query, with different performance implications. If that is the case, I will include a discussion of each, but will include in the final data only the query that seems to be the most performant. I am not an expert in these databases, so I’m leaving open the option for some expert to write the queries to be more efficient, at which point I will update the article as needed.

That’s all, now we can begin the study.

No backend

We begin first with the baseline that does no database call. There’s nothing to do here: we already discussed the test harness code, so now we only have to present the data tables and graphs:

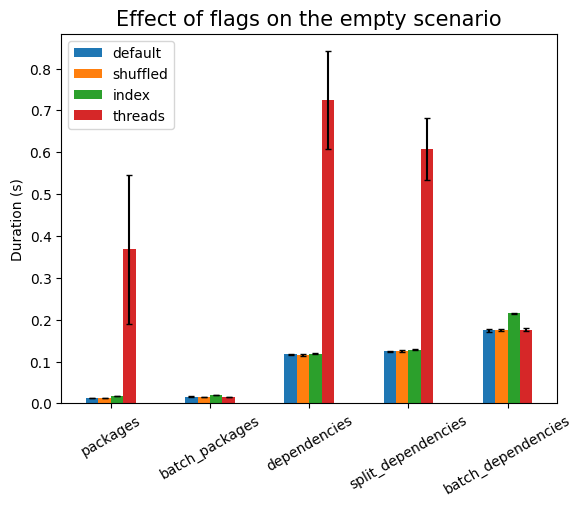

First experiment is testing the effect of the runtime flags:

| Experiment | type | time (s) | stddev (s) |
|---|---|---|---|
| packages | (none) | 0.014 | ±0.000 |
| | index | 0.014 | ±0.000 |
| | shuffled | 0.017 | ±0.000 |
| | threads | 0.368 | ±0.179 |
| batch_packages | (none) | 0.016 | ±0.001 |
| | index | 0.016 | ±0.000 |
| | shuffled | 0.020 | ±0.000 |
| | threads | 0.016 | ±0.000 |
| dependencies | (none) | 0.117 | ±0.001 |
| | index | 0.115 | ±0.002 |
| | shuffled | 0.119 | ±0.001 |
| | threads | 0.724 | ±0.117 |
| split_dependencies | (none) | 0.125 | ±0.001 |
| | index | 0.125 | ±0.002 |
| | shuffled | 0.128 | ±0.001 |
| | threads | 0.608 | ±0.073 |
| batch_dependencies | (none) | 0.175 | ±0.004 |
| | index | 0.176 | ±0.002 |
| | shuffled | 0.215 | ±0.002 |
| | threads | 0.176 | ±0.004 |

We don’t include the measurements for the time to execute the read queries, since those methods are just a pass statement in the empty implementation.

As expected, shuffling the data takes longer, but there should be no statistically significant difference between using indexes or not (since there are no indexes). Using threads is much more expensive here, due to the setup work needed, except in the cases of batch_*, where there is exactly one single database call (that is, only one active thread). The setup cost also explains the large noise in that measurement, compared to the other ones.

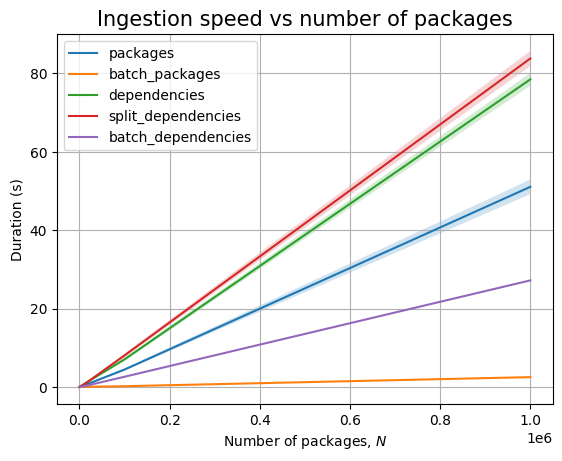
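To get a feeling for where the thread setup cost comes from, here is a minimal sketch that times a no-op call dispatched sequentially and through a thread pool. It assumes a `concurrent.futures.ThreadPoolExecutor`; the harness used for the measurements above may dispatch work differently, and the `no_op`, `run_sequential` and `run_threaded` names are made up for this illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def no_op(item: int) -> int:
    """Stand-in for a database call that does nothing."""
    return item


def run_sequential(items):
    """Call no_op on every item in a plain loop and time it."""
    start = time.perf_counter()
    results = [no_op(i) for i in items]
    return results, time.perf_counter() - start


def run_threaded(items, workers: int = 4):
    """Call no_op on every item through a thread pool and time it."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(no_op, items))
    return results, time.perf_counter() - start


items = list(range(10_000))
seq_results, seq_time = run_sequential(items)
thr_results, thr_time = run_threaded(items)
print(f"sequential: {seq_time:.4f}s, threaded: {thr_time:.4f}s")
```

Both variants do the same (empty) work, so any gap between the two timings is overhead from creating the pool and scheduling one task per item. The exact numbers depend on the machine, which is consistent with the large standard deviation seen in the threads rows.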

Next, we can see the effect of the number of packages, \(N\):

| Experiment | N | time (s) | stddev (s) |
|---|---|---|---|
| packages | 10 | 0.003 | ±0.000 |
| | 100 | 0.007 | ±0.000 |
| | 1000 | 0.025 | ±0.011 |
| | 10000 | 0.368 | ±0.179 |
| | 100000 | 4.432 | ±0.296 |
| | 1000000 | 51.061 | ±1.867 |
| batch_packages | 10 | 0.000 | ±0.000 |
| | 100 | 0.000 | ±0.000 |
| | 1000 | 0.002 | ±0.000 |
| | 10000 | 0.016 | ±0.000 |
| | 100000 | 0.183 | ±0.004 |
| | 1000000 | 2.500 | ±0.081 |
| dependencies | 10 | 0.004 | ±0.000 |
| | 100 | 0.009 | ±0.000 |
| | 1000 | 0.038 | ±0.009 |
| | 10000 | 0.724 | ±0.117 |
| | 100000 | 7.033 | ±0.416 |
| | 1000000 | 78.492 | ±1.603 |
| split_dependencies | 10 | 0.004 | ±0.000 |
| | 100 | 0.010 | ±0.000 |
| | 1000 | 0.056 | ±0.018 |
| | 10000 | 0.608 | ±0.073 |
| | 100000 | 8.040 | ±0.481 |
| | 1000000 | 83.822 | ±1.920 |
| batch_dependencies | 10 | 0.000 | ±0.000 |
| | 100 | 0.002 | ±0.000 |
| | 1000 | 0.016 | ±0.000 |
| | 10000 | 0.176 | ±0.004 |
| | 100000 | 2.597 | ±0.008 |
| | 1000000 | 27.188 | ±0.160 |

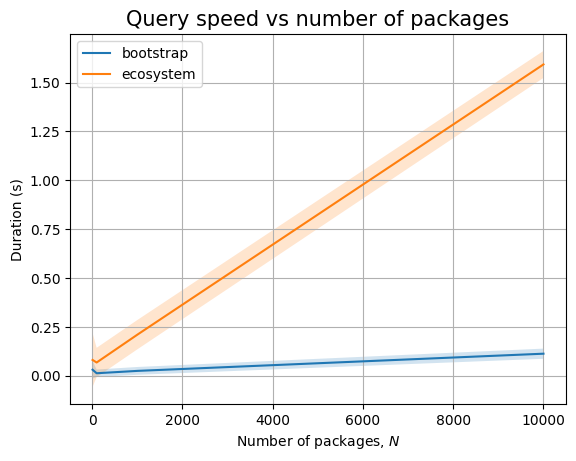

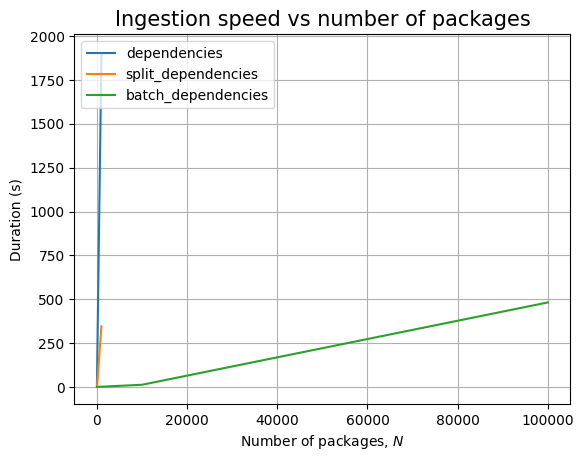

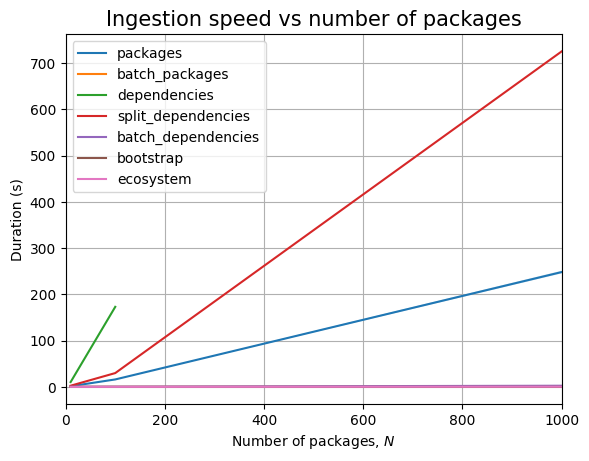

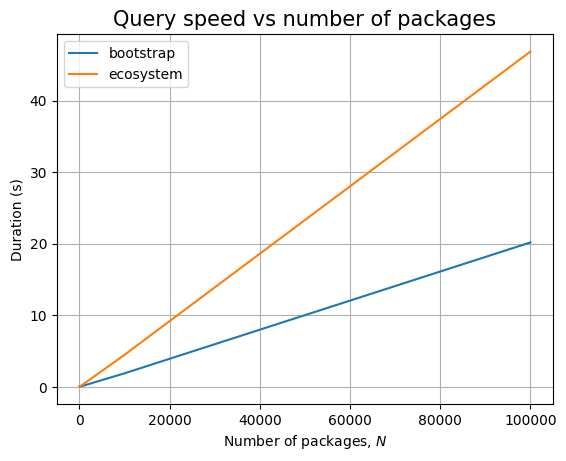

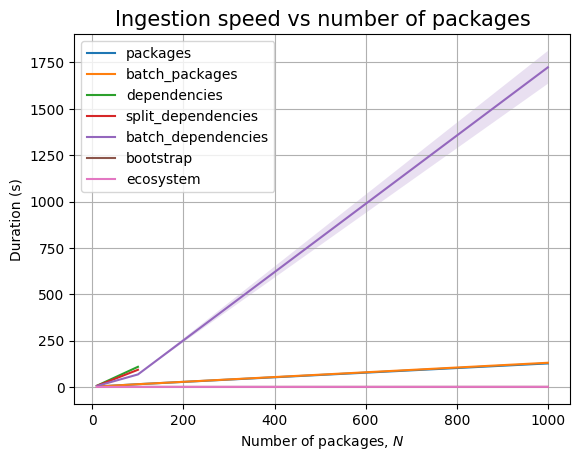

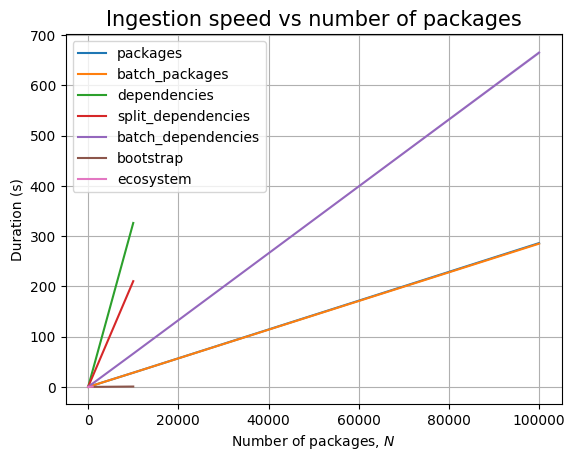

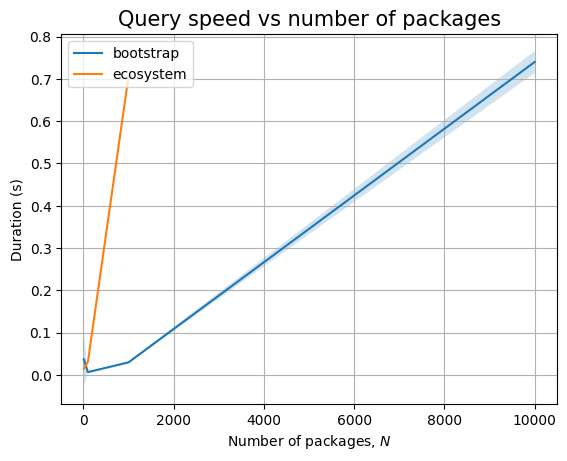

Just like before, the time for query execution is 0, since we don’t have a database and we don’t measure the time taken to validate the answers. The timing for the ingestion queries is approximately linear in \(N\), as can be easily seen when we plot the data:

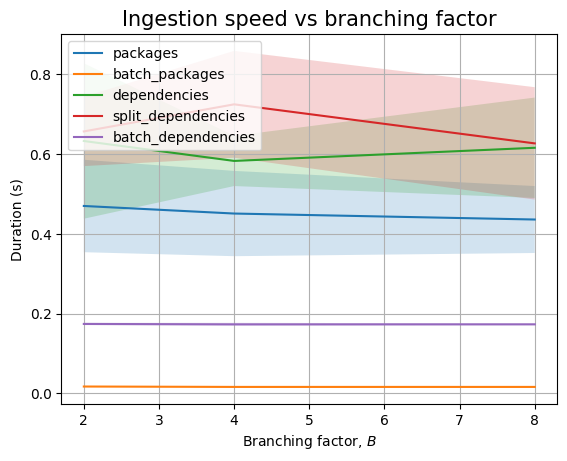
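The linearity can also be checked numerically, without a plot. The sketch below fits a straight line to the logarithms of the batch_dependencies measurements from the table for \(N\); a slope close to 1 means the time grows linearly with \(N\). The `log_log_slope` helper is written just for this illustration.

```python
import math

# (N, mean time in seconds) for batch_dependencies, copied from the N table.
# N = 10 is left out because its time rounds to 0.000 and has no logarithm.
measurements = [
    (100, 0.002),
    (1_000, 0.016),
    (10_000, 0.176),
    (100_000, 2.597),
    (1_000_000, 27.188),
]


def log_log_slope(points):
    """Least-squares slope of log10(time) against log10(N)."""
    xs = [math.log10(n) for n, _ in points]
    ys = [math.log10(t) for _, t in points]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    covariance = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    variance = sum((x - x_mean) ** 2 for x in xs)
    return covariance / variance


slope = log_log_slope(measurements)
print(f"slope = {slope:.2f}")
```

A slope of exactly 1 would be perfectly linear scaling; a value slightly above 1 is compatible with "approximately linear" given how coarse the smallest measurements are.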

Next, we can look at the effect of \(B\):

| Experiment | B | time (s) | stddev (s) |
|---|---|---|---|
| packages | 2 | 0.470 | ±0.116 |
| | 4 | 0.451 | ±0.107 |
| | 8 | 0.436 | ±0.084 |
| batch_packages | 2 | 0.017 | ±0.000 |
| | 4 | 0.016 | ±0.000 |
| | 8 | 0.016 | ±0.000 |
| dependencies | 2 | 0.633 | ±0.195 |
| | 4 | 0.583 | ±0.063 |
| | 8 | 0.616 | ±0.126 |
| split_dependencies | 2 | 0.657 | ±0.087 |
| | 4 | 0.725 | ±0.134 |
| | 8 | 0.627 | ±0.141 |
| batch_dependencies | 2 | 0.174 | ±0.003 |
| | 4 | 0.173 | ±0.004 |
| | 8 | 0.173 | ±0.002 |

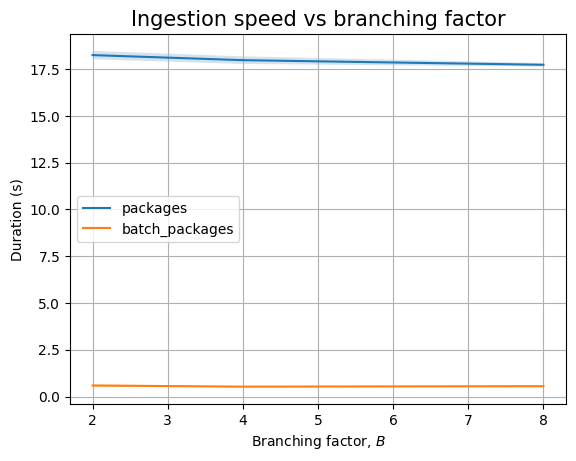

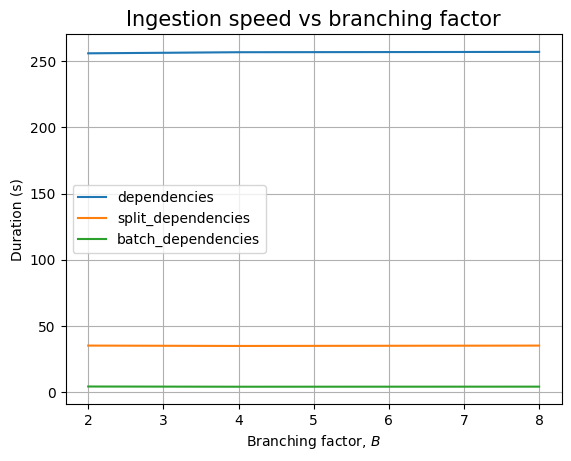

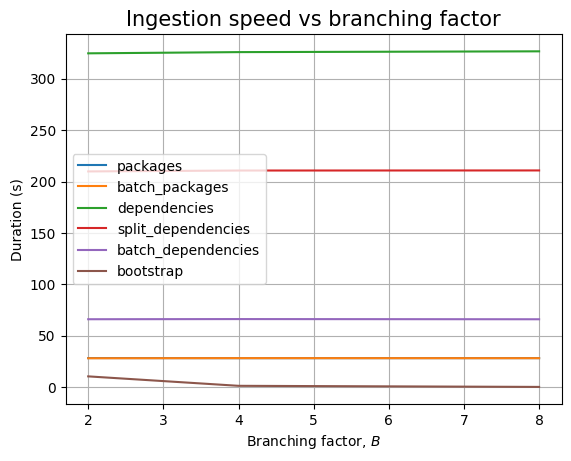

The branching factor \(B\) has no effect in the empty case, as the only things that change are the values for the ecosystem, namespace and name fields. We see the same result when we plot the data:

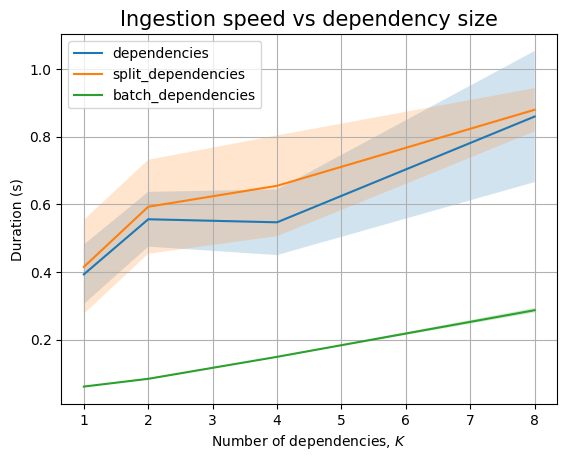

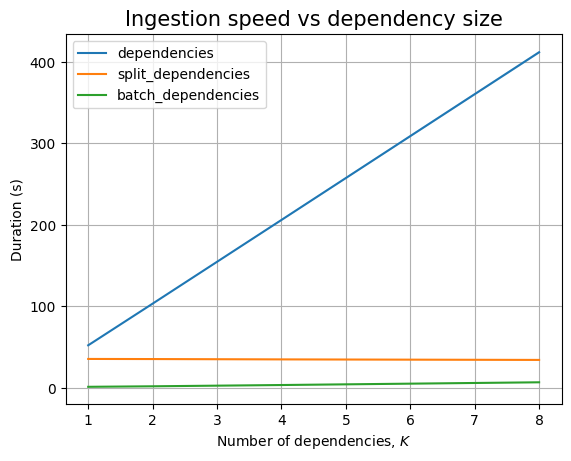

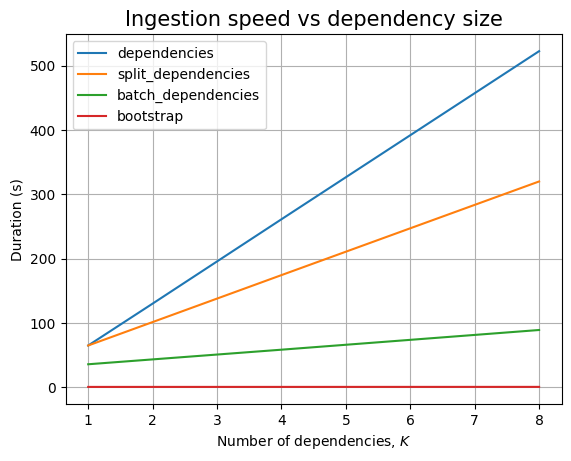

However, we won’t get a similar pattern when looking at the effect of \(K\):

| Experiment | K | time (s) | stddev (s) |
|---|---|---|---|
| dependencies | 1 | 0.393 | ±0.088 |
| | 2 | 0.556 | ±0.081 |
| | 4 | 0.547 | ±0.097 |
| | 8 | 0.860 | ±0.194 |
| split_dependencies | 1 | 0.415 | ±0.139 |
| | 2 | 0.593 | ±0.139 |
| | 4 | 0.655 | ±0.149 |
| | 8 | 0.880 | ±0.064 |
| batch_dependencies | 1 | 0.061 | ±0.001 |
| | 2 | 0.084 | ±0.002 |
| | 4 | 0.149 | ±0.001 |
| | 8 | 0.287 | ±0.007 |

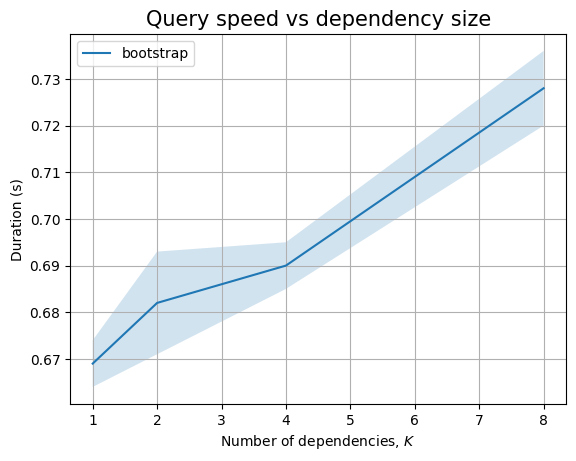

Here, the dependency should be linear. And we see exactly that, within the limits of the noise, when we plot it.

We don’t need to measure anything for the read-only queries, but we will do so in the next sections, when we actually look at database backends.

Neo4j

For Neo4j, I am using the docker container, started via the following command:

    docker run -p 7474:7474 -p 7687:7687 --name neo4j \
      -e NEO4J_AUTH=neo4j/s3cr3tp455 neo4j:latest

Then, for the Python driver, given that I am on Nix, I only need to bring up a shell that contains the corresponding packages:

    nix-shell -p python311Packages.neo4j

I will use this pattern for the other database backends. This ensures that the Python environment under which the experiments are run is always clean, containing only the needed packages, without the need to use venv or similar sandboxing solutions.

The first thing to do here is to subclass the Experiment class to create the test suite for Neo4j:

    class Neo4j(Experiment):
        def __init__(self, N: int, B: int, K: int, shuffle: bool = True, threaded: bool = True):
            super().__init__(N, B, K, shuffle, threaded)
            import neo4j
            self._driver = neo4j.GraphDatabase.driver(
                "bolt://localhost:7687",
                auth=("neo4j", "s3cr3tp455"))

        def __del__(self):
            self._driver.close()

        #...

This takes care of creating a database connection when an instance of the class is created and removing it when the instance goes out of scope and Python decides to garbage collect it (which is not deterministic).
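If deterministic cleanup were needed, one alternative to relying on `__del__` would be a context manager. The sketch below is only an illustration of that pattern and is not what the experiments use: `FakeDriver` and `ManagedBackend` are hypothetical names, with the fake driver standing in for the real one so that the example runs without a database.

```python
class FakeDriver:
    """Hypothetical stand-in for the real driver, so the sketch runs offline."""

    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True


class ManagedBackend:
    """Owns a driver and closes it when the `with` block exits."""

    def __init__(self, driver):
        self._driver = driver

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self._driver.close()
        return False  # do not swallow exceptions raised inside the block


driver = FakeDriver()
with ManagedBackend(driver) as backend:
    assert not driver.closed  # still open while the experiment runs
print(driver.closed)  # True: closed as soon as the block exits
```

For a benchmark that creates one instance per experiment, the `__del__` approach is good enough; the context manager only matters when the connection must be released at a known point.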

In the setup function we need to create indexes if the user asks for them, but there is also a correctness issue that we have to consider. In Neo4j, we can create new nodes and relationships using CREATE, and we can pattern match on what is already present using [OPTIONAL] MATCH, but sometimes we want to create a new entity in a pattern only if it doesn’t already exist. For example, in our scenario, when ingesting a new package in the same ecosystem as a previous package, we would prefer to reuse the existing ecosystem node. Neo4j makes it possible to reuse existing graph patterns using MERGE: the node/relationship will be created only if it doesn’t already exist. This has an implication, however, when ingesting packages in multithreaded environments: all of the threads will start ingesting a package when there is nothing present in the database, so instead of having one single root node for the trie we could get as many as there are cores available. Fortunately, due to the way MERGE works, having the root of the trie present in the database when the database is brought up solves this issue. This is because Neo4j takes locks on all bound existing entities in a pattern if there are entities that need to be created to complete the pattern. Thus, this is how we should set up and tear down the database:

    class Neo4j(Experiment):
        #...
        def setup(self, indexes: bool = True):
            def setup_root_node(tx):
                tx.run("MERGE (root:Pkg)")

            with self._driver.session(database='neo4j') as session:
                session.execute_write(setup_root_node)
                if indexes:
                    session.run("CREATE INDEX I1 FOR (n:Ecosystem) on (n.ecosystem)")
                    session.run("CREATE INDEX I2 FOR (n:Namespace) on (n.namespace)")
                    session.run("CREATE INDEX I3 FOR (n:Name) on (n.name)")
                    session.run("CREATE INDEX I4 FOR (n:Version) on (n.version)")
                    session.run("CALL db.awaitIndexes")

        def _do_clean(self):
            with self._driver.session(database='neo4j') as session:
                session.run("MATCH (n) DETACH DELETE n")
                session.run("DROP INDEX I1 IF EXISTS")
                session.run("DROP INDEX I2 IF EXISTS")
                session.run("DROP INDEX I3 IF EXISTS")
                session.run("DROP INDEX I4 IF EXISTS")
                session.run("CALL db.awaitIndexes")

        #...

We create an index for each type of node that has a scalar property which we might use in later (sub)queries. We don’t have an index for Dependency nodes because these don’t have scalar data in this experiment (but do in GUAC, although even there these are unlikely to be part of a query predicate).

Note that we explicitly pass in the default database name to session(), as recommended in the performance tips page. Over a large number of queries, this should result in a small performance improvement.
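The race that the pre-created root node avoids can be illustrated with a toy model in plain Python. This is only a sketch of an unlocked match-then-create sequence, not a description of how Neo4j is implemented: the `ToyGraph` class and `ingest_concurrently` helper are invented for the example, and a barrier is used to force the worst-case interleaving so that the outcome is deterministic.

```python
import threading


class ToyGraph:
    """Toy model of an unlocked match-then-create; not how Neo4j is implemented."""

    def __init__(self, nodes=()):
        self.nodes = list(nodes)

    def merge(self, label: str, barrier: threading.Barrier) -> None:
        found = label in self.nodes   # "match" phase
        barrier.wait()                # every thread matches before any thread creates
        if not found:
            self.nodes.append(label)  # "create" phase


def ingest_concurrently(graph: ToyGraph, threads: int = 4) -> int:
    """Run one merge per thread and return the number of root nodes afterwards."""
    barrier = threading.Barrier(threads)
    workers = [
        threading.Thread(target=graph.merge, args=("Pkg", barrier))
        for _ in range(threads)
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return graph.nodes.count("Pkg")


print(ingest_concurrently(ToyGraph()))         # 4: one root per thread
print(ingest_concurrently(ToyGraph(["Pkg"])))  # 1: the pre-created root is reused
```

With an empty graph every thread misses in the match phase and creates its own root; when the root exists before the threads start, every match succeeds and nothing new is created.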

Ingesting packages is simple:

    class Neo4j(Experiment):
        #...
        def _do_ingest_package(self, package: Package):
            def write(tx):
                query = """
                    MATCH (root:Pkg)
                    MERGE (root) -[:Ecosystem]-> (ecosystem:Ecosystem {ecosystem: $ecosystem})
                    MERGE (ecosystem) -[:Namespace]-> (ns:Namespace {namespace: $namespace})
                    MERGE (ns) -[:Name]-> (name:Name {name: $name})
                    MERGE (name) -[:Version]-> (version:Version {version: $version})
                """
                result = tx.run(query, ecosystem=package.ecosystem,
                                namespace=package.namespace, name=package.name,
                                version=package.version)
                return result.consume()

            with self._driver.session(database='neo4j') as session:
                session.execute_write(write)

        #...

We start the query by matching on the singleton Pkg node. Then we use MERGE patterns to extend from a node to a child in the graph, creating the child as needed. We don’t use a single MERGE line that connects everything at once because then Neo4j would create the entire chain (e.g., create a new set of ecosystem, namespace, name and version nodes even if only the new version is missing). This is also the reason why we don’t start with MERGE (root:Pkg) -[:Ecosystem]-> ...: if we were to, then the multithreaded runtime would create multiple root nodes again. To summarize, we want each MERGE line to create at most one new bound variable (for the new trie node if it didn’t already exist).

Note that we MATCH on the root node instead of using MERGE as the first word in the query. If we use MERGE, this results in one additional operation during the query execution, as can be seen via profiling.
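The difference between merging one edge at a time and merging the whole chain at once can be mimicked with a toy trie in plain Python. This is only a model of the all-or-nothing behaviour described above, using invented helper names and made-up package values; it does not talk to Neo4j.

```python
def merge_stepwise(trie: dict, path: tuple) -> int:
    """Create only the missing nodes along the path; return how many were created."""
    created = 0
    node = trie
    for key in path:
        if key not in node:
            node[key] = {}
            created += 1
        node = node[key]
    return created


def merge_whole_chain(chains: list, path: tuple) -> int:
    """All-or-nothing: if the full path is missing, create every node in it."""
    if path in chains:
        return 0
    chains.append(path)
    return len(path)


# Two versions of the same (made-up) package: only the version differs.
packages = [
    ("pypi", "example-ns", "example-pkg", "1.0.0"),
    ("pypi", "example-ns", "example-pkg", "2.0.0"),
]

trie, chains = {}, []
stepwise_nodes = sum(merge_stepwise(trie, p) for p in packages)
chain_nodes = sum(merge_whole_chain(chains, p) for p in packages)
print(stepwise_nodes)  # 5: ecosystem, namespace and name are shared
print(chain_nodes)     # 8: the second package duplicates the whole chain
```

The stepwise variant is the analogue of one MERGE line per trie level: the second package only adds its version node, while the whole-chain variant duplicates the three shared ancestors.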

I’ll discuss profiling in detail a little bit later in this section, but first we want to look at the batch ingestion process code:

    class Neo4j(Experiment):
        #...
        def _do_ingest_batch_packages(self, packages: Sequence[Package]):
            def write(tx):
                query = """
                    UNWIND $packages as pkg
                    MATCH (root:Pkg)
                    MERGE (root) -[:Ecosystem]-> (ecosystem:Ecosystem {ecosystem: pkg.ecosystem})
                    MERGE (ecosystem) -[:Namespace]-> (ns:Namespace {namespace: pkg.namespace})
                    MERGE (ns) -[:Name]-> (name:Name {name: pkg.name})
                    MERGE (name) -[:Version]-> (version:Version {version: pkg.version})
                """
                result = tx.run(query, packages=[asdict(p) for p in packages])
                return result.consume()

            with self._driver.session(database='neo4j') as session:
                session.execute_write(write)

        #...

Not much has changed: we just need an additional UNWIND line at the start of the query to run a loop over the entire batch and then we need to convert the input from dataclasses to dictionaries.

Next, let’s look at ingesting dependencies too:

    class Neo4j(Experiment):
        #...
        def _do_ingest_dependency(self, dependency: Dependency):
            def write(tx):
                query = """
                    MATCH (root:Pkg)
                    MATCH (root) -[:Ecosystem]-> (:Ecosystem {ecosystem: $ecosystem1})
                                 -[:Namespace]-> (:Namespace {namespace: $namespace1})
                                 -[:Name]-> (:Name {name: $name1})
                                 -[:Version]-> (v1:Version {version: $version1})
                    MATCH (root) -[:Ecosystem]-> (:Ecosystem {ecosystem: $ecosystem2})
                                 -[:Namespace]-> (:Namespace {namespace: $namespace2})
                                 -[:Name]-> (:Name {name: $name2})
                                 -[:Version]-> (v2:Version {version: $version2})
                    MERGE (v1) -[:HasDependency]-> (:Dependency) -[:Dependency]-> (v2)
                """
                result = tx.run(query, ecosystem1=dependency.parent.ecosystem,
                                namespace1=dependency.parent.namespace,
                                name1=dependency.parent.name,
                                version1=dependency.parent.version,
                                ecosystem2=dependency.target.ecosystem,
                                namespace2=dependency.target.namespace,
                                name2=dependency.target.name,
                                version2=dependency.target.version)
                return result.consume()

            with self._driver.session(database='neo4j') as session:
                session.execute_write(write)

        #...

In general, we have to match the 2 pURLs for the two packages. So, even though in our case the query could just match the version nodes (since the version contains the full \(x\) for the package), we will write the query assuming the general case[13]. We use MATCH statements since we assume that the package nodes involved in this dependency have been ingested a priori. In this case, it makes no sense to split the match patterns across multiple statements, each containing an edge, like we did with the MERGE lines when ingesting packages. In fact, profiling these scenarios shows that either query gets compiled to the same execution tree.

Instead of a MERGE for the dependency node, we could have used CREATE, since our experiments are only ingesting each dependency once, after which the dataset is dropped before a new experiment can run. But this won’t translate to real-world scenarios, where multiple documents might record the same dependency link. Thus we need to make sure we don’t duplicate the dependency nodes.

Before moving forward, let’s also discuss profiling. It is very simple: all we have to do is add PROFILE in front of the first word of the query and then use the result summary object:

    def write(tx):
        query = """
            PROFILE ...(rest of query)...
        """
        result = tx.run(query, ...)
        return result.consume()

    with self._driver.session(database='neo4j') as session:
        summary = session.execute_write(write)
        print(summary.profile['args']['string-representation'])

Using profiling, we can see the impact indexes in Neo4j have on the performance of ingesting dependencies. Each summary prints a table with a textual representation of the execution tree for the query and some statistics about the execution. The UI from the web page displays this in a nicer format, but then we’d have to run the query manually. Instead, we’d like to see how query profiling data changes as the database gets populated, if any change occurs.

Turns out, there is actually a change. When not using indexes, we have the following profile, at any point during ingesting dependencies (due to page width constraints, I’m using an inline style to reduce font size and I’m slightly reformatting the output):

+-------------------------------+----+--------------------------------------------+------+------+------+--------+

| Operator | Id | Details | Est. | Rows | DB | Memory |

| | | | Rows | | Hits | Bytes |

+-------------------------------+----+--------------------------------------------+------+------+------+--------+

| +ProduceResults | 0 | | 0 | 0 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +EmptyResult | 1 | | 0 | 0 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Apply | 2 | | 0 | 1 | 0 | |

| |\ +----+--------------------------------------------+------+------+------+--------+

| | +LockingMerge | 3 | CREATE (anon_15:Dependency), | 0 | 1 | 5 | |

| | | | | (v1)-[anon_14:HasDependency]->(anon_15), | | | | |

| | | | | (anon_15)-[anon_16:Dependency]->(v2), | | | | |

| | | | | LOCK(v1, v2) | | | | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Expand(Into) | 4 | (v1)-[anon_14:HasDependency]->(anon_15) | 0 | 0 | 0 | 592 |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Filter | 5 | anon_15:Dependency | 0 | 0 | 0 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Expand(All) | 6 | (v2)<-[anon_16:Dependency]-(anon_15) | 0 | 0 | 4 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Argument | 7 | v2, v1 | 0 | 2 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 8 | v2.version = $version2 AND v2:Version | 0 | 1 | 2 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 9 | (anon_12)-[anon_13:Version]->(v2) | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 10 | v1.version = $version1 AND v1:Version | 0 | 1 | 2 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 11 | (anon_5)-[anon_6:Version]->(v1) | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 12 | anon_12.name = $name2 AND anon_12:Name | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 13 | (anon_10)-[anon_11:Name]->(anon_12) | 0 | 2 | 4 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 14 | anon_5.name = $name1 AND anon_5:Name | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 15 | (anon_3)-[anon_4:Name]->(anon_5) | 0 | 2 | 4 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 16 | anon_10.namespace = $namespace2 AND | 0 | 1 | 2 | |

| | | | anon_10:Namespace | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 17 | (anon_8)-[anon_9:Namespace]->(anon_10) | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 18 | anon_8.ecosystem = $ecosystem2 AND | 0 | 1 | 2 | |

| | | | anon_8:Ecosystem | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 19 | (root)-[anon_7:Ecosystem]->(anon_8) | 0 | 1 | 2 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 20 | anon_3.namespace = $namespace1 AND | 0 | 1 | 2 | |

| | | | anon_3:Namespace | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 21 | (anon_1)-[anon_2:Namespace]->(anon_3) | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 22 | anon_1.ecosystem = $ecosystem1 AND | 0 | 1 | 3 | |

| | | | root:Pkg AND anon_1:Ecosystem | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +DirectedRelationshipTypeScan | 23 | (root)-[anon_0:Ecosystem]->(anon_1) | 3 | 1 | 2 | |

+-------------------------------+----+--------------------------------------------+------+------+------+--------+

To understand this table, we can find details about each operator on the operator summary index that Neo4j offers. There isn’t much to see here. Starting from the root node (operation 23, read the table from the bottom up), Neo4j matches each pattern in order, with very few rows to return to the operator above and very few database hits. This goes upwards until operator 8, at which point Neo4j has the 2 variables v1 and v2 for the version nodes that need to be linked into the dependency structure. Now, it needs to evaluate another branch of the evaluation tree, where operators 6 to 4 look for an existing dependency structure between the two version nodes (operator 4 uses nearly 600 bytes of memory for this). These operators return 0 rows, so the structure is created by operator 3, and we don’t return any result back.
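To compare profiles across many ingestions without reading each table, the database hits can be totalled programmatically. The sketch below walks a nested dictionary shaped like the profile returned in the result summary. The `dbHits` and `children` key names are my assumption about that structure and should be checked against the actual driver output; the `total_db_hits` helper and the sample dictionary are written by hand for this illustration.

```python
def total_db_hits(plan: dict) -> int:
    """Sum the database hits of an operator and all of its children.

    Assumes each operator is a dict with an optional "dbHits" count and an
    optional "children" list; missing keys count as zero / no children.
    """
    own_hits = plan.get("dbHits", 0)
    return own_hits + sum(total_db_hits(child) for child in plan.get("children", []))


# Hand-written stand-in for a profile; a real one would come from summary.profile.
example_profile = {
    "operatorType": "ProduceResults",
    "dbHits": 0,
    "children": [
        {
            "operatorType": "Filter",
            "dbHits": 2,
            "children": [
                {"operatorType": "Expand(All)", "dbHits": 3, "children": []},
            ],
        },
    ],
}

print(total_db_hits(example_profile))  # 5
```

Logging this single number after every ingestion would make it easy to spot the point at which the query plan changes.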

When using indexes, the first few ingestions in the database have the following profile (with similar modifications as above):

+------------------------------+----+--------------------------------------------+------+------+------+--------+

| Operator | Id | Details | Est. | Rows | DB | Memory |

| | | | Rows | | Hits | Bytes |

+------------------------------+----+--------------------------------------------+------+------+------+--------+

| +ProduceResults | 0 | | 0 | 0 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +EmptyResult | 1 | | 0 | 0 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Apply | 2 | | 0 | 1 | 0 | |

| |\ +----+--------------------------------------------+------+------+------+--------+

| | +LockingMerge | 3 | CREATE (anon_15:Dependency), | 0 | 1 | 5 | |

| | | | | (v1)-[anon_14:HasDependency]->(anon_15), | | | | |

| | | | | (anon_15)-[anon_16:Dependency]->(v2), | | | | |

| | | | | LOCK(v1, v2) | | | | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Expand(Into) | 4 | (v1)-[anon_14:HasDependency]->(anon_15) | 0 | 0 | 0 | 592 |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Filter | 5 | anon_15:Dependency | 0 | 0 | 0 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Expand(All) | 6 | (v2)<-[anon_16:Dependency]-(anon_15) | 0 | 0 | 4 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Argument | 7 | v2, v1 | 0 | 2 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +NodeHashJoin | 8 | anon_12 | 0 | 1 | 0 | 552 |

| |\ +----+--------------------------------------------+------+------+------+--------+

| | +NodeHashJoin | 9 | anon_5 | 0 | 1 | 0 | 488 |

| | |\ +----+--------------------------------------------+------+------+------+--------+

| | | +NodeHashJoin | 10 | anon_10 | 0 | 1 | 0 | 624 |

| | | |\ +----+--------------------------------------------+------+------+------+--------+

| | | | +NodeHashJoin | 11 | anon_3 | 0 | 1 | 0 | 552 |

| | | | |\ +----+--------------------------------------------+------+------+------+--------+

| | | | | +NodeHashJoin | 12 | anon_8 | 0 | 1 | 0 | 624 |

| | | | | |\ +----+--------------------------------------------+------+------+------+--------+

| | | | | | +NodeHashJoin | 13 | root | 0 | 1 | 0 | 568 |

| | | | | | |\ +----+--------------------------------------------+------+------+------+--------+

| | | | | | | +NodeHashJoin | 14 | anon_1 | 0 | 1 | 0 | 488 |

| | | | | | | |\ +----+--------------------------------------------+------+------+------+--------+

| | | | | | | | +Filter | 15 | cache[anon_3.namespace] = $namespace1 AND | 0 | 1 | 2 | |

| | | | | | | | | | | anon_3:Namespace | | | | |

| | | | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | | | +Expand(All) | 16 | (anon_1)-[anon_2:Namespace]->(anon_3) | 0 | 1 | 3 | |

| | | | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | | | +NodeIndexSeek | 17 | RANGE INDEX anon_1:Ecosystem(ecosystem) | 0 | 1 | 2 | |

| | | | | | | | | | WHERE ecosystem = $ecosystem1 | | | | |

| | | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | | +Filter | 18 | root:Pkg | 0 | 1 | 1 | |

| | | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | | +Expand(All) | 19 | (anon_1)<-[anon_0:Ecosystem]-(root) | 0 | 1 | 3 | |

| | | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | | +NodeIndexSeek | 20 | RANGE INDEX anon_1:Ecosystem(ecosystem) | 0 | 1 | 2 | |

| | | | | | | | | WHERE ecosystem = $ecosystem1 | | | | |

| | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | +Filter | 21 | root:Pkg | 0 | 1 | 1 | |

| | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | +Expand(All) | 22 | (anon_8)<-[anon_7:Ecosystem]-(root) | 0 | 1 | 3 | |

| | | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | | +NodeIndexSeek | 23 | RANGE INDEX anon_8:Ecosystem(ecosystem) | 0 | 1 | 2 | |

| | | | | | | | WHERE ecosystem = $ecosystem2, | | | | |

| | | | | | | | cache[anon_8.ecosystem] | | | | |

| | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | +Filter | 24 | cache[anon_8.ecosystem] = $ecosystem2 AND | 0 | 1 | 2 | |

| | | | | | | | anon_8:Ecosystem | | | | |

| | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | +Expand(All) | 25 | (anon_10)<-[anon_9:Namespace]-(anon_8) | 0 | 1 | 4 | |

| | | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | | +NodeIndexSeek | 26 | RANGE INDEX anon_10:Namespace(namespace) | 0 | 1 | 2 | |

| | | | | | | WHERE namespace = $namespace2, | | | | |

| | | | | | | cache[anon_10.namespace] | | | | |

| | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | +Filter | 27 | cache[anon_3.namespace] = $namespace1 AND | 0 | 1 | 2 | |

| | | | | | | anon_3:Namespace | | | | |

| | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | +Expand(All) | 28 | (anon_5)<-[anon_4:Name]-(anon_3) | 0 | 1 | 3 | |

| | | | | +----+--------------------------------------------+------+------+------+--------+

| | | | +NodeIndexSeek | 29 | RANGE INDEX anon_5:Name(name) | 0 | 1 | 2 | |

| | | | | | WHERE name = $name1 | | | | |

| | | | +----+--------------------------------------------+------+------+------+--------+

| | | +Filter | 30 | cache[anon_10.namespace] = $namespace2 AND | 0 | 1 | 2 | |

| | | | | | anon_10:Namespace | | | | |

| | | | +----+--------------------------------------------+------+------+------+--------+

| | | +Expand(All) | 31 | (anon_12)<-[anon_11:Name]-(anon_10) | 0 | 1 | 3 | |

| | | | +----+--------------------------------------------+------+------+------+--------+

| | | +NodeIndexSeek | 32 | RANGE INDEX anon_12:Name(name) | 0 | 1 | 2 | |

| | | | | WHERE name = $name2, cache[anon_12.name] | | | | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Filter | 33 | v1.version = $version1 AND v1:Version | 0 | 1 | 2 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Expand(All) | 34 | (anon_5)-[anon_6:Version]->(v1) | 0 | 1 | 3 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +NodeIndexSeek | 35 | RANGE INDEX anon_5:Name(name) | 0 | 1 | 2 | |

| | | | WHERE name = $name1 | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 36 | cache[anon_12.name] = $name2 AND | 0 | 1 | 2 | |

| | | | anon_12:Name | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 37 | (v2)<-[anon_13:Version]-(anon_12) | 0 | 1 | 2 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +NodeIndexSeek | 38 | RANGE INDEX v2:Version(version) | 0 | 1 | 2 | |

| | | WHERE version = $version2 | | | | |

+------------------------------+----+--------------------------------------------+------+------+------+--------+

This time we see multiple branches where a range index is used to find a node and put it in the cache. There are several hash join operations, which are eager (they need to see all of the data from below before producing anything upstream). This is why these operators need to reserve memory. In the end, we have a wider but shallower tree.

However, when we get past a certain point in the ingestion process, the profile changes:

+------------------------------+----+--------------------------------------------+------+------+------+--------+

| Operator | Id | Details | Est. | Rows | DB | Memory |

| | | | Rows | | Hits | Bytes |

+------------------------------+----+--------------------------------------------+------+------+------+--------+

| +ProduceResults | 0 | | 0 | 0 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +EmptyResult | 1 | | 0 | 0 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Apply | 2 | | 0 | 1 | 0 | |

| |\ +----+--------------------------------------------+------+------+------+--------+

| | +LockingMerge | 3 | CREATE (anon_15:Dependency), | 0 | 1 | 5 | |

| | | | | (v1)-[anon_14:HasDependency]->(anon_15), | | | | |

| | | | | (anon_15)-[anon_16:Dependency]->(v2), | | | | |

| | | | | LOCK(v1, v2) | | | | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Expand(Into) | 4 | (v1)-[anon_14:HasDependency]->(anon_15) | 0 | 0 | 0 | 592 |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Filter | 5 | anon_15:Dependency | 0 | 0 | 0 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Expand(All) | 6 | (v2)<-[anon_16:Dependency]-(anon_15) | 0 | 0 | 4 | |

| | | +----+--------------------------------------------+------+------+------+--------+

| | +Argument | 7 | v2, v1 | 0 | 2 | 0 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 8 | v2.version = $version2 AND v2:Version | 0 | 1 | 126 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 9 | (anon_12)-[anon_13:Version]->(v2) | 0 | 125 | 128 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 10 | v1.version = $version1 AND v1:Version | 0 | 1 | 126 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 11 | (anon_5)-[anon_6:Version]->(v1) | 0 | 125 | 128 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 12 | anon_12.name = $name2 AND anon_12:Name | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 13 | (anon_10)-[anon_11:Name]->(anon_12) | 0 | 2 | 4 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 14 | anon_5.name = $name1 AND anon_5:Name | 0 | 1 | 3 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 15 | (anon_3)-[anon_4:Name]->(anon_5) | 0 | 2 | 4 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 16 | anon_10.namespace = $namespace2 AND | 0 | 1 | 3 | |

| | | | anon_10:Namespace | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 17 | (anon_8)-[anon_9:Namespace]->(anon_10) | 0 | 2 | 4 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 18 | anon_3.namespace = $namespace1 AND | 0 | 1 | 3 | |

| | | | anon_3:Namespace | | | | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 19 | (anon_1)-[anon_2:Namespace]->(anon_3) | 0 | 2 | 4 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Filter | 22 | root:Pkg | 0 | 1 | 1 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +Expand(All) | 23 | (anon_1)<-[anon_0:Ecosystem]-(root) | 0 | 1 | 4 | |

| | +----+--------------------------------------------+------+------+------+--------+

| +NodeIndexSeek | 24 | RANGE INDEX anon_1:Ecosystem(ecosystem) | 1 | 1 | 2 | |

| | | WHERE ecosystem = $ecosystem1 | | | | |

+------------------------------+----+--------------------------------------------+------+------+------+--------+

This looks almost identical to the case when no index is being used! But, on a careful read, we see that this execution actually starts from the index on an ecosystem node, instead of scanning all relationships between the root and the ecosystems. Since these profiles were obtained with \(B = 2\), there is not much difference to see between the cases. We do see some difference on operations 8-11, which is due to the large number of versions (\(N=1000\) means each package name has an average of \(\frac{N}{B^3} = 125\) versions, matching the 125 rows reported by operators 9 and 11). The query analyzer identified that most indexes are useless for this scenario! This happens even when using \(B=10\): once the number of database accesses using the plan with all indexes grows above a certain threshold, the optimizer switches to the new plan.
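The fan-out arithmetic behind the number of version rows can be checked directly. This small sketch assumes a full trie with \(B\) ecosystems, \(B\) namespaces per ecosystem and \(B\) names per namespace, with the \(N\) versions spread evenly; the helper name is made up for the illustration.

```python
def average_versions_per_name(n_packages: int, branching: int) -> float:
    """Average number of version nodes under each name node.

    Assumes a full trie with `branching` ecosystems, `branching` namespaces per
    ecosystem and `branching` names per namespace, so branching**3 names in total.
    """
    return n_packages / branching ** 3


print(average_versions_per_name(1000, 2))   # 125.0
print(average_versions_per_name(1000, 10))  # 1.0
```

The first value matches the 125 rows that the Expand(All) operators on Version relationships report in the last profile.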

Let’s move on. The next item to implement is batch ingestion of dependencies.

Just like batch ingestion of packages, this is trivial: just add the UNWIND prefix and do the mechanical transformations:

    class Neo4j(Experiment):
        #...
        def _do_ingest_batch_dependencies(self, dependencies: Sequence[Dependency]):
            def write(tx):
                query = """
                UNWIND $dependencies as dep
                MATCH (root:Pkg)
                MATCH (root) -[:Ecosystem]-> (:Ecosystem {ecosystem: dep.parent.ecosystem})
                             -[:Namespace]-> (:Namespace {namespace: dep.parent.namespace})
                             -[:Name]-> (:Name {name: dep.parent.name})
                             -[:Version]-> (v1:Version {version: dep.parent.version})
                MATCH (root) -[:Ecosystem]-> (:Ecosystem {ecosystem: dep.target.ecosystem})
                             -[:Namespace]-> (:Namespace {namespace: dep.target.namespace})
                             -[:Name]-> (:Name {name: dep.target.name})
                             -[:Version]-> (v2:Version {version: dep.target.version})
                MERGE (v1) -[:HasDependency]-> (:Dependency) -[:Dependency]-> (v2)
                """
                result = tx.run(query, dependencies=[asdict(d) for d in dependencies])
                return result.consume()

            with self._driver.session(database='neo4j') as session:
                session.execute_write(write)
        #...

Neo4j supports nested dictionaries, so the transformations are trivial.
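To make the nested-dictionary remark concrete, here is a minimal sketch of what ends up bound to `$dependencies`. The `Package` and `Dependency` dataclasses below are hypothetical stand-ins (the real definitions are not shown in this section); only the field names that the Cypher query above accesses are assumed.

```python
from dataclasses import asdict, dataclass


# Hypothetical stand-ins for the article's own types; only the field
# names used by the Cypher query (dep.parent.*, dep.target.*) are assumed.
@dataclass(frozen=True)
class Package:
    ecosystem: str
    namespace: str
    name: str
    version: str


@dataclass(frozen=True)
class Dependency:
    parent: Package
    target: Package


def to_query_parameter(dependencies):
    """Convert dependencies into the list of nested dicts bound to $dependencies."""
    # asdict() recurses into nested dataclasses, so each entry becomes
    # {"parent": {...}, "target": {...}} with plain string values.
    return [asdict(d) for d in dependencies]


deps = [
    Dependency(
        parent=Package("pypi", "ns1", "foo", "1.0"),
        target=Package("pypi", "ns2", "bar", "2.3"),
    )
]
params = to_query_parameter(deps)
# dep.parent.ecosystem in Cypher corresponds to params[i]["parent"]["ecosystem"].
print(params[0]["parent"]["ecosystem"], params[0]["target"]["version"])
```

Because the parameter is a list of maps of maps, `UNWIND` can iterate over it and the dotted accesses work without any flattening on the Python side.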

It is time to move to read-only queries. Here, instead of returning the entire pURL of a package, we will only return the version node (that is, the value \(x\)). In GUAC the queries are slightly longer as we want to return the full trie path to fill the GraphQL result. It is also possible to retrieve the version node first and then run a separate query to retrieve the pURL. Benchmarking which of the two is faster is out of scope for this article, but might be considered in a follow-up.
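As a rough illustration of the two-step alternative, the sketch below pairs a hypothetical follow-up query with a small formatting helper. The query text, the `format_purl` name and the choice to match on the `version` property are all assumptions made for illustration; in a real graph one would more likely match on the node's id. The helper also ignores percent-encoding, qualifiers and subpaths from the pURL specification.

```python
# Hypothetical follow-up query: walk from a known version back up the trie.
# Labels and relationship types mirror the ingestion queries in this article.
PURL_QUERY = """
MATCH (:Pkg) -[:Ecosystem]-> (e:Ecosystem)
             -[:Namespace]-> (ns:Namespace)
             -[:Name]-> (n:Name)
             -[:Version]-> (v:Version {version: $version})
RETURN e.ecosystem AS ecosystem, ns.namespace AS namespace,
       n.name AS name, v.version AS version
"""


def format_purl(record):
    """Build a pURL-like string from one result row (any mapping with the four keys)."""
    # The namespace segment is optional in a pURL, so skip it when empty.
    namespace = f"{record['namespace']}/" if record["namespace"] else ""
    return f"pkg:{record['ecosystem']}/{namespace}{record['name']}@{record['version']}"


row = {"ecosystem": "pypi", "namespace": "ns", "name": "foo", "version": "1.0"}
print(format_purl(row))
```

The trade-off is one extra round trip per result against a shorter first query, which is exactly what a follow-up benchmark would have to measure.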

For packages needing bootstrap (or invalid dependencies on the same package), we just need to identify diamond patterns like:

This pattern is easily representable in a query:

    class Neo4j(Experiment):
        #...
        def _do_query_bootstrap(self):
            def read(tx):
                pairs = []
                query = """
                MATCH (a:Version) <-[:Version]- (:Name) -[:Version]-> (b:Version)
                                  -[:HasDependency]-> (:Dependency) -[:Dependency]-> (a)
                RETURN b.version AS parent, a.version AS target
                """
                result = tx.run(query)
                for record in result:
                    pairs.append((record['parent'], record['target']))
                return pairs

            with self._driver.session(database='neo4j') as session:
                result = session.execute_read(read)
            return result
        #...

Since the read queries return results, profiling is slightly different:

    def read(tx):
        query = """
        PROFILE ...(rest of query)...
        """
        result = tx.run(query)
        # process the result
        # return result.consume() for the summary
        return data, result.consume()

    with self._driver.session(database='neo4j') as session:
        result, summary = session.execute_read(read)
    print(summary.profile['args']['string-representation'])

Finding packages that cross ecosystems is also easy once we look at the graph pattern:

We need to match on 2 ecosystem nodes, check that they are different, then expand to version and dependency nodes:

    class Neo4j(Experiment):
        #...
        def _do_query_ecosystem(self):
            def read(tx):
                pairs = []
                query = """
                MATCH (e1:Ecosystem)
                MATCH (e2:Ecosystem)
                WHERE e1.ecosystem <> e2.ecosystem
                MATCH (e1) -[:Namespace]-> (:Namespace) -[:Name]-> (:Name) -[:Version]-> (v1:Version)
                MATCH (e2) -[:Namespace]-> (:Namespace) -[:Name]-> (:Name) -[:Version]-> (v2:Version)
                MATCH (v1) -[:HasDependency]-> (:Dependency) -[:Dependency]-> (v2)
                RETURN v1.version AS parent, v2.version AS target
                """
                result = tx.run(query)
                for record in result:
                    pairs.append((record['parent'], record['target']))
                return pairs

            with self._driver.session(database='neo4j') as session:
                result = session.execute_read(read)
            return result

We already know that we can combine the 3 MATCH lines into a single one, with a wider pattern and no performance implication:

    query = """
    MATCH (e1:Ecosystem)
    MATCH (e2:Ecosystem)
    WHERE e1.ecosystem <> e2.ecosystem
    MATCH (e1) -[:Namespace]-> (:Namespace) -[:Name]-> (:Name) -[:Version]->
          (v1:Version) -[:HasDependency]-> (:Dependency) -[:Dependency]-> (v2:Version)
          <-[:Version]- (:Name) <-[:Name]- (:Namespace) <-[:Namespace]-(e2)
    RETURN v1.version AS parent, v2.version AS target
    """

Turns out that it is possible to include the ecosystem matching in the same pattern, without any significant difference in profiling:

    query = """
    PROFILE MATCH (e1:Ecosystem) -[:Namespace]-> (:Namespace)
                                 -[:Name]-> (:Name)
                                 -[:Version]-> (v1:Version)
                                 -[:HasDependency]-> () -[:Dependency]-> (v2:Version)
                                 <-[:Version]- (:Name)
                                 <-[:Name]- (:Namespace)
                                 <-[:Namespace]-(e2:Ecosystem)
    WHERE e1.ecosystem <> e2.ecosystem
    RETURN v1.version AS parent, v2.version AS target
    """

We’ll use the first version as it looks the most readable of all, at no performance cost.

Before continuing to the benchmarking results, I’d like to note that the Python driver for Neo4j has support for custom presentation formats for the query data, so that users of the library don’t need to replicate the same code. In a future article, when looking at path queries, I might decide to use these.
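As a database-free illustration of what the two read queries above select, here is a small in-memory sketch. The `Version` tuple and both function names are hypothetical; each dependency is modelled as a `(parent, target)` pair carrying its full trie path, which is an assumption made only to keep the example self-contained.

```python
from collections import namedtuple

# Hypothetical in-memory stand-in for a version node plus its trie path.
Version = namedtuple("Version", "ecosystem namespace name version")


def bootstrap_pairs(dependencies):
    """Dependencies whose parent and target hang off the same name node."""
    return [
        (parent.version, target.version)
        for parent, target in dependencies
        # Same ecosystem/namespace/name means both versions share one Name node.
        if parent[:3] == target[:3]
        # The Cypher pattern uses two distinct Version edges, so a != b.
        and parent != target
    ]


def cross_ecosystem_pairs(dependencies):
    """Dependencies whose parent and target live under different ecosystems."""
    return [
        (parent.version, target.version)
        for parent, target in dependencies
        if parent.ecosystem != target.ecosystem
    ]


deps = [
    (Version("pypi", "ns", "foo", "2.0"), Version("pypi", "ns", "foo", "1.0")),
    (Version("pypi", "ns", "foo", "2.0"), Version("npm", "ns", "bar", "0.1")),
    (Version("npm", "ns", "bar", "0.1"), Version("npm", "other", "baz", "3.0")),
]
print(bootstrap_pairs(deps))
print(cross_ecosystem_pairs(deps))
```

Only the first dependency is a bootstrap candidate and only the second one crosses ecosystems; the database queries express the same two filters as graph patterns instead of tuple comparisons.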

And now, it is time to look at the results:

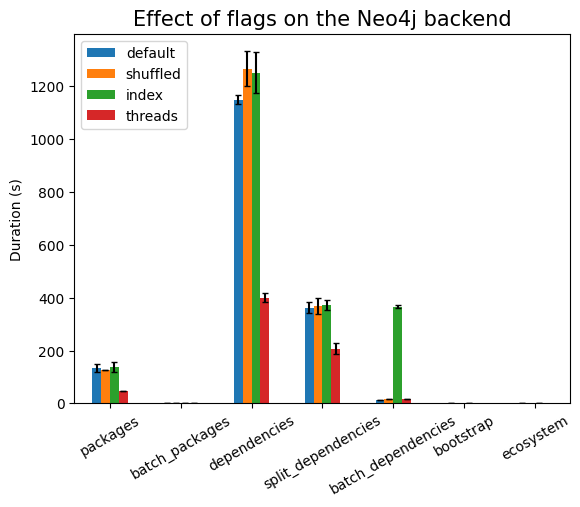

| Experiment | type | time (s) | stddev (s) |
|---|---|---|---|
| packages | | 134.540 | ±14.692 |
| | shuffled | 125.821 | ±0.549 |
| | threads | 47.136 | ±0.259 |
| | index | 138.285 | ±19.998 |
| batch_packages | | 1.189 | ±0.239 |
| | shuffled | 1.014 | ±0.038 |
| | threads | 1.041 | ±0.044 |
| | index | 1.260 | ±0.100 |
| dependencies | | 1149.795 | ±16.339 |
| | shuffled | 1266.392 | ±65.738 |
| | threads | 399.951 | ±17.468 |
| | index | 1251.333 | ±77.973 |
| split_dependencies | | 362.864 | ±21.274 |
| | shuffled | 368.909 | ±30.491 |
| | threads | 206.702 | ±20.790 |
| | index | 372.788 | ±19.062 |
| batch_dependencies | | 14.635 | ±0.209 |
| | shuffled | 15.409 | ±0.140 |
| | threads | 15.594 | ±0.128 |
| | index | 365.816 | ±6.122 |
| bootstrap | | 0.123 | ±0.032 |
| | index | 0.113 | ±0.026 |
| ecosystem | | 1.475 | ±0.037 |
| | index | 1.593 | ±0.069 |

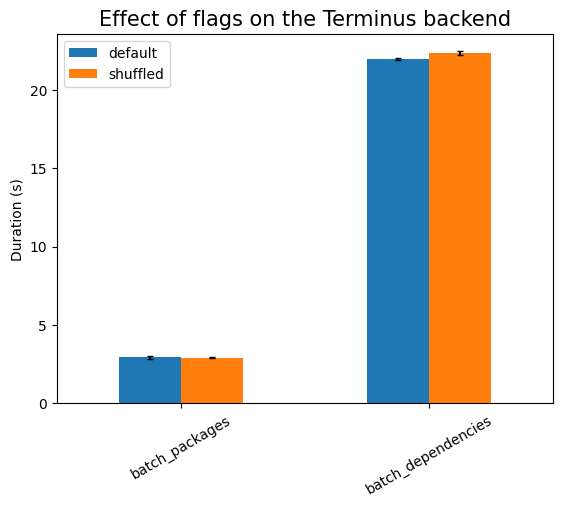

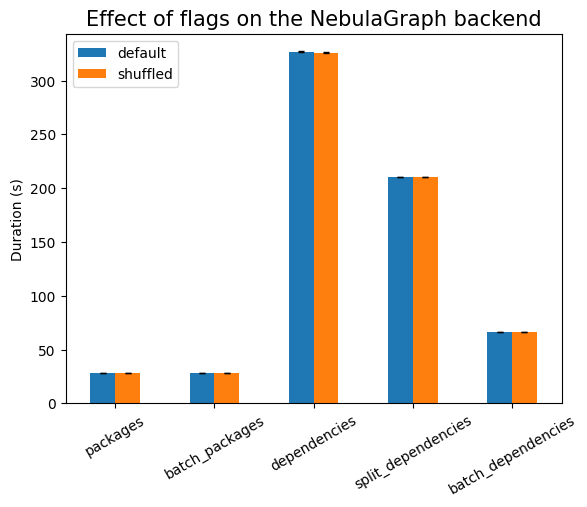

We see some surprising effects here. Using indexes does not work well when we do batch ingestion. The biggest difference is visible in the case of batch_dependencies, as can be seen in the following plot:

This is explained by the work needed to keep the indexes consistent during ingestion. In some ingestion scenarios it is better to drop the index, do the bulk ingestion, and then index again. I might run such experiments in a future article, but for now (since the data had already been generated by the time I wrote these sections) we’ll continue with this data.
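The drop, ingest, re-index sequence could look roughly like the sketch below. It was not part of the measured experiments. The helper name, the index names and the `RecordingSession` dry-run class are hypothetical; the function only assumes an object with a `run(query)` method, which a Neo4j session provides. Index and label names are interpolated into the query text, so they must be trusted constants.

```python
def ingest_with_index_rebuild(session, indexes, ingest):
    """Drop indexes, run bulk ingestion, then re-create the indexes.

    session: any object with a run(query) method (e.g. a Neo4j session).
    indexes: mapping of (hypothetical) index name -> (label, property).
    ingest: zero-argument callable performing the bulk ingestion.
    """
    for name in indexes:
        session.run(f"DROP INDEX {name} IF EXISTS")
    try:
        ingest()
    finally:
        # Re-create the indexes even if ingestion fails part-way.
        for name, (label, prop) in indexes.items():
            session.run(
                f"CREATE INDEX {name} IF NOT EXISTS FOR (n:{label}) ON (n.{prop})"
            )


class RecordingSession:
    """Dry-run stand-in that records queries instead of sending them."""

    def __init__(self):
        self.queries = []

    def run(self, query):
        self.queries.append(query)


session = RecordingSession()
ingest_with_index_rebuild(
    session,
    {"idx_ecosystem": ("Ecosystem", "ecosystem")},
    ingest=lambda: session.queries.append("-- bulk ingestion happens here --"),
)
print("\n".join(session.queries))
```

Since Neo4j populates a newly created index in the background, a fair benchmark of this approach would also need to include the time until the index is back online.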

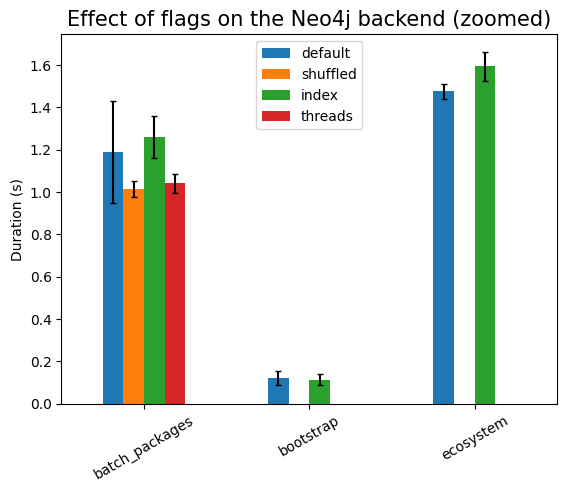

Note, though, that the index is not very effective in increasing the performance of the read queries, as can be seen when we zoom to the corresponding columns:

Next, we need to look at the performance of ingestion when the number of packages increases:

| Experiment | N | time (s) | stddev (s) |
|---|---|---|---|
| packages | 10 | 0.280 | ±0.041 |
| | 100 | 0.806 | ±0.026 |
| | 1000 | 5.148 | ±0.190 |
| | 10000 | 47.136 | ±0.259 |
| | 100000 | 512.069 | ±17.396 |
| batch_packages | 10 | 0.097 | ±0.018 |
| | 100 | 0.086 | ±0.009 |
| | 1000 | 0.184 | ±0.008 |
| | 10000 | 1.189 | ±0.239 |
| | 100000 | 12.026 | ±0.100 |
| dependencies | 10 | 0.878 | ±0.087 |
| | 100 | 4.938 | ±0.079 |
| | 1000 | 41.392 | ±0.686 |
| | 10000 | 399.951 | ±17.468 |
| split_dependencies | 10 | 1.726 | ±0.212 |
| | 100 | 4.344 | ±0.960 |
| | 1000 | 21.761 | ±0.553 |
| | 10000 | 206.702 | ±20.790 |
| batch_dependencies | 10 | 0.335 | ±0.034 |
| | 100 | 0.561 | ±0.035 |
| | 1000 | 4.283 | ±0.032 |
| | 10000 | 14.635 | ±0.209 |
| bootstrap | 10 | 0.031 | ±0.045 |
| | 100 | 0.013 | ±0.019 |
| | 1000 | 0.025 | ±0.020 |
| | 10000 | 0.113 | ±0.026 |
| ecosystem | 10 | 0.081 | ±0.138 |
| | 100 | 0.068 | ±0.075 |
| | 1000 | 0.210 | ±0.075 |
| | 10000 | 1.593 | ±0.069 |

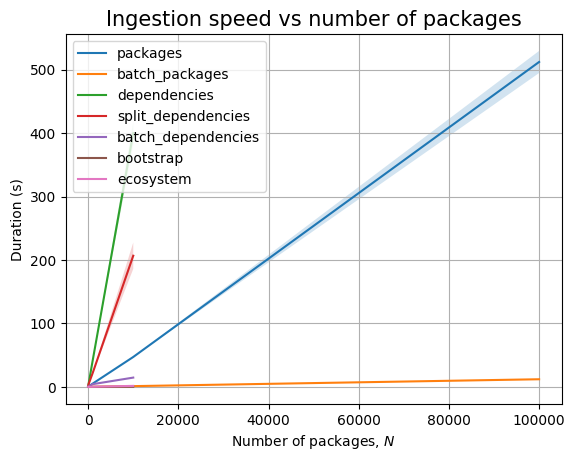

We are unable to ingest more than 100k packages within the limits imposed. In fact, for dependencies, we cannot even go above 10k!

Increasing \(N\) further makes the JVM throw out-of-memory errors when ingesting batches of more than 100k packages.
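One common way to sidestep such memory limits is to split a large batch into smaller ones before sending them. This was not done in the experiments here and would change what the batch benchmark measures, so the following is only a sketch; the `chunked` helper and the chunk size are hypothetical.

```python
from itertools import islice


def chunked(items, size):
    """Yield successive lists of at most `size` items from any iterable."""
    if size <= 0:
        raise ValueError("size must be positive")
    iterator = iter(items)
    # islice() returns an empty list once the iterator is exhausted,
    # which ends the loop.
    while chunk := list(islice(iterator, size)):
        yield chunk


# Each chunk could then be passed to a batch method such as
# _do_ingest_batch_dependencies(chunk), one transaction per chunk.
print([len(chunk) for chunk in chunked(range(25), 10)])
```

The chunk size then becomes one more parameter to tune: larger chunks amortize the per-transaction overhead, smaller ones keep the memory footprint of each transaction bounded.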

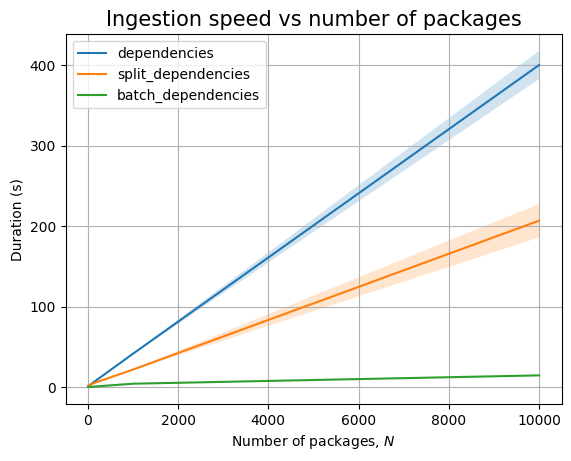

We cannot ingest 100k dependencies within the 30-minute limit, as the slope of the ingestion timing graph is too steep:

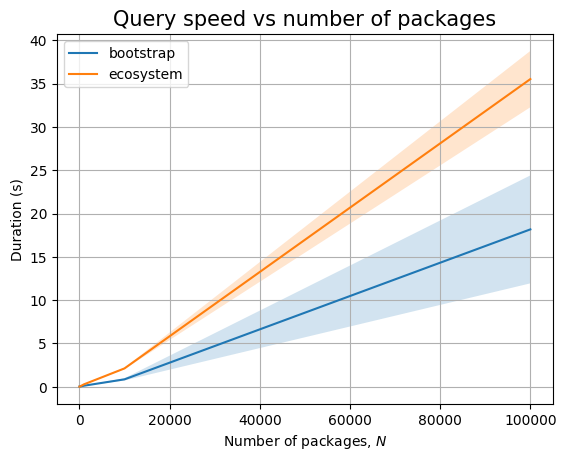

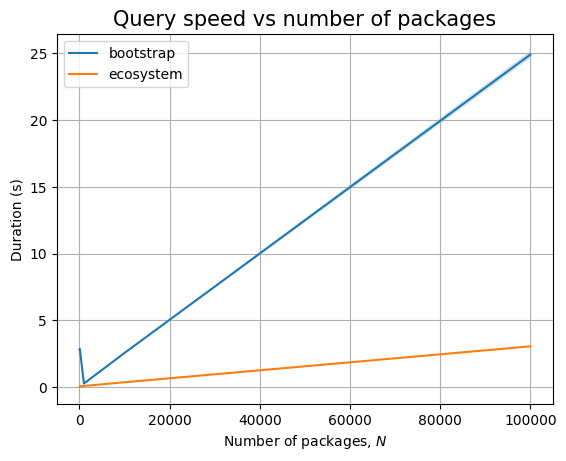

Thus, we can only run the read queries up to 10k packages, although we are able to ingest 100k packages. Because we don’t get dependency data for the remaining 90k packages, we won’t run queries above 10k:

The bootstrap patterns are simple, involving only 4 nodes, so the query runs very fast. The query that compares across ecosystem nodes is slow, even in the presence of indexes. This is probably because we still end up traversing large parts of the graph.

In any case, both ingestion and querying look to be linear in the database size.
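A quick way to sanity-check the linearity claim is to estimate the scaling exponent from the timings in the table above: if time grows as \(N^p\), then \(p\) is the slope between two points on a log-log plot, and \(p \approx 1\) means linear growth. The sketch below uses the \(N=1000\) measurement and the largest \(N\) measured for a few experiments; the `scaling_exponent` helper is hypothetical and ignores measurement noise.

```python
import math

# Timings in seconds copied from the table above: N = 1000 and the
# largest N that was measured for each experiment.
timings = {
    "packages": {1000: 5.148, 100000: 512.069},
    "batch_packages": {1000: 0.184, 100000: 12.026},
    "dependencies": {1000: 41.392, 10000: 399.951},
    "ecosystem": {1000: 0.210, 10000: 1.593},
}


def scaling_exponent(points):
    """Slope of log(time) versus log(N) between the smallest and largest N."""
    (n_lo, t_lo), (n_hi, t_hi) = min(points.items()), max(points.items())
    return math.log(t_hi / t_lo) / math.log(n_hi / n_lo)


exponents = {name: scaling_exponent(points) for name, points in timings.items()}
for name, exponent in exponents.items():
    print(f"{name}: {exponent:.2f}")
```

For these four experiments the exponent comes out between roughly 0.85 and 1.0, which is consistent with linear (or slightly sub-linear) growth over the measured range.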

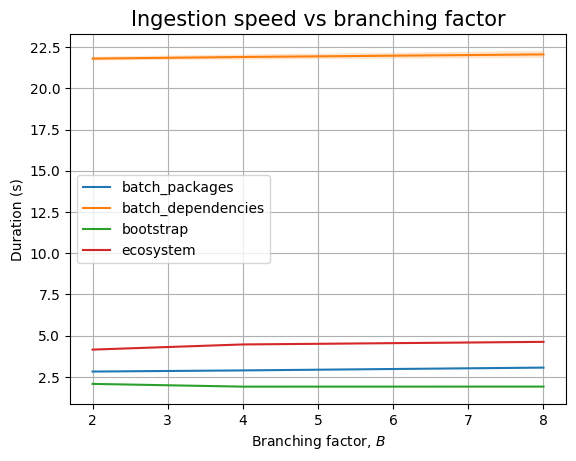

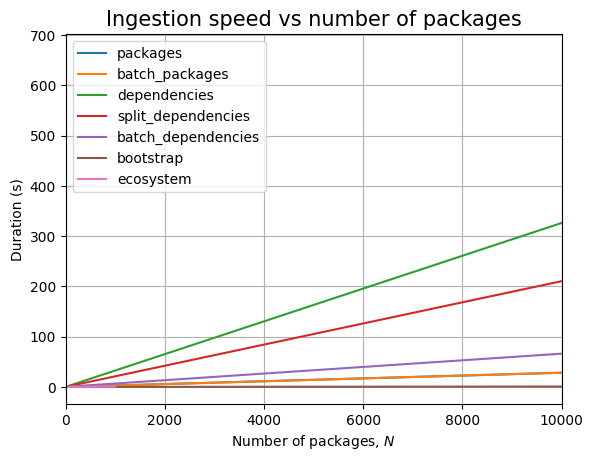

Looking at the branching factor, there seems to be a complicated relationship between ingestion time and \(B\):

| Experiment | B | time (s) | stddev (s) |
|---|---|---|---|
| packages | 2 | 58.210 | ±0.614 |
| | 4 | 52.356 | ±4.521 |
| | 8 | 47.117 | ±0.924 |
| batch_packages | 2 | 0.930 | ±0.196 |
| | 4 | 0.975 | ±0.021 |
| | 8 | 1.612 | ±0.008 |
| dependencies | 2 | 415.614 | ±19.497 |
| | 4 | 408.086 | ±16.535 |
| | 8 | 399.377 | ±25.351 |
| split_dependencies | 2 | 241.499 | ±9.845 |
| | 4 | 200.106 | ±15.192 |
| | 8 | 188.399 | ±9.147 |
| batch_dependencies | 2 | 245.788 | ±61.115 |
| | 4 | 200.918 | ±89.303 |
| | 8 | 180.162 | ±84.715 |
| bootstrap | 2 | 0.340 | ±0.014 |
| | 4 | 0.104 | ±0.008 |
| | 8 | 0.095 | ±0.008 |
| ecosystem | 2 | 1.010 | ±0.074 |
| | 4 | 1.455 | ±0.063 |
| | 8 | 1.743 | ±0.061 |

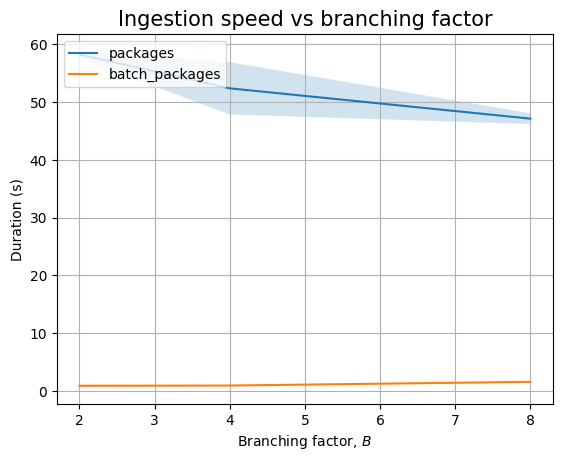

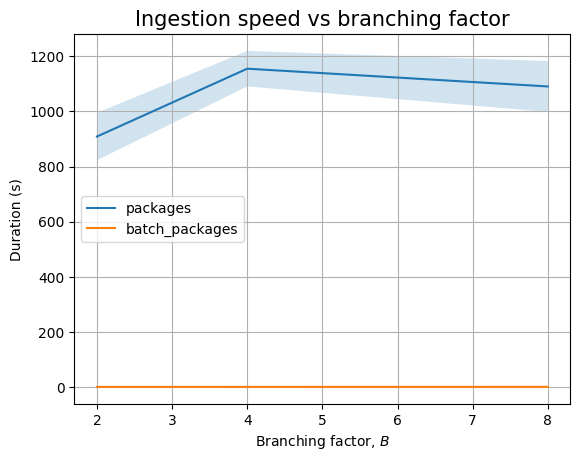

If we look at packages only, we see that batch ingestion time increases with \(B\), whereas ingesting packages one by one gets faster as \(B\) grows, even accounting for the measurement noise. As \(B\) increases while \(N\) is kept constant, a newly ingested package is less likely to hit internal nodes that already exist, so there is less locking that needs to happen:

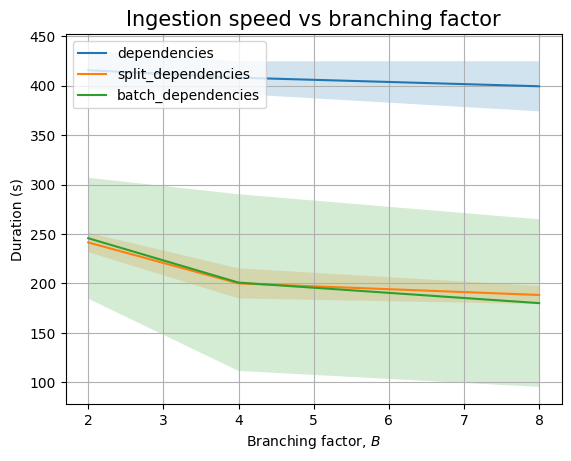
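To get a feel for how the trie changes with \(B\), the sketch below counts the internal nodes per level and the average number of versions under each name node, using the same \(\frac{N}{B^3}\) estimate as earlier in the article. It assumes that every level fans out exactly \(B\) ways and that \(N = 10000\) for this table; both are assumptions, and `trie_shape` is a hypothetical helper.

```python
def trie_shape(n_packages, branching):
    """Node counts per trie level, assuming each level fans out `branching` ways."""
    names = branching ** 3
    return {
        "ecosystems": branching,
        "namespaces": branching ** 2,
        "names": names,
        # Average number of version nodes hanging off each name node.
        "versions_per_name": n_packages / names,
    }


for b in (2, 4, 8):
    print(b, trie_shape(10_000, b))
```

Going from \(B=2\) to \(B=8\) spreads the same packages over 512 name nodes instead of 8, so far fewer concurrent writers touch the same internal node.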

If we look at dependencies only we see a similar decreasing trend, though mostly hidden by the noise:

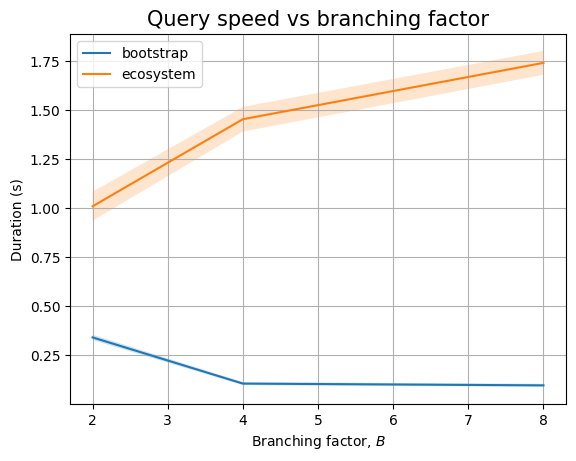

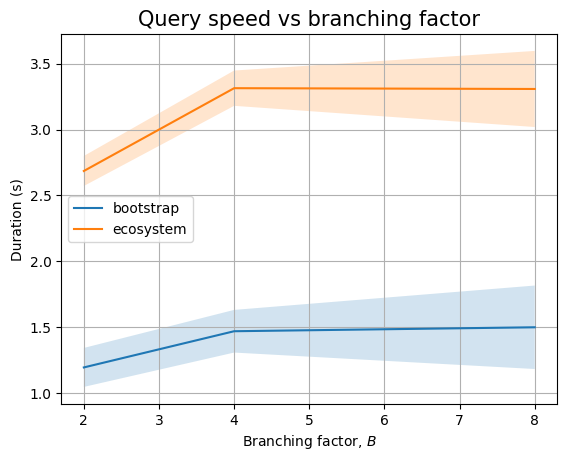

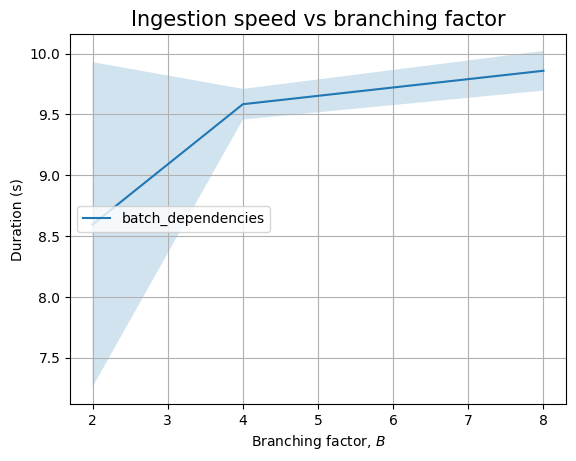

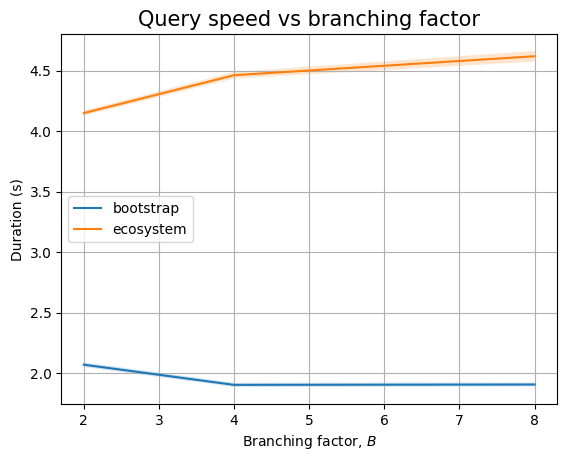

And, if we zoom in to the queries, we see two different trends. Queries that only look at package names seem to run in near constant time (assuming the \(B=2\) measurement is severely impacted by biased noise), whereas queries that need to look at internal nodes show an increasing trend. This could be explained by the fact that there are more edges to look at (proportional to \(B\)):

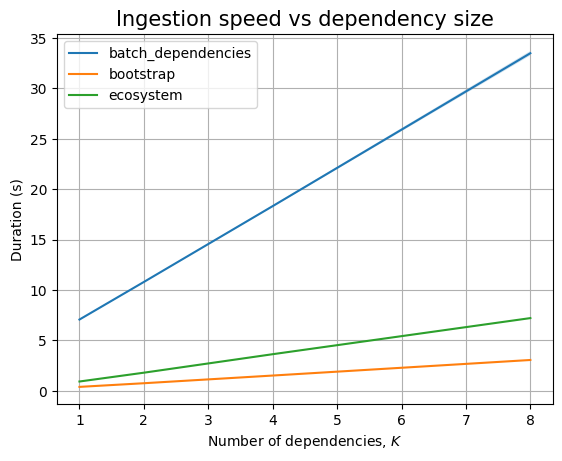

Next, we can look at \(K\):

| Experiment | K | time (s) | stddev (s) |
|---|---|---|---|
| dependencies | 1 | 127.698 | ±1.517 |
| | 2 | 203.142 | ±2.851 |
| | 4 | 344.805 | ±14.108 |
| | 8 | 599.730 | ±22.192 |
| split_dependencies | 1 | 107.059 | ±0.750 |
| | 2 | 130.092 | ±0.700 |
| | 4 | 186.104 | ±12.937 |
| | 8 | 273.566 | ±10.372 |
| batch_dependencies | 1 | 46.523 | ±0.347 |

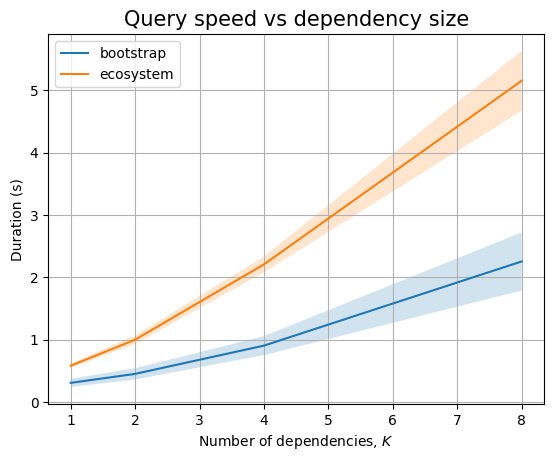

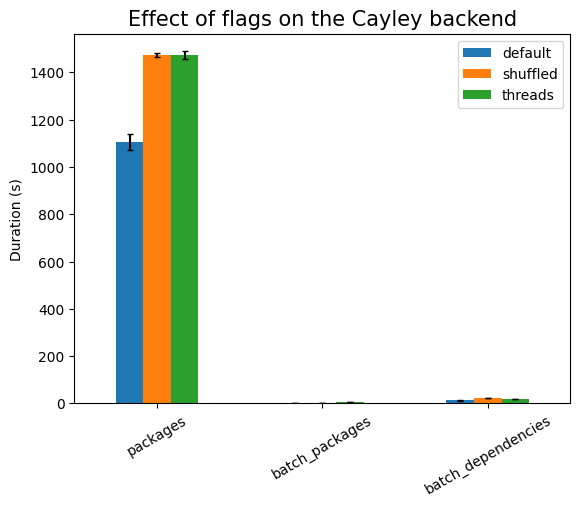

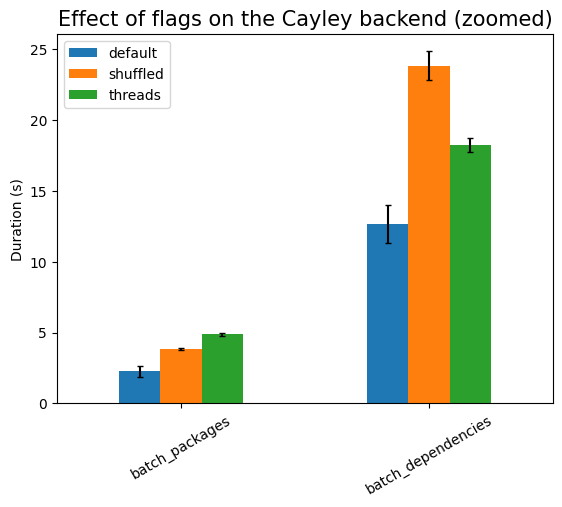

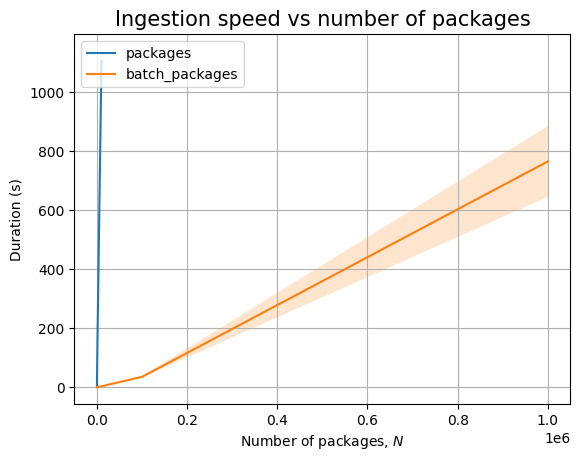

| 2 | 90.909 | ±0.941 | |