Using AI to add support for diagrams

In the last section of the previous article I presented a limit that was given as an exercise in Visual Differential Geometry and Forms: A Mathematical Drama in Five Acts by Tristan Needham. While trying to compute it, I realized I needed to draw some diagrams and plots of functions – something I will also need in future posts.

There is a trivial way to do that: open an external application, create the diagram there, save it as an image, and reference it from the post’s Markdown input. But there is a more ergonomic approach, where the diagram is also built from code and I don’t need to leave Vim.

Another reason for the timing of this article is the recent Google announcement that Bard can help with writing code¹. When I last tested ChatGPT ([1], [2]), I saw significant gaps in this area. Thus, I am using this post to feed two birds with the same scone: add the support needed to generate images from code, and test how well Bard and ChatGPT can write code for a complex application.

What tool to use?

There are 3 systems (that I know of) which can be used to draw diagrams from code. First, I could use TikZ, a LaTeX package. I already need to use LaTeX for the math in this blog, so this would not be a big addition. Except, I cannot declare and use LaTeX environments with the support that is provided by default – although Pandoc seems to understand LaTeX in Markdown. I would need to extend the blog engine to process the LaTeX input separately, or at least gain a deeper understanding of the issue.

Another alternative is to use Graphviz, but I quickly dropped it. Although it lets me write code as the diagram input, it requires calling an external tool that has no clean library interface, so it is not as easy to integrate with the blog generator. Plus, output control is not as fine-grained as with TikZ or the next alternative.

The final alternative is to use diagrams. I still need to extend the blog engine to support this, but this approach benefits from the fact that I would be writing Haskell to interpret Haskell code to generate stuff, a philosophy similar to that of the blog engine’s main library, Hakyll.

Hence, in this post we’ll add support for diagrams. If I find a scenario where TikZ would bridge a gap that diagrams cannot handle – mostly concerned with text placement, to be honest –, I could employ a similar solution to handle the TikZ pipeline.

Testing methodology and expectations

I know that the developers of both ChatGPT and Bard have tried their best to produce useful models. I also acknowledge that the examples shown on social media and in promotional articles have been cherry-picked. Hence, I want to test these engines myself, using various approaches. I hope that, after several experiments such as the ones in this article, I will no longer be “holding these GPT tools wrong” and will be able to better gauge their real capabilities. If the post is useful in improving these tools, that’s a nice bonus.

I know that both tools have been trained on less Haskell code than on code in other mainstream languages. Thus, I would expect the performance to not be too stellar, especially given that diagrams uses lenses heavily, relying a lot on the lens operators. As a result, I expect code errors that are harder to understand – but I hope the AI assistants will be helpful in parsing them –, as well as code that is not idiomatic.

Comparing the tools, I estimate that ChatGPT has seen at least 10 times more Haskell code, mostly because it was launched much earlier, at a time when everyone wanted to test everything. Bard’s initial launch was a fiasco, so I doubt as many people wanted to test it on Haskell code.

My initial plan was to lay out most of the skeleton of this article before even opening the web pages of the two AI systems. This would ensure that I could test the two systems with a minimal amount of bias, asking them similar questions.

However, as will become evident in the rest of the article, I took too long to finish writing it. Hence, before publishing, I will update several sections to test the AI systems at their latest versions. At the beginning of each section I will mention whether the content is a week old – that is, part of the pre-experimental skeleton –, or was updated just now, before publishing.

For the first experiment, I planned to ask both ChatGPT and Bard a single question:

You are an experienced Haskell programmer. You have a blog written using Hakyll and want to add support for diagrams to it, using the diagrams-lib library. How would you do that?

This is a wide open question, with minimal prompt engineering, just to see what the system outputs when not asked to reason step-by-step, when not given fine-grained prompts. The answers to this question are as given on Apr 21st, when I first started this.

My next step was to use the answer to the above to design – at a high level – what the solution should be. I was planning to start a fresh chat – clearing out all of the previous context –, and try to get each system to write working code. The end goal of this blog post was to extend the blog generator I am using to support generating diagrams specified in the diagrams DSL.

Thus, the second LLM experiment involved going step by step, feeding questions one by one to the system, based on previous results and current state. I was planning to ask five types of questions:

- do a thing (e.g., “Now, only do this action if input is of this type”)
- fix an error (e.g., “Code fails to compile with this error”)
- ask for documentation (e.g., “What are the constructors of this type?”)
- explain something (e.g., “Explain what this code does”)
- course corrections (e.g., “Given this correct code, do this change”)

I’m including the last type of prompt only because I hope that this will guide the LLM towards correct behaviors faster than using multiple versions of the other 4 types of prompts. However, I intend to use this extremely rarely, only when the LLM is stuck or very wrong.

To limit the extent of the experiment, I am only giving each system up to 50 prompts to solve the problem. I will not post all of them and their outputs as that would make the blog post too long and not as useful. I will try to give feedback on every wrong output.

For each question Bard provides up to 3 drafts. I will look at each one of them and continue with the most promising one. This inserts some bias, but there is no option to switch to a different draft of a previous prompt once a new one was given. Hence, I have to judge the drafts, pick the one that seems to be closer to my goal and continue from there.

I am using the latest versions available as of today. For ChatGPT, I am using the latest free version (based on GPT-3.5), without any plugins or integrations.

This being said, the original experiment following the above plan failed. Both AI systems got stuck the moment I tried to bring in a library with a more complex API (diagrams-builder). Since I wanted to have support for diagrams by the time this post got published, I needed to implement the solution myself. This took some time, during which the bots have likely evolved. Since I also gained more experience on what is possible, I decided to retry the step-by-step experiments on a new Git branch of the code, starting from the same point as the original experiment. This pollutes the experiment a little, but ensures that the results are as up to date as possible and are based on the actual desired outcomes.

Performance on the open question

Note: This section has not been updated since originally written as a skeleton. The prompt answers are the same as on Apr 21st.

As a reminder, for this section, I am testing both chat AI engines on this open-ended question:

You are an experienced Haskell programmer. You have a blog written using Hakyll and want to add support for diagrams to it, using the diagrams-lib library. How would you do that?

Both Bard and ChatGPT start by recommending steps to make sure diagrams-lib is available to the build system. In one of the two drafts, Bard recommends adding it to package.yaml for a Stack setup based on hpack (though it recommends a version number which does not exist):

```yaml
dependencies:
  diagrams-lib: ^0.10.0.0
```

The other Bard draft just says to use cabal install diagrams-lib. Although this makes significant assumptions about the build environment, it works, but it is not recommended if we want to integrate the library into another application.

ChatGPT also recommends adding the diagrams-lib library as a dependency to the project. It shows that it knows there are two complementary build tools (Cabal and Stack), but only gives an example for Stack: add diagrams-lib to build-depends in stack.yaml. This is invalid, as stack.yaml has no such field (build-depends belongs in the .cabal file).

After this point, the two tools diverge in their output. The first Bard draft tries to give some example code to generate a demo diagram:

```haskell
import Diagrams hiding (Diagram)

diagram = Diagram [
    Node "A",
    Node "B",
    Edge "AB" (A, B)
  ]
```

It looks like Bard is confusing diagrams-lib with Graphviz. There are no Node or Edge constructors in diagrams. Plus, even if a Diagram constructor existed, the import line hides it. So this code cannot compile as it is.

Bard continues the draft by stating I have to run render diagram. This makes sense, except there is no function render (and no binary with this name either). Again, it just looks like there is a confusion between diagrams and Graphviz.

Next, Bard tells me that this would output the following SVG:

```xml
<svg viewBox="0,0,100,100">
  <circle cx="50" cy="50" r="10" />
  <circle cx="100" cy="50" r="10" />
  <line x1="50" y1="50" x2="100" y2="50" />
</svg>
```

The SVG looks like 2 circles linked by a line, as you would expect if the input were a Graphviz file. But it is missing the node labels.
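For comparison, here is a minimal sketch of what two labelled nodes joined by an arrow could look like in diagrams proper, assuming the diagrams-lib and diagrams-svg packages. The names node and graph are illustrative: nodes are ordinary diagrams given a name with named, and connectOutside draws an arrow between the boundaries of the two named subdiagrams.

```haskell
{-# LANGUAGE FlexibleContexts #-}
{-# LANGUAGE TypeFamilies #-}
import Diagrams.Prelude
import Diagrams.Backend.SVG (B, renderSVG)

-- A labelled node: the text sits on top of a circle, and the circle is
-- named so that arrows can later find its boundary.
node :: String -> Diagram B
node label = text label # fontSizeL 0.4 <> circle 0.5 # named label

-- Two nodes side by side, separated by a strut, with an arrow from "A" to "B".
graph :: Diagram B
graph = (node "A" ||| strutX 2 ||| node "B") # connectOutside "A" "B"
```

Calling renderSVG "graph.svg" (mkWidth 200) graph from main would write the image to disk, with the labels that Bard's SVG lacks.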

In the other draft, Bard tells me to import the Diagrams module, create a new diagrams directory in my blog engine’s source code, add a new example.md file in that directory, write some diagram code in there, run hakyll build to generate the blog post, and see it in a browser. This is almost the flow I was looking for, but Bard does not give the details needed to add support for this flow.

In this second draft, Bard also tries to give me an example on how to generate a simple Venn diagram. But, it fails in an exceptional way:

I really hope that the bug of adding empty code blocks gets fixed soon!

Let’s now look at ChatGPT. It also tells me to import three modules: one module for the basic functionality of diagrams, and two modules for rendering the input to image files (such as Diagrams.Backend.PNG). This shows that ChatGPT has more knowledge about the usage patterns of diagrams. However, it is not exactly right: the diagrams-cairo package has a different API, and the names of the modules that need importing are different (for example, diagrams-cairo exposes Diagrams.Backend.Cairo).

After this, ChatGPT gives me an example of how to create a simple circle diagram, which is the first code sample so far that also works:

```haskell
import Diagrams.Prelude

myDiagram :: Diagram B
myDiagram = circle 1
```

Of course, this is a literal reproduction of the first code sample in the tutorial, minus the main function, one import needed for the rendering and some language extensions.

Next, ChatGPT tells me to use renderSVG from the diagrams-svg package to get an SVG file. The approach is correct, although recent versions of diagrams build the size argument with mkWidth 400; the Width constructor comes from older releases:

```haskell
import Diagrams.Backend.SVG

main :: IO ()
main = renderSVG "output.svg" (Width 400) myDiagram
```

Finally, ChatGPT tells me how to import this image into a Markdown blog article. Just like Bard, it tells me the expected flow, but it does not give me the steps needed to achieve this flow automatically.
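Put together, a complete version of ChatGPT's two snippets could look like the sketch below. It assumes diagrams-lib and diagrams-svg are available; renderMyDiagram and the output file name are illustrative, and the size is given with mkWidth.

```haskell
{-# LANGUAGE FlexibleContexts #-}
{-# LANGUAGE TypeFamilies #-}
import Diagrams.Prelude
import Diagrams.Backend.SVG (B, renderSVG)

-- The diagram from ChatGPT's answer: a circle of radius 1.
myDiagram :: Diagram B
myDiagram = circle 1

-- Write the diagram to an SVG file that is 400 units wide.
renderMyDiagram :: FilePath -> IO ()
renderMyDiagram path = renderSVG path (mkWidth 400) myDiagram
```

Calling renderMyDiagram "output.svg" from main produces the file ChatGPT describes.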

Overall, comparing the performance on the open-ended query, the two AI engines are almost tied. They both failed to answer the query I wanted answered, but they also both provided steps on how to write Haskell code to generate a diagram to include in a post – that is, they both explained the flow, but not how to automate it. ChatGPT provided more correct code, but that code is also reproduced almost entirely from the tutorial.

With either of these tools, after correcting the errors hallucinated by the parrots, one could get a working solution. Which is why I am hoping that the step-by-step process in the next sections is going to solve my actual problem.

The desired flow

Note: This section has been updated based on learning experiences I got while implementing the solution manually.

Before using the AI bots to help write code to extend the blog engine, let’s take a closer look at what I want to achieve.

One approach is to use the manual workflow suggested by the bots: write the diagram source code in a .hs file and compile it into a binary which produces the image file when run. Then, insert the diagram in the markdown post, using the corresponding markdown syntax:

But this process is still too manual, not too different from the process where I need to use an external tool to draw the diagram. In fact, it is even slower, as I need to deal with errors, etc., when designing the diagram for the first time. Furthermore, it results in multiple binaries and I need to run the corresponding ones to regenerate the needed diagram, thus I’ll need a more complex system to manage these dependencies.

A way to automate the process is to place all these diagrams into certain paths (e.g., a certain folder, or files following a certain naming pattern), then write Hakyll routing rules and Pandoc compilers to translate the .hs diagram input code into the needed image. I would still need to insert the markdown link, just as above, but the Pandoc compiler would be the one generating the images, as they are needed as inputs to the blog post. I could use diagrams-builder to pass a generic Haskell file that contains the diagram code and generate the target image. This way, I wouldn’t need to use multiple binaries.

The next step is to remove the need to write the input diagram code into a separate file. We can use a Pandoc fenced div to introduce the Haskell code used for the diagram directly in the input for the article, such as:

```markdown
::: diagram
myDiagram :: Diagram B
myDiagram = circle 1
:::
```

In this case, I only need to write a Pandoc filter that goes over the Pandoc blocks, identifies the fenced divs, generates the image and replaces the HTML <div> element with the corresponding image.

There is a problem, however. Although the fenced div is the recommended place to insert customization, it does not work for our case. This is because of the constructor for the (fenced) div:

```haskell
data Block
  = ...
  | CodeBlock Attr Text
  | Div Attr [Block]
  | ...
```

That is, a Div will contain a list of Blocks for all the content inside the <div>, as parsed by Pandoc. Since we want the contents of our fenced div to be treated as code, we must not apply any formatting; we must use the inside contents verbatim. Thus, we need to use code blocks, not divs.
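To make the difference concrete, here is a minimal sketch, assuming a recent pandoc-types (where code block contents are Text). It uses query from Text.Pandoc.Walk to collect the source of every code block with the diagram class; a Div with the same class is not matched, because its contents are already parsed into blocks. The names diagramSources and sample are illustrative.

```haskell
{-# LANGUAGE OverloadedStrings #-}
import Data.Text (Text)
import Text.Pandoc.Definition
import Text.Pandoc.Walk (query)

-- Collect the verbatim source of every code block tagged with "diagram".
-- query visits every Block in the document, including nested ones.
diagramSources :: Pandoc -> [Text]
diagramSources = query fromBlock
  where
    fromBlock :: Block -> [Text]
    fromBlock (CodeBlock (_, classes, _) src)
      | "diagram" `elem` classes = [src]
    fromBlock _ = []

-- A fenced div and a fenced code block, both with the "diagram" class.
sample :: Pandoc
sample = Pandoc nullMeta
  [ Div ("", ["diagram"], []) [Para [Str "myDiagram"]]
  , CodeBlock ("", ["diagram"], []) "myDiagram = circle 1"
  ]
```

Here diagramSources sample yields only the code block's text; the div's content arrives as a Para and would have to be reassembled from inlines.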

Initially I wanted to go one step further. Since diagrams may, in general, use common fragments to create larger images – which, in my opinion, is easier to do with diagrams than with TikZ –, I was planning to have 2 different custom code blocks: one to define common expressions and another one to actually evaluate them to generate the final image. That is, the following markdown sequence should have drawn only one diagram, with the diagram-def block not outputting anything:

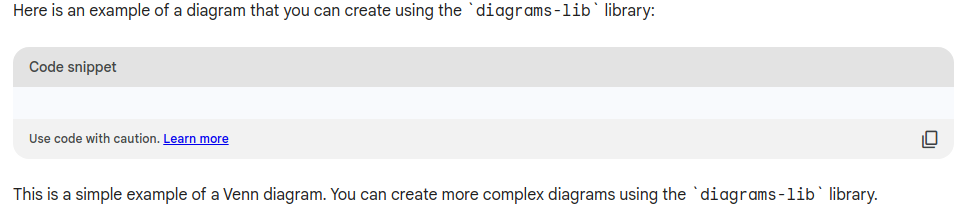

```diagram-def
circles = hcat (map circle [1..6])
```

```diagram
example = vcat (replicate 3 circles)
example
```

If later in the article I used circles (without redefining it), it should evaluate to the same concept. Basically, I wanted the blog engine to act as a GHCi REPL session for the diagrams. This turned out to take much more time than the upper bound I allocated for this article, so I decided against doing this for now. If I decide to add support for code interpretation in the future, I may also revisit this design point. For now, the requirement is to draw diagrams in a single code block, where the diagram expression to draw is given the name diagram. Thus, the above example should be:

```diagram

circles = hcat (map circle [1..6])

diagram = vcat (replicate 3 circles)

```

If there are some circles drawn above, then the blog engine is able to draw diagrams as desired.

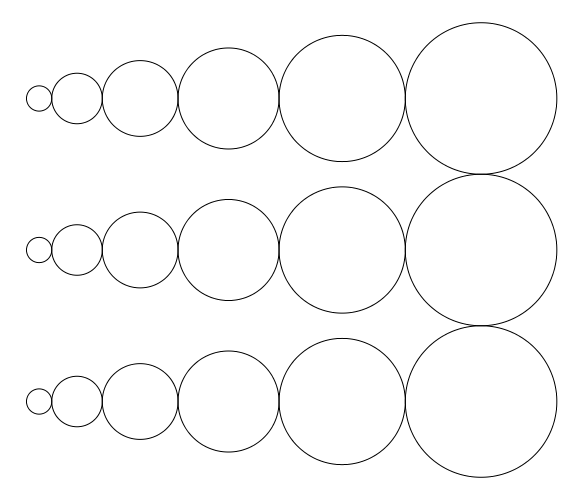

As another bonus, I wanted to only generate an image the first time its expression is present in any of the blog posts. This can be done by hashing the code block (and diagrams-builder supports that). Hence, repeating the above code would produce the same image:

There are several rendering backends that I can choose from. Given that the blog uses HTML5, initially I was thinking of using diagrams-html5 to generate HTML5 directly, using the <canvas> element. However, a combination of Nix and Haskell made this impossible: one of the needed dependencies is currently broken. The next solution was to use diagrams-svg and generate SVG output. This still results in a vector image, but there are issues with getting alt-text for the SVG: more work is needed to make the generator output an <img> together with a <figcaption> in a <figure> element. Furthermore, if the SVG is not saved to a file, each time the image code block is present again, it will be redrawn from scratch. Hence, I decided to use diagrams-rasterific to generate PNG files to include in the post as needed.
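A minimal sketch of the Rasterific route, assuming the diagrams-rasterific package: renderRasterific chooses the output format from the file extension, so a .png path yields a PNG. The helper renderPng, the 400-pixel width and the names circleRow and circleGrid are illustrative; the diagram itself is the circles example from above.

```haskell
{-# LANGUAGE FlexibleContexts #-}
{-# LANGUAGE TypeFamilies #-}
import Diagrams.Prelude
import Diagrams.Backend.Rasterific (B, renderRasterific)

-- A row of six circles of growing radius.
circleRow :: Diagram B
circleRow = hcat (map circle [1 .. 6])

-- Three such rows stacked vertically.
circleGrid :: Diagram B
circleGrid = vcat (replicate 3 circleRow)

-- Rasterize a diagram to a PNG file that is 400 pixels wide.
renderPng :: FilePath -> Diagram B -> IO ()
renderPng path = renderRasterific path (mkWidth 400)
```

A call such as renderPng "circles.png" circleGrid writes a bitmap that can be referenced from an <img> element like any other image.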

Thus, the outline of the changes I want to make to my blog engine is the following:

- add a new Pandoc filter to identify the custom code blocks
- for each custom block, use diagrams-builder to interpret the diagrams code, generating PNG output
- replace each custom block in the output HTML with the corresponding Pandoc blocks to generate the expected <figure> combination.
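For the last step, the replacement can be built directly from Pandoc blocks. The sketch below assumes pandoc-types 1.23 or newer, which added a Figure block (older versions have no Figure constructor); diagramFigure and its arguments are hypothetical names, with the path expected to point at the generated PNG.

```haskell
{-# LANGUAGE OverloadedStrings #-}
import Data.Text (Text)
import Text.Pandoc.Definition

-- Build a <figure>: the image, plus a caption that doubles as alt text.
diagramFigure :: Text -> Text -> Block
diagramFigure caption path =
  Figure nullAttr
    (Caption Nothing [Plain [Str caption]])
    [Plain [Image nullAttr [Str caption] (path, "")]]
```

The HTML writer renders a Figure as <figure> with a <figcaption>, which covers the alt-text concern raised for the SVG route.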

I’d like to get help from the LLM bots in implementing this task, with the outline given above. Let’s see how they perform.

Bard’s performance on step-by-step reasoning

Note: This section has been rewritten after the entire support for diagrams has been added to the blog post.

Let’s start with Bard, as this is the tool involved in the recent announcement. First, I’m resetting the chat, to remove all the previous questions from the context (I hope). The first question I’m asking is based on what is currently used by my blog generator:

You are an experienced Haskell programmer. Please explain to me what the following code using Hakyll and Pandoc does

```haskell
blogCompiler :: Compiler (Item String)
blogCompiler = pandocCompilerWithTransform readOptions writeOptions xforms
  where
    xforms = addSectionLink
```

A week ago, Bard failed to read the type of the function correctly. In all three drafts, Bard read the type of blogCompiler as being a function from Item String to some Compiler (an incomplete type!).

This time, though, in one of the drafts, Bard understood the code correctly – if we ignore the fact that it thinks the blog post source of truth is a Haskell file instead of a Markdown one, and if we ignore that it considers xforms to be a list:

Sure. The code you provided is a function called blogCompiler that takes no arguments and returns a Compiler. A Compiler is a type in the Hakyll library that is used to compile a blog post from a Haskell source file to a static website. The blogCompiler function uses the pandocCompilerWithTransform function from the Pandoc library to compile the blog post. The pandocCompilerWithTransform function takes three arguments:

- readOptions: A list of options that control how the blog post is read from the Haskell source file.
- writeOptions: A list of options that control how the blog post is written to the static website.
- xforms: A list of transformations that are applied to the blog post before it is written to the static website.

The blogCompiler function defines the xforms variable to be a list that contains a single transformation, the addSectionLink transformation. The addSectionLink transformation adds a link to the section headings in the blog post.

It has the two issues mentioned above, but it also correctly guessed what the addSectionLink transformation does. Using this draft, I ask it the next question:

Given the above code, I want to add a new transform, called addDiagrams. Can you write the new code for me?

In all 3 drafts, Bard added a new transform to xforms, but assumed it is a list. So, instead of function composition, it tried to use list operations:

```haskell
-- Generated by Bard, incorrect
blogCompiler :: Compiler (Item String)
blogCompiler = pandocCompilerWithTransform readOptions writeOptions xforms
  where
    xforms = addSectionLink : addDiagrams
```

In the past experiment, in one of the drafts, Bard realised that the two transformations have the same type, so they cannot simply be linked with :, and suggested addSectionLink : [ addDiagrams ] instead. It did not do this, this time. In any case, this code does not compile, so let’s ask Bard to fix it.

Unfortunately, directly asking for a fix fails. If I give Bard only the error message from the type mismatch on xforms in blogCompiler, Bard suggests the exact code as above. If I give it the error message from the line under where, Bard suggests the even worse [addSectionLink] : addDiagrams. When I point out that this is still wrong, Bard cleverly replies with correct code that no longer uses addDiagrams.

If I hint Bard that it needs to deal with 2 functions, not lists, it finally produces (in 1 of the 3 drafts) correct code:

```haskell
-- Generated by Bard, correct
blogCompiler :: Compiler (Item String)
blogCompiler = pandocCompilerWithTransform readOptions writeOptions xforms
  where
    xforms = addSectionLink . addDiagrams
```

At this point, we can progress to the next step. So, for question #8 I ask Bard the following:

Perfect. Now for addDiagrams, let’s walk the Pandoc document doing something on code blocks and returning the other blocks unchanged

Unfortunately, Bard generates an expression with the wrong type and contents:

```haskell
-- Generated by Bard, incorrect
addDiagrams :: PandocTransform
addDiagrams = toPandoc $ \doc ->
  let
    diagrams = [
      ( "diagram1", "https://example.com/diagram1.png" ),
      ( "diagram2", "https://example.com/diagram2.png" )
      ]
  in ...
```

I have to ask Bard a documentation question:

What do the walk and walkM functions in Pandoc do?

Bard replies correctly in all 3 drafts. In one of them, it even gives me type signatures and examples of how I could use them. So now I ask Bard to use these to implement addDiagrams. Since I also told it what type I expect for the function, Bard selects the correct walkM function, although it still uses the hardcoded let as above. So, it is time to do a course correction as question #13:

I’ll give you a hint. The function should start with

```haskell
addDiagrams = walkM $ ...
```

In one of the drafts Bard produces the same wrong code, but in the other 2 it gets a better version:

```haskell
-- Generated by Bard, not fully correct
addDiagrams = walkM $ \block ->
  case block of
    CodeBlock code -> do
      let
        diagram = diagrams !! 0
        link = toLink diagram
      in
        return $ CodeBlock $ toString $ link
    _ -> return block
```

It lost the type signature (not a big issue) and it references an undefined diagrams variable – but this is in code that we haven’t yet asked Bard to write.

What’s bad, though, is that it has not matched the number of arguments of CodeBlock. After I paste the error message and ask for some changes to match the variable name with the expected type, by question #18 Bard produces:

```haskell
-- Generated by Bard, not fully correct
addDiagrams = walkM $ \block ->
  case block of
    CodeBlock attributes code -> do
      let
        diagram = diagrams !! 0
        link = toLink diagram
      in
        return $ CodeBlock $ toString $ link { attributes = attributes }
    _ -> return block
```

In any case, the next step is to only work on code blocks that have "diagram" as one of their classes. So, question #19:

Can you change the code to only change the codeblock if “diagram” is in one of the classes in attributes?

Bard generates some code, but it is not fully correct. Focusing on the novel part, we see it knows to return the original block on the else branch (it would be nice to merge this with the other pattern of the case).

```haskell
-- Generated by Bard, not fully correct, fragment
if "diagram" `elem` attributes then
  return $ CodeBlock $ toString $ link { attributes = attributes }
else
  return block
```

However, it does not realize that attributes actually stands for a triple (and I’m ignoring the code in the then branch, as we have not yet told Bard what to do there).
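For reference, the Attr carried by CodeBlock is a triple of identifier, classes and key-value pairs, so the class test has to look inside it. Here is a minimal sketch with walkM, assuming recent pandoc-types; it runs in Either instead of Hakyll's Compiler only so the example stays self-contained, and markDiagram, markDiagrams and the placeholder paragraph are illustrative.

```haskell
{-# LANGUAGE OverloadedStrings #-}
import Data.Text (Text)
import qualified Data.Text as T
import Text.Pandoc.Definition
import Text.Pandoc.Walk (walkM)

-- Rewrite only code blocks whose classes include "diagram"; every other
-- block is returned unchanged. Empty diagram blocks are reported as errors.
markDiagram :: Block -> Either Text Block
markDiagram (CodeBlock (_, classes, _) src)
  | "diagram" `elem` classes =
      if T.null (T.strip src)
        then Left "empty diagram block"
        else Right (Para [Str "diagram placeholder"])
markDiagram block = Right block

-- walkM applies the monadic function to every Block in the document.
markDiagrams :: Pandoc -> Either Text Pandoc
markDiagrams = walkM markDiagram
```

The guard folds the else branch into the catch-all equation, which is the merge suggested above.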

After a few more course corrections and error fixing, Bard produces (as answer to the 24th prompt):

```haskell
-- Generated by Bard, not fully correct, fragment
addDiagrams = walkM $ \block ->
  case block of
    CodeBlock (_, classes, attrs) code | "diagram" `elem` classes -> do
      let
        diagram = diagrams !! 0
        link = toLink diagram
      in
        return $ CodeBlock $ toString $ link { attrs = attrs }
    _ -> return block
```

Question #25 is

Replace the do block with a call to compileDiagram that takes as arguments attrs and code and returns IO Result.

Bard ignores this task in 2 drafts, but in the third it produces

```haskell
-- Generated by Bard, not fully correct
addDiagrams = walkM $ \block ->
  case block of
    CodeBlock (_, classes, attrs) code | "diagram" `elem` classes -> do
      link <- compileDiagram attrs code
      return $ CodeBlock $ toString $ link { attrs = attrs }
    _ -> return block
```

We still need to change what the code does with the result of compileDiagram (i.e., link). But first, we need to make sure that the types of addDiagrams match.

By prompt #33 Bard generates

```haskell
-- Generated by Bard, not fully correct
addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams = unsafeCompiler . walkM $ \case
  CodeBlock as@(_, classes, attrs) src | "diagram" `elem` classes -> do
    let
      diagram = Diagrams.Backend.Pandoc.compileDiagram src
    in
      setAttr attrs "href" $ toString $ diagram { attrs = attrs }
  block -> return block
```

Now, we only need to fix the usage of compileDiagram (Bard picked a function which does not exist) and then we need to implement it. By question #40, with a number of course corrections and splitting the tasks into very fine-grained demands, Bard gets to

```haskell
-- Generated by Bard, not fully correct
addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams = unsafeCompiler . walkM \case
  CodeBlock as@(_, classes, attrs) src | "diagram" `elem` classes ->
    compileDiagram attrs src >>= \case
      Failure err -> return $ Div ("", ["error"], []) [Plain [Str err]]
      Success img ->
        return $ Para [Img src]
  block -> return block
```

It is clear that Bard does not understand Pandoc blocks and attributes. I told it what to write in the Failure case and gave it hints about what I want for the Success case, but it failed to use the proper construct: there is no Img constructor, and Pandoc's Image takes attributes, alt text and a target.

However, this is already more progress than what I got a week ago in 50 questions! It seems Bard has improved considerably in a single week!
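For comparison, this is roughly what the two branches could look like with Pandoc's actual constructors. CDResult mirrors the Failure/Success cases from my prompts, but its definition here (an error message or an image path) is an assumption, as are the names; Image takes attributes, alt-text inlines and a (url, title) target.

```haskell
{-# LANGUAGE OverloadedStrings #-}
import Data.Text (Text)
import qualified Data.Text as T
import Text.Pandoc.Definition

-- Hypothetical result of compiling one diagram block.
data CDResult = Failure Text | Success FilePath

-- Turn the result into the block that replaces the original code block:
-- an error div on failure, a paragraph holding the image on success.
resultToBlock :: Text -> CDResult -> Block
resultToBlock _ (Failure err) = Div ("", ["error"], []) [Plain [Str err]]
resultToBlock alt (Success path) =
  Para [Image nullAttr [Str alt] (T.pack path, "")]
```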

Let’s use the remaining 10 questions to test Bard’s capabilities on the diagrams-builder API. Unfortunately, Bard hallucinates the API, even when provided with a link to the documentation itself. It also does not understand the lens-based API, so I have to course correct it heavily. By the 50th prompt I get

```haskell
-- Generated by Bard, not fully correct
compileDiagram :: [(Text, Text)] -> Text -> IO CDResult
compileDiagram as src = buildDiagram (diagramDef as src) >>= \result -> do
  case result of
    ParseErr error -> return $ Failure error
    InterpErr error -> return $ Failure error
    Skipped _ -> return $ Success ""
    OK hash result -> return $ Success hash
```

This is almost OK: the interpreter error needs to be pretty-printed (diagrams-builder provides ppInterpError for this), and in the success cases it needs to return a path to an image file.

Overall, I asked Bard 20 times to do something, 9 times to fix an error, 5 times to document an API, 4 times to explain what some code does and 12 times to course correct. Compared to last week, when there were only 2 course corrections and 26 error corrections, this time it got much further.

Note that all of this is after I knew what code I needed to write. When I tested Bard a week ago, before looking at the documentation and trying to implement this myself, it did not progress at all past what is now question #24 above!

ChatGPT’s performance on step-by-step reasoning

Note: This section has been rewritten after the entire support for diagrams has been added to the blog post.

Moving now to ChatGPT, there is a decision to make: I could continue developing the code from where Bard left off – thus increasing the chances of getting to working code by the end of the experiment –, or I could start from scratch – thus testing ChatGPT in the same scenario as Bard. In the end, I decided to go with the second approach.

Just like in the Bard scenario, I am starting a new chat, using the following prompt:

You are an experienced Haskell programmer. Please explain to me what the following code using Hakyll and Pandoc does

```haskell
blogCompiler :: Compiler (Item String)
blogCompiler = pandocCompilerWithTransform readOptions writeOptions xforms
  where
    xforms = addSectionLink
```

ChatGPT said that blogCompiler is a function with no arguments, producing a Compiler which produces an Item that contains a String (weird). It says that Item represents a file (not exactly correct) and that Compiler compiles input files to output files (trivial, and it does not explain what these files are).

However, it correctly identified that the transformation from addSectionLink is just a function, not a list.

Just like in the Bard scenario, the next question is

Given the above code, I want to add a new transform, called addDiagrams. Can you write the new code for me?

While a week ago ChatGPT identified that I need to use function composition, this time it generated code using <>, which I had to check to see whether it is correct or not:

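```haskell
-- Generated by ChatGPT, accidentally compiles
import Text.Pandoc.Options
import Hakyll.Web.Pandoc

blogCompiler :: Compiler (Item String)
blogCompiler = pandocCompilerWithTransform readOptions writeOptions xforms
  where
    xforms = addSectionLink <> addDiagrams

addDiagrams :: Pandoc -> Pandoc
addDiagrams = undefined -- TODO: implement your custom transformation here
```

Moment of truth: using <> is not correct in general, and here it only type-checks because functions into a Semigroup are themselves a Semigroup and Pandoc is an instance of Semigroup. The result is not a composition, though: (addSectionLink <> addDiagrams) doc is addSectionLink doc <> addDiagrams doc, which concatenates two transformed copies of the document.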

Furthermore, it is nice that ChatGPT added the required imports and generated a stub for addDiagrams – Bard always wanted to generate the code for this new function, and then got confused there.
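To see why <> cannot stand in for composition here, consider two toy transforms standing in for addSectionLink and addDiagrams (appendA and appendB are made up for this sketch, which assumes pandoc-types). Composing them applies one after the other; combining them with <> runs both on the original document and concatenates the results.

```haskell
{-# LANGUAGE OverloadedStrings #-}
import Text.Pandoc.Definition

-- Toy transforms: each appends a paragraph to the document.
appendA, appendB :: Pandoc -> Pandoc
appendA (Pandoc meta blocks) = Pandoc meta (blocks ++ [Para [Str "A"]])
appendB (Pandoc meta blocks) = Pandoc meta (blocks ++ [Para [Str "B"]])

original :: Pandoc
original = Pandoc nullMeta [Para [Str "body"]]

-- Composition: appendB runs first, then appendA (body, B, A).
composed :: Pandoc
composed = (appendA . appendB) original

-- Semigroup on functions: appendA original <> appendB original,
-- so the body appears twice (body, A, body, B).
combined :: Pandoc
combined = (appendA <> appendB) original

blocksOf :: Pandoc -> [Block]
blocksOf (Pandoc _ blocks) = blocks
```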

The <> operator does not work if addDiagrams is not a pure transformation from Pandoc to Pandoc – which it is not in the manually written implementation. If I ask ChatGPT to make addDiagrams return a Compiler Pandoc, it only changes that function:

```haskell
-- Generated by ChatGPT, no longer correct
import Text.Pandoc.Options
import Hakyll.Web.Pandoc

blogCompiler :: Compiler (Item String)
blogCompiler = pandocCompilerWithTransform readOptions writeOptions xforms
  where
    xforms = addDiagrams <> addSectionLink

addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams pandoc = do
  -- TODO: implement your custom transformation here
  return pandoc
```

When I ask ChatGPT to fix the compile error, it first tries to change the type of addSectionLink. Trying again, at prompt #10 it finally generates the following stub:

```haskell
-- Generated by ChatGPT, almost correct but also over complicated
import Control.Monad ((<=<))
import Text.Pandoc.Options
import Hakyll.Web.Pandoc

blogCompiler :: Compiler (Item String)
blogCompiler = pandocCompilerWithTransform readOptions writeOptions xform
  where
    xform = addDiagrams <=< pure . addSectionLink

addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams pandoc = undefined -- TODO: implement your custom transformation here

addSectionLink :: Pandoc -> Pandoc
addSectionLink pandoc = undefined -- TODO: implement your custom transformation here
```

Although I told it not to change addSectionLink, it is still included in the code.

When I ask ChatGPT if function composition could be used in `xform`, it wrongly replies:

No, `addDiagrams . addSectionLink` wouldn't work because the types don't match up. `addSectionLink` has the type `Pandoc -> Pandoc`, which means it takes a `Pandoc` and returns a `Pandoc`. On the other hand, `addDiagrams` has the type `Pandoc -> Compiler Pandoc`, which means it takes a `Pandoc` and returns a `Compiler Pandoc`.

To compose `addDiagrams` and `addSectionLink`, you need to find a way to convert the `Pandoc` returned by `addSectionLink` to a `Compiler Pandoc` so that it can be fed into `addDiagrams`. This is what we did with `pure . addSectionLink` in the previous example.

The first paragraph is correct, but the second one has the logic reversed :( I need to tell it that it can actually do it:

You can combine them. The LHS of `.` returns a `Pandoc` and the RHS wants a `Pandoc` to return a `Compiler Pandoc`.

But there is still one error due to the use of `pandocCompilerWithTransform` instead of `pandocCompilerWithTransformM`, the variant whose transform argument has type `Pandoc -> Compiler Pandoc`.
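The point of my prompt can be checked with a small, self-contained sketch. Here `Either String` stands in for Hakyll's `Compiler` (any monad behaves the same way), and the two transforms are hypothetical toys over `String` rather than `Pandoc`. A pure step followed by a monadic step composes with plain `.`, while ChatGPT's `<=< pure .` form computes the same result with a detour through `pure`:

```haskell
import Control.Monad ((<=<))

-- Hypothetical pure transform, standing in for addSectionLink.
addSectionLinkToy :: String -> String
addSectionLinkToy doc = doc ++ " [link]"

-- Hypothetical effectful transform, standing in for addDiagrams.
-- Either String plays the role of a monad that can fail, like Compiler.
addDiagramsToy :: String -> Either String String
addDiagramsToy doc
  | "broken" `elem` words doc = Left "cannot render diagram"
  | otherwise = Right ("<svg/> " ++ doc)

-- Plain composition: the pure result is fed straight into the monadic step.
xformCompose :: String -> Either String String
xformCompose = addDiagramsToy . addSectionLinkToy

-- Kleisli version: lift the pure step with pure, then chain with <=<.
xformKleisli :: String -> Either String String
xformKleisli = addDiagramsToy <=< pure . addSectionLinkToy
```

Both functions agree on every input; for instance, each maps `"post"` to `Right "<svg/> post [link]"`. In the blog, the composed `Pandoc -> Compiler Pandoc` function is what would be passed to `pandocCompilerWithTransformM`.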

At this point, the only way to make progress is to course correct and then ask it to fill in `addDiagrams`. I need to tell it to use `walkM` and then it generates (prompt #21):

```haskell
-- Generated by ChatGPT, correct code
addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams = walkM addDiagramsBlock
  where
    addDiagramsBlock :: Block -> Compiler Block
    addDiagramsBlock (CodeBlock attrs code) =
      -- do something with the code block here
      return $ CodeBlock attrs ("Modified: " ++ code)
    addDiagramsBlock block = return block
```

While this arrives later than with Bard, it used the correct number of arguments from the start. It even knows to use the `LambdaCase` extension!!

```haskell
-- Generated by ChatGPT, correct code
addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams = walkM $ \case
  CodeBlock (id', classes, namevals) contents -> do
    let newContents = "New contents"
    return $ CodeBlock (id', classes, namevals) newContents
  block -> return block
```

Just like in the Bard case, now I ask it:

Can you change the code to only change the codeblock if “diagram” is in one of the classes?

And it generates the correct code for this state:

```haskell
-- Generated by ChatGPT, correct code
addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams = walkM $ \case
  CodeBlock (id', classes, namevals) contents
    | "diagram" `elem` classes -> do
        let newContents = "New contents"
        return $ CodeBlock (id', classes, namevals) newContents
  block -> return block
```

For prompt #23 I repeat prompt #25 from the Bard example:

Replace the do block with a call to `compileDiagram` that takes as arguments `attrs` and `code` and returns `IO Result`.

ChatGPT surprises me by returning an error as needed:

```haskell
-- Generated by ChatGPT
addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams = walkM $ \case
  CodeBlock (id', classes, namevals) contents
    | "diagram" `elem` classes -> do
        result <- unsafeCompiler $ compileDiagram namevals contents
        case result of
          Left err -> throwError $ "Error compiling diagram: " ++ err
          Right svg -> return $ RawBlock "html" svg
  block -> return block
```

What is more surprising is that it inserts the correct call to `unsafeCompiler`. Though, it assumes `result` is an `Either String SVG`.
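The shape ChatGPT converged on can be sketched without Hakyll or Pandoc. In the self-contained toy below, `Block` and `Attr` are hypothetical miniatures of Pandoc's types, `walkBlocksM` is a hand-written stand-in for `walkM` (unlike a plain `traverse` over the top level, it also reaches nested blocks), `IO` plays the role of `Compiler` (in Hakyll, `unsafeCompiler` lifts an `IO` action into `Compiler`), and `compileDiagramToy` is a placeholder returning an `Either`, as the generated code assumes. Only code blocks carrying the `diagram` class are touched, and a `Left` aborts the traversal:

```haskell
{-# LANGUAGE LambdaCase #-}

-- Hypothetical miniature of Pandoc's Attr: (identifier, classes, key-value pairs).
type Attr = (String, [String], [(String, String)])

-- Hypothetical miniature of Pandoc's Block type.
data Block
  = CodeBlock Attr String
  | RawBlock String String -- output format and raw content
  | BlockQuote [Block]     -- a container, so there is something nested to walk
  | Para String
  deriving (Eq, Show)

-- Stand-in for walkM: apply f to every block, children before their parent.
walkBlocksM :: Monad m => (Block -> m Block) -> [Block] -> m [Block]
walkBlocksM f = mapM go
  where
    go (BlockQuote bs) = walkBlocksM f bs >>= f . BlockQuote
    go b = f b

-- Placeholder for a real diagram compiler; it only fails on empty input.
compileDiagramToy :: [(String, String)] -> String -> IO (Either String String)
compileDiagramToy _ src
  | null src = pure (Left "empty diagram source")
  | otherwise = pure (Right ("<svg><!-- " ++ src ++ " --></svg>"))

-- Replace code blocks tagged "diagram" with raw HTML; keep all other blocks.
addDiagramsToy :: [Block] -> IO [Block]
addDiagramsToy = walkBlocksM $ \case
  CodeBlock (_, classes, kvs) src
    | "diagram" `elem` classes ->
        compileDiagramToy kvs src >>= \case
          Right svg -> pure (RawBlock "html" svg)
          Left err -> ioError (userError ("Error compiling diagram: " ++ err))
  block -> pure block
```

The guard in the first `\case` alternative falls through to `block -> pure block` when the class is missing, which is the same trick the generated code relies on.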

When I tell it to move `unsafeCompiler` to the start of the function, it generates:

```haskell
-- Generated by ChatGPT
addDiagrams :: Pandoc -> Compiler Pandoc
addDiagrams = unsafeCompiler . walkM changeCodeBlock
  where
    changeCodeBlock :: Block -> IO Block
    changeCodeBlock (CodeBlock (id', classes, namevals) code) =
      case "diagram" `elem` classes of
        True -> compileDiagram namevals code >>= \case
          Right imgPath -> return $ Para [Image (id', [], []) [] (imgPath, "")]
          Left e -> throwIO e
        False -> return $ CodeBlock (id', classes, namevals) code
    changeCodeBlock x = return x

compileDiagram :: [(Text, Text)] -> Text -> IO (Either SomeException FilePath)
compileDiagram attrs code =
  -- Implementation omitted for brevity
  -- should return Either SomeException FilePath
  undefined
```

We are on prompt #25 with the same result we got on prompt #40 with Bard. This matches last week's experience, where ChatGPT needed 16 questions to reach what took Bard 30 prompts. This time there are fewer questions overall because, I think, I learned to ask more relevant ones.

It is now time to fill in the code in compileDiagram:

As an expert Haskell programmer, how would you use `diagrams-builder` to fill in `compileDiagram`? You may use `diagrams-builder`, `diagrams-core`, and other `diagrams-*` libraries.

The answer confuses the SVG and Rasterific backends. I need to keep feeding it more and more parts of the hand-written code, but by question #50 I get:

```haskell
-- Generated by ChatGPT
import qualified Codec.Picture.Png as Png
import qualified Data.ByteString.Lazy as BS

compileDiagram :: [(Text, Text)] -> Text -> IO CDResult
compileDiagram as src = buildDiagram (diagramDef as src) >>= \case
  -- failures
  ParseErr err -> do
    hPutStrLn stderr ("parse error: " ++ err)
    return $ Failure "Parse error while compiling diagram."
  InterpErr err -> do
    hPutStrLn stderr ("interpreter error: " ++ err)
    return $ Failure "Error while compiling diagram."
  -- success
  Skipped h -> do
    let pngPath = "diagrams/" ++ T.unpack h ++ ".png"
    exists <- doesFileExist pngPath
    if exists
      then return $ Success $ T.pack $ ""
      else return $ Failure "Expected compiled diagram file not found."
  OK h dia -> do
    let pngPath = "diagrams/" ++ T.unpack h ++ ".png"
        diaSize = mkSizeSpec2D (Just 400) (Just 300)
    Png.writePng pngPath $ Png.encodePng $ renderDia Rasterific diaSize dia
    return $ Success $ T.pack $ ""
```

This is similar to Bard's results, doing slightly more: it writes the output diagram to a file and, if that file was already generated, reuses it instead of rendering again. It took much longer to reach this point.
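The caching idea in that code (name the output file after a hash of the diagram source and skip rendering when the file already exists) can be sketched on its own. `renderCached` and its render callback are hypothetical; the hash is simply passed in, much like the `Skipped h` and `OK h dia` cases above carry a hash `h`. Only `doesFileExist` and `createDirectoryIfMissing` from the `directory` package are real library calls:

```haskell
import System.Directory (createDirectoryIfMissing, doesFileExist)

-- Render an image only if no file for this hash exists yet.
-- Returns the output path and whether rendering actually happened.
renderCached
  :: FilePath             -- output directory (e.g. "diagrams")
  -> String               -- content hash, used as the file name
  -> (FilePath -> IO ())  -- hypothetical renderer that writes the file
  -> IO (FilePath, Bool)
renderCached dir h render = do
  let path = dir ++ "/" ++ h ++ ".png"
  cached <- doesFileExist path
  if cached
    then pure (path, False) -- reuse the earlier output
    else do
      createDirectoryIfMissing True dir
      render path
      pure (path, True)
```

Calling it twice with the same hash renders at most once; the second call just returns the existing path.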

Overall, I asked ChatGPT 17 times to do something, 9 times to fix an error, 5 times to explain what some code does and 19 times to course correct. I learned from last week that it cannot explain the `diagrams-*` APIs; it seems they were either not included in its training data or are too weird.

Last week, I saw one more strange behavior from ChatGPT: when it got very confused, it claimed that an internal error occurred and I had to refresh the page and start the chats again. This did not happen this time.

Conclusions 🔗

Note: This section has been rewritten just before publishing the article. It takes into account the experiments from last week and from this weekend.

Neither ChatGPT nor Bard was able to write the entirety of the code for me. I still needed to split questions into very small snippets, going step by step. This is after writing the code myself, so the course-correction questions (many more than when I first ran this experiment a week ago) give significant hints toward the solution that I chose. With that help, both AI bots were able to complete the code.

Both AI tools got stuck the moment I tried to bring in a library with a more complex API. The `buildDiagram` function takes a single `BuildOpts b v n` as input and has a large number of constraints on the type variables. To build the argument, one would start from an empty set of options (via `mkBuildOpts`) and then add to them using specific lenses. I am not sure how much code using this style of API was present in the data these LLMs learned from, and I am giving them a passing grade here because the lens errors are not easily readable; these errors are hard to fix even for moderately experienced Haskell programmers! However, if I am to compare these tools, Bard seems more open to moving out of a local minimum than ChatGPT: it only took 10 questions to produce valid diagram-producing code, compared to 25 for ChatGPT!
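For readers who have not met this style of API, the "defaults plus lenses" pattern can be sketched with nothing but `base`. Everything here is hypothetical: `Opts` is a toy record (not diagrams-builder's `BuildOpts`, which has type parameters and many constraints), `mkOpts` plays the role of the defaults, and the lenses and the `.~` setter are written by hand in the van Laarhoven style that the `lens` library uses:

```haskell
import Data.Function ((&))
import Data.Functor.Identity (Identity (..))

-- Hypothetical options record, loosely inspired by a "build options" type.
data Opts = Opts
  { _imports :: [String] -- modules to import
  , _expr :: String      -- name of the expression to render
  , _width :: Int
  }
  deriving (Eq, Show)

-- Hypothetical defaults, playing the role of an mkBuildOpts-style constructor.
mkOpts :: Opts
mkOpts = Opts {_imports = [], _expr = "diagram", _width = 400}

-- Van Laarhoven lenses: a getter and a setter fused into one function.
imports :: Functor f => ([String] -> f [String]) -> Opts -> f Opts
imports f o = (\v -> o {_imports = v}) <$> f (_imports o)

expr :: Functor f => (String -> f String) -> Opts -> f Opts
expr f o = (\v -> o {_expr = v}) <$> f (_expr o)

width :: Functor f => (Int -> f Int) -> Opts -> f Opts
width f o = (\v -> o {_width = v}) <$> f (_width o)

-- Setter operator in the style of the lens library, defined by hand here.
infixr 4 .~
(.~) :: ((a -> Identity b) -> s -> Identity t) -> b -> s -> t
l .~ v = runIdentity . l (const (Identity v))

-- Start from the defaults and override fields one at a time.
myOpts :: Opts
myOpts =
  mkOpts
    & imports .~ ["Diagrams.Prelude"]
    & width .~ 500
```

`myOpts` starts from the defaults and overrides two fields, so it equals `Opts ["Diagrams.Prelude"] "diagram" 500`.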

Overall, ChatGPT provided valid-looking Haskell code most of the time, modulo type errors and the use of non-existent APIs, whereas Bard from a week ago was confused between generating Python and Haskell code, even though Python was not used at all in any of the prompts. Bard was also confused between the diagrams and Graphviz DSLs, something I have not seen with ChatGPT. Bard from this weekend did not have this problem, though, producing valid Haskell code most of the time. I think this indicates that more training occurred during this time.

UX-wise, Bard produced answers faster and offered drafts to choose from, increasing the chances that one answer was correct or easier to fix. Sure, this requires consulting the documentation or testing each draft individually, but it makes Bard a better pair-programming assistant.

Another benefit of Bard is that it had internet access, so I could point it to a Hackage page and ask it to explain a certain type; in some cases it provided useful results. Then again, by that time I could have read the documentation on that page directly.

One downside of ChatGPT is that spending significant time outside of the ChatGPT browser tab makes it produce an error message on the next prompt, requiring a restart. Since I was taking notes for this article and testing the code in parallel, this slowed me down even more.

In the end, we must recall that the task I asked the bots to solve is a very complex one. There is hope that future generations of these tools will be more capable, especially if they are trained on more Haskell data. I saw a lot of examples in popular languages and cherry-picked samples in less popular ones, but when you try to actually use these tools to do work in the less popular languages, they are not so useful anymore.

I will continue testing these LLMs. They will improve, and I will get better at prompting.

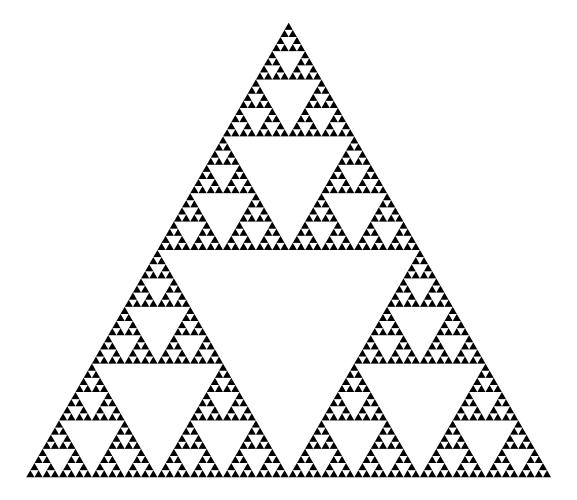

To test that we now have the full solution, after writing the code myself, let’s draw a trivial fractal:

```{.diagram width=500 caption="Drawing a Sierpinski fractal"}
sierpinski 1 = eqTriangle 1
sierpinski n =     s
               ===
            (s ||| s) # centerX
  where s = sierpinski (n-1)

diagram = sierpinski 7 # center # lw none # fc black
```

All good! Now I can continue exploring VDGF.

Initially, I was planning to release this article the weekend following the announcement. But the AI assistance was not too helpful, so I had to do the needed code changes manually. During the week I couldn't do much work on the blog engine, so I am releasing this a week later than desired.

References:

- Tristan Needham, Visual Differential Geometry and Forms: A Mathematical Drama in Five Acts – Princeton University Press, 2021 https://doi.org/10.2307/j.ctv1cmsmx5
